Course Overview

This course fulfils the requirements of the Software Developer apprenticeship standard set by the Institute for Apprenticeships.

The content aims to go beyond the minimal requirements of the standard, and to provide apprentices with a breadth and depth of knowledge of software engineering concepts and skills that enables them to work in a range of software development roles and to contribute productively to their employment as soon as possible.

The goal of enabling apprentices to contribute productively as soon as possible is the reason why the first part of the course is a 5-week full-time “bootcamp”. The bootcamp is made up of a series of exercises that introduce a broad range of concepts and get the apprentices to apply those new concepts in practical exercises.

In our experience, once apprentices have completed the bootcamp, they have a toolkit and the confidence to start taking on new challenges and contributing significant value for their employer.

From this point the course moves to undertaking a series of 14 modules, where each module is allocated 4 weeks. During this time the apprentice performs the work that their employment generally requires them but also over the course of a module’s 4 weeks, the apprentice does a deep-dive into that module’s topic. The deep-dive includes self-led reading of the module content, a group workshop to undertake practical exercises on the module’s topic, and a separate group review and discussion.

Therefore, following the breadth of experience gained rapidly during bootcamp, the apprentices focus on gaining depth of knowledge and experience, and regularly relating the course back to their employment.

Taking these two approaches in the course content means that the learners are setup to be successful and adaptable software developers.

Course Structure

The course is structured in three major phases, beginning with the bootcamp followed by ongoing training and finally End Point Assessment period.

The course is predominantly undertaken in a single programming language, with small amounts of other languages when appropriate (for example, for web development or databases).

Bootcamp

The 5-week bootcamp is full-time led by the tutor and covers the full development stack.

The first three weeks of bootcamp consist of the following allocation of exercises to days. There is a slide deck presented at the start of each day over this period.

| Week | Day | Exercise | Topics |

|---|---|---|---|

| 1 | 1 | FizzBuzz | Writing logical and maintainable code |

| 2 | SupportBank (part 1) | File processing, exception handling and logging | |

| 3 | SupportBank (part 2) | ||

| 4 | BusBoard (part 1) | Calling APIs including combining data from different sources | |

| 5 | BusBoard (part 2) | ||

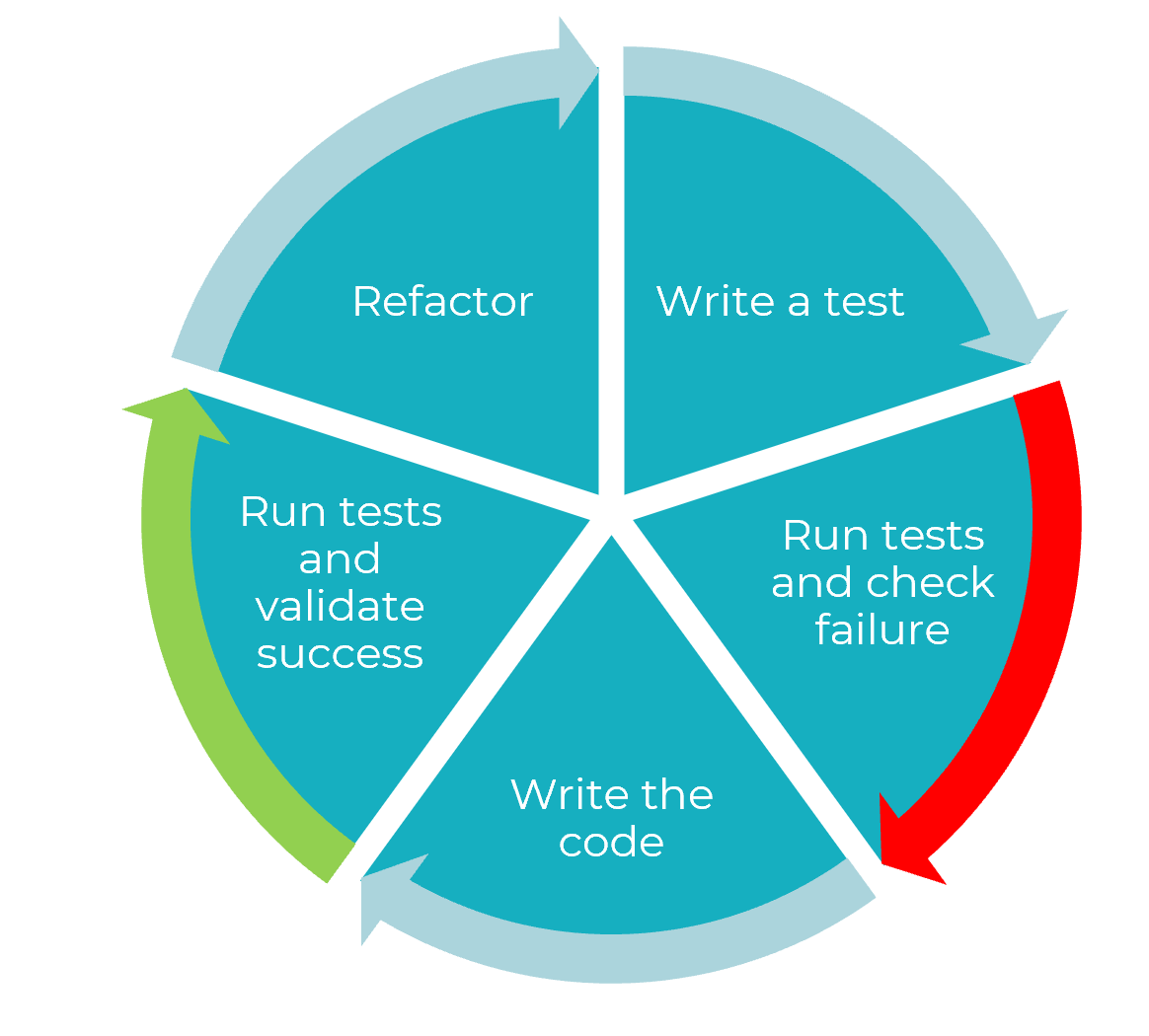

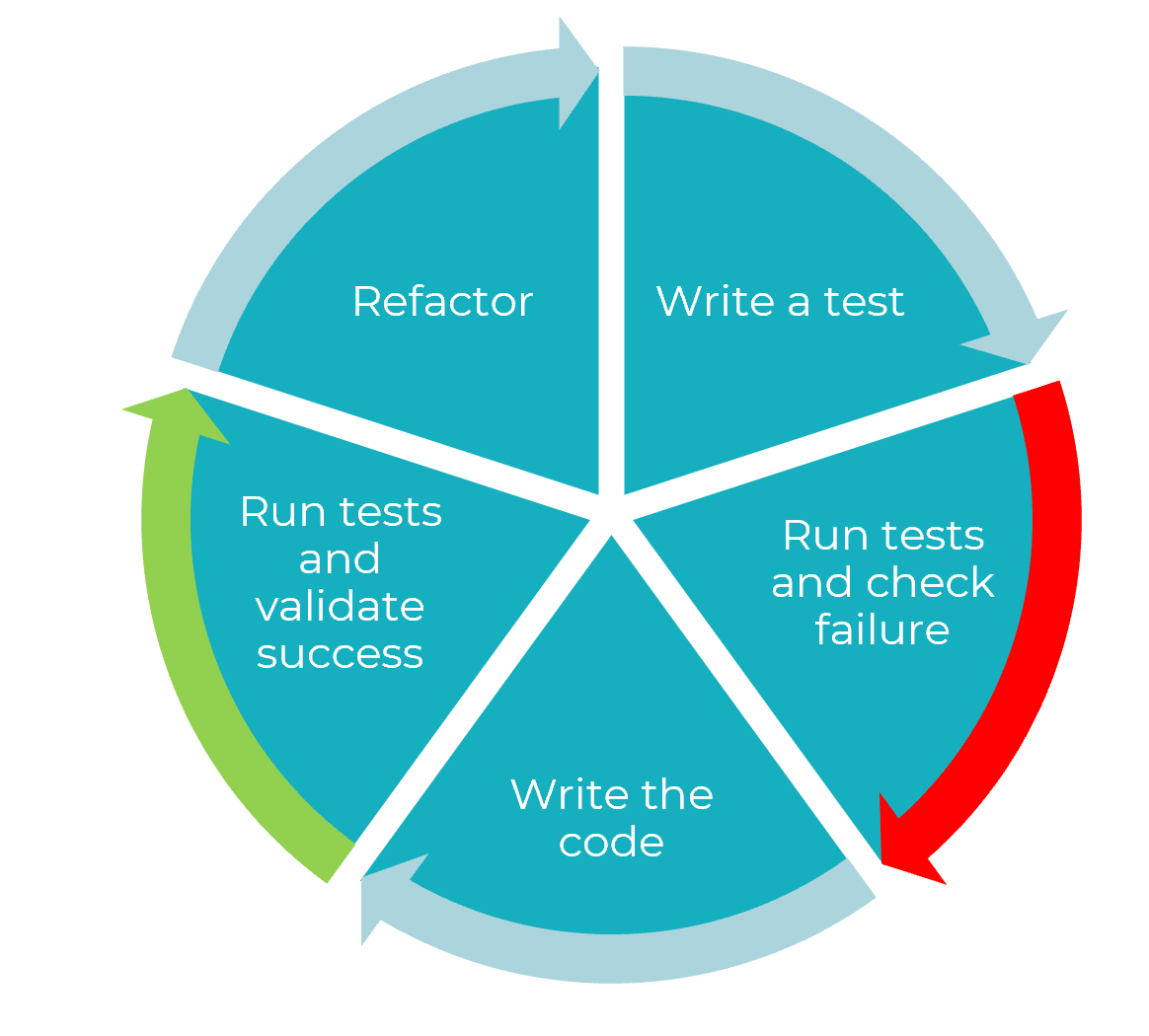

| 2 | 1 | Chessington (part 1) | Testing, including unit tests, frameworks and Test Driven Development |

| 2 | Chessington (part 2) | ||

| 3 | Bookish (part 1) | Creating and connecting to a database, the MVC model and effective user interfaces | |

| 4 | Bookish (part 2) | ||

| 5 | Bookish (part 3) | ||

| 3 | 1 | Bookish (part 4) | |

| 2 | DuckDuckGoose (part 1) | Version control in git, working in shared codebases and continuous integration | |

| 3 | DuckDuckGoose (part 2) | ||

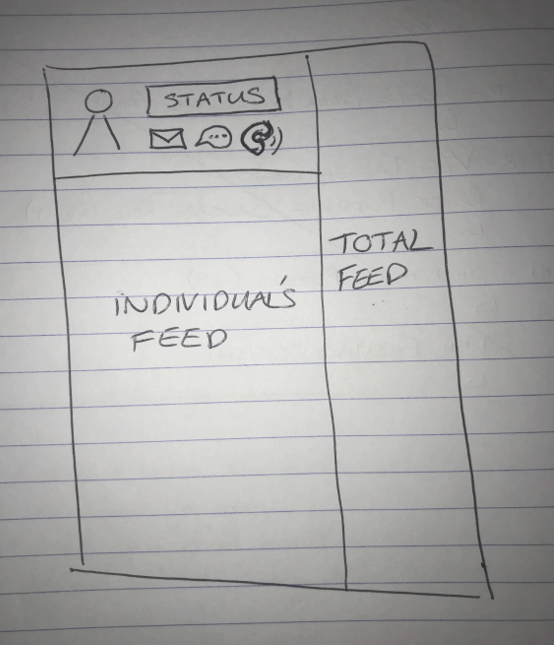

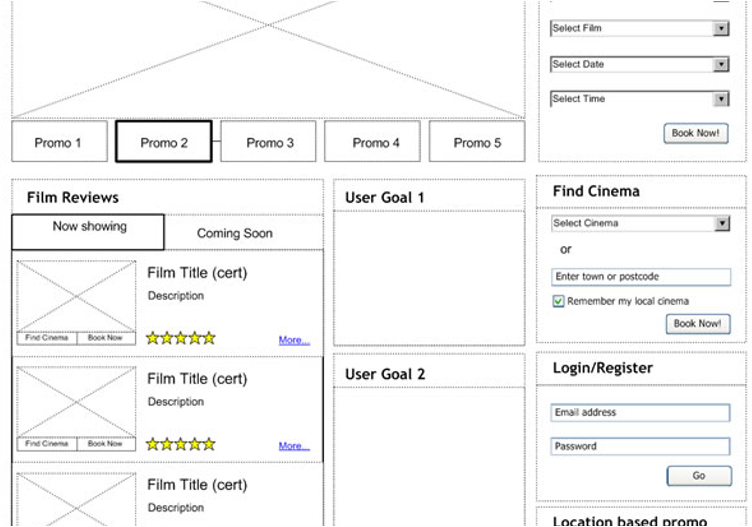

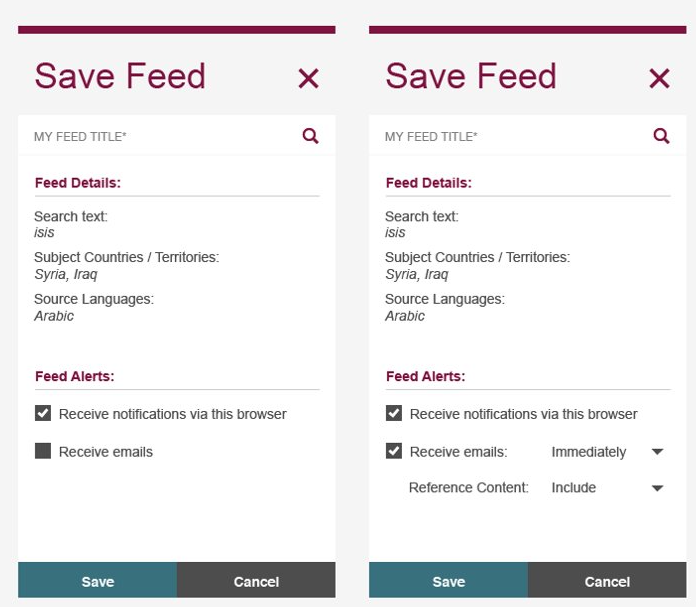

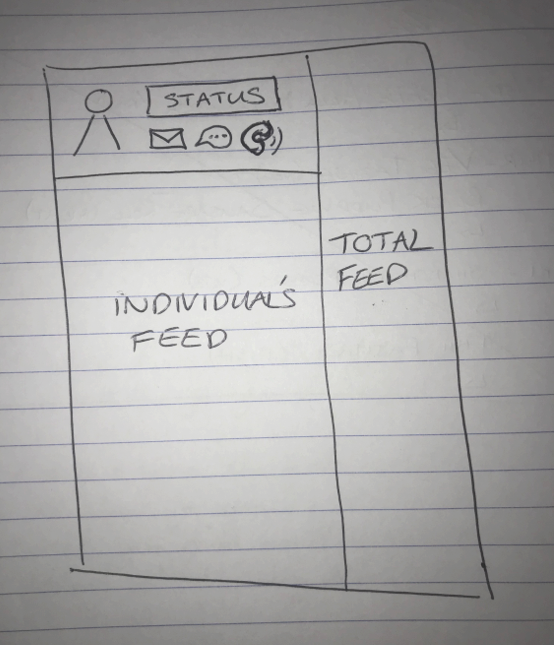

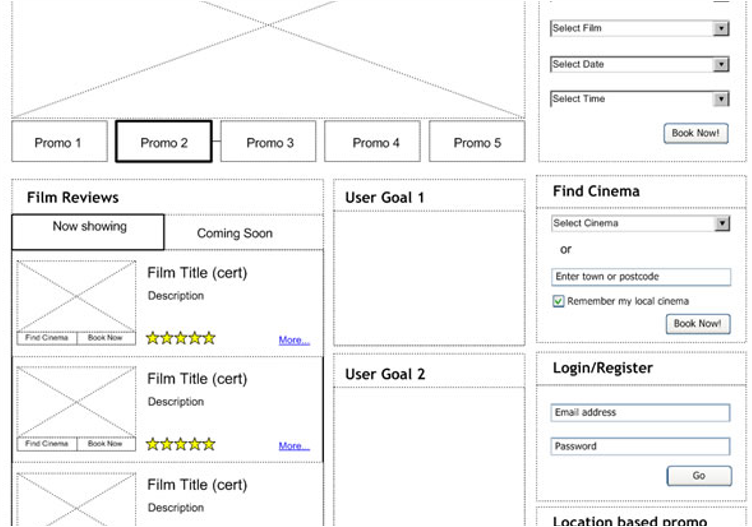

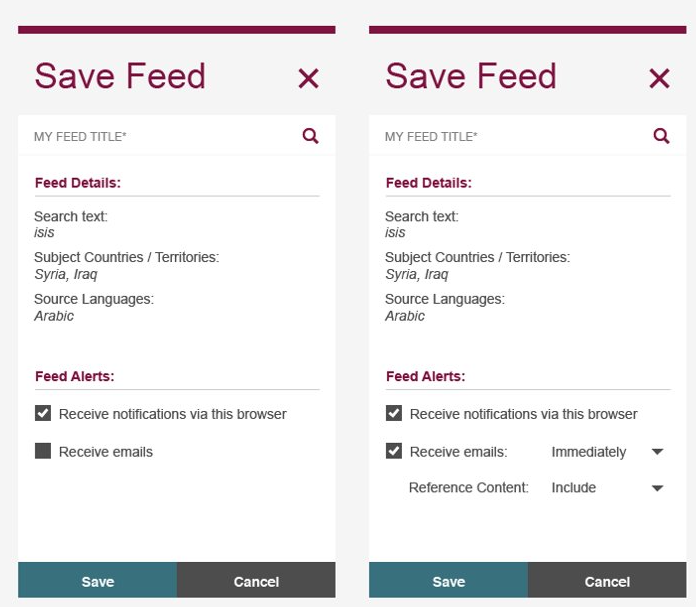

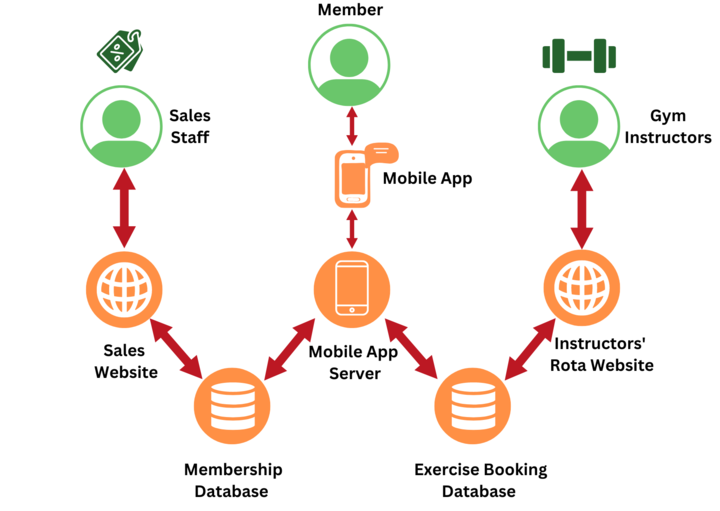

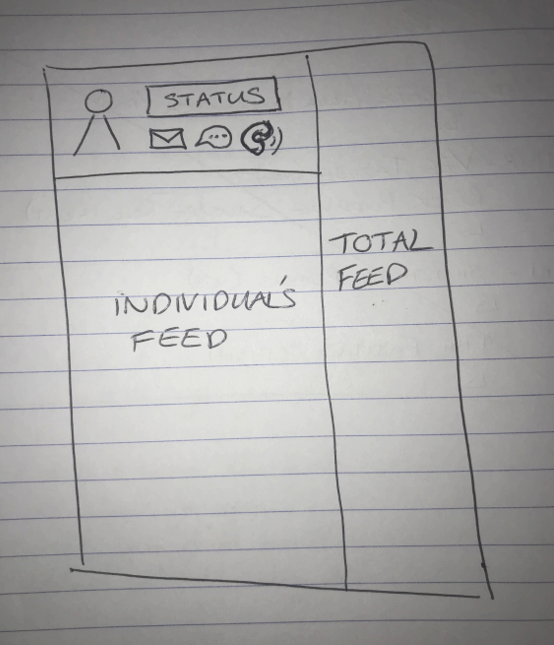

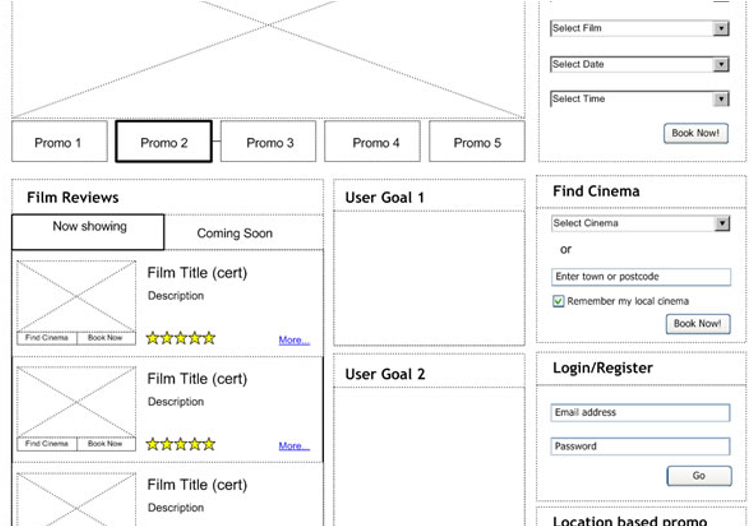

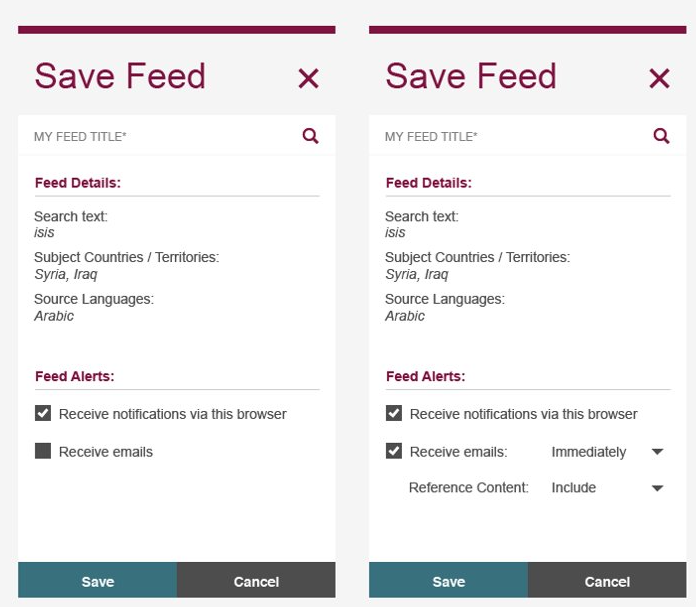

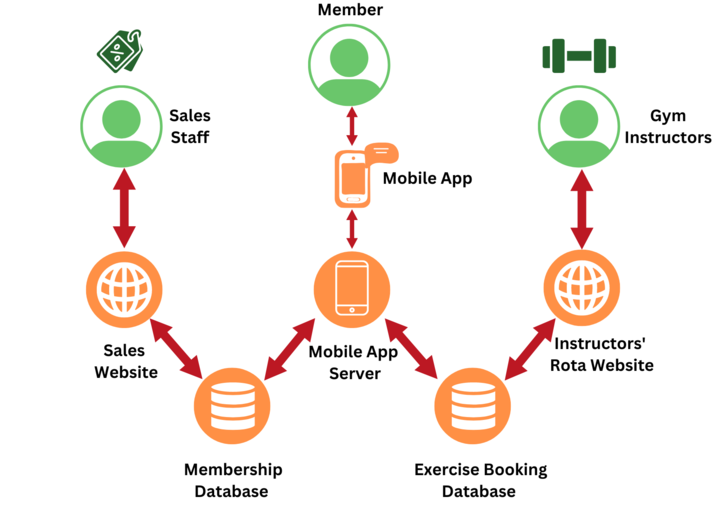

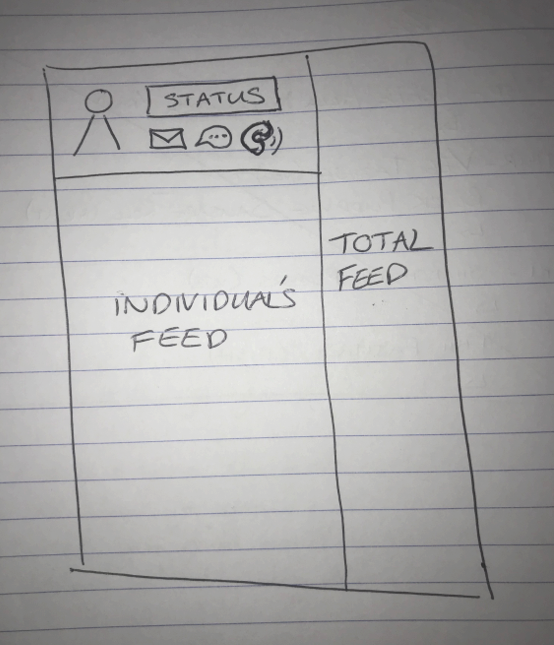

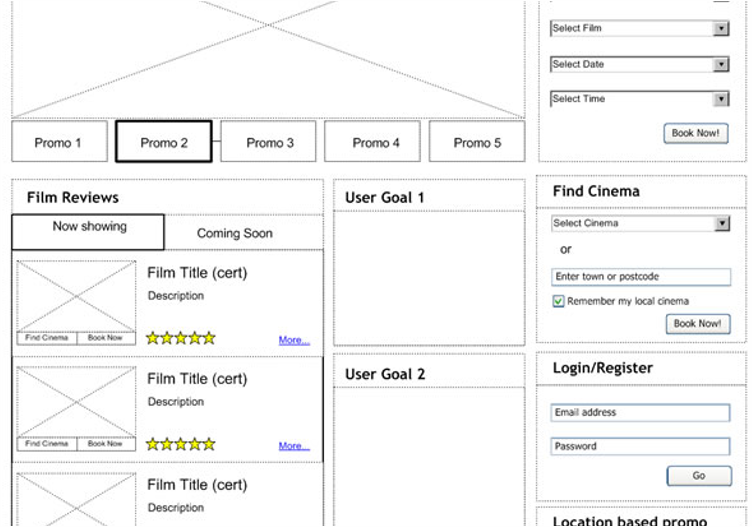

| 4 | Mini-project planning (part 1) | Producing wireframes, user stories and technical design from a project brief | |

| 5 | Mini-project planning (part 2) |

Weeks 4 and 5 of bootcamp are spent implementing the project that the learners planned over the last two days of week 3. This will be done in groups (suggested group size being 8-10). On the final day of week 5, each group will present a demo of their project to their trainer (and potentially others).

Ongoing training

The ongoing training phase of the course consists of 14 modules that are completed part-time while the learner is working for their employer. During this period each learner also gathers a portfolio of evidence of work that they have done in the course of their employment.

Each module addresses one topic in-depth and is intended to take 4 weeks during which the learner is expected to undertake the following:

- Week 1: full day; learner performs self-led reading of the module content and has a one-to-one coaching call with their PDE.

- Week 2: full day; practical workshop involving all learners and the trainer(s), working through the exercises for that module.

- Week 3: full day; learner works independently on their portfolio.

- Week 4: half day; group workshop involving all learners and the trainer(s), in which the module is discussed and related to each learner’s employment. Each of these workshops has a slide deck of questions to review the module content, with the remaining time for group discussion and self-guided work on completing module exercises and/or their portfolio.

The modules are:

- Object-oriented programming

- Functional programming

- Asynchronous programming

- Tests (Part 1)

- Tests (Part 2)

- Databases

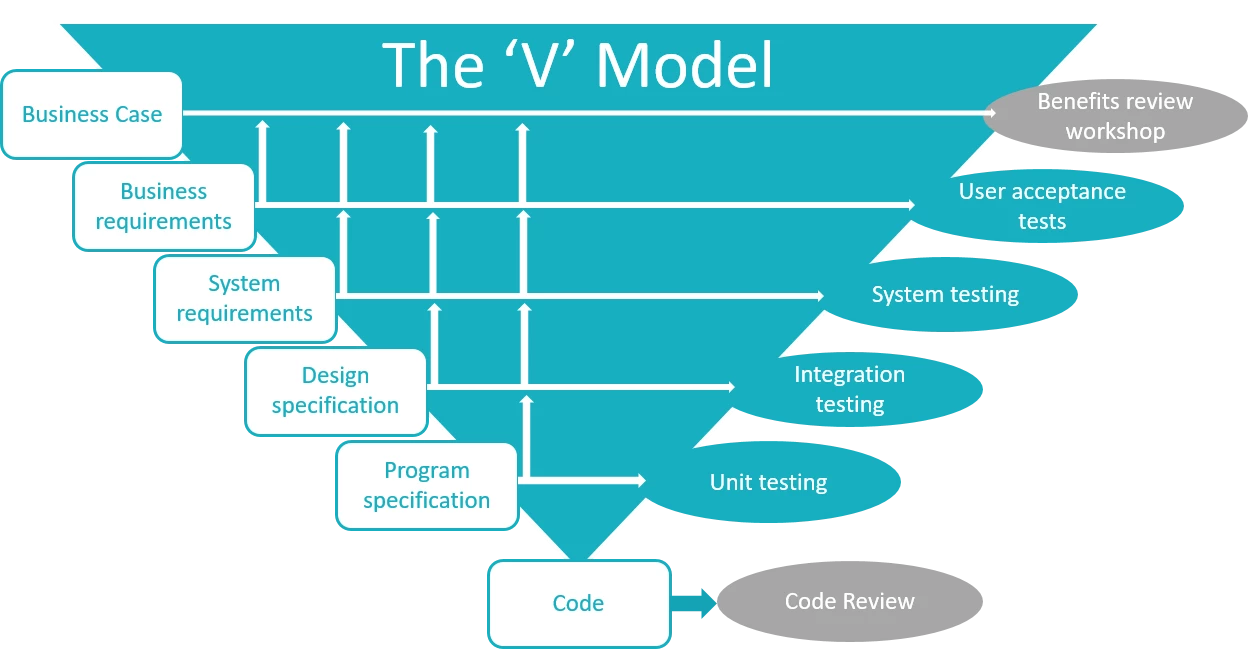

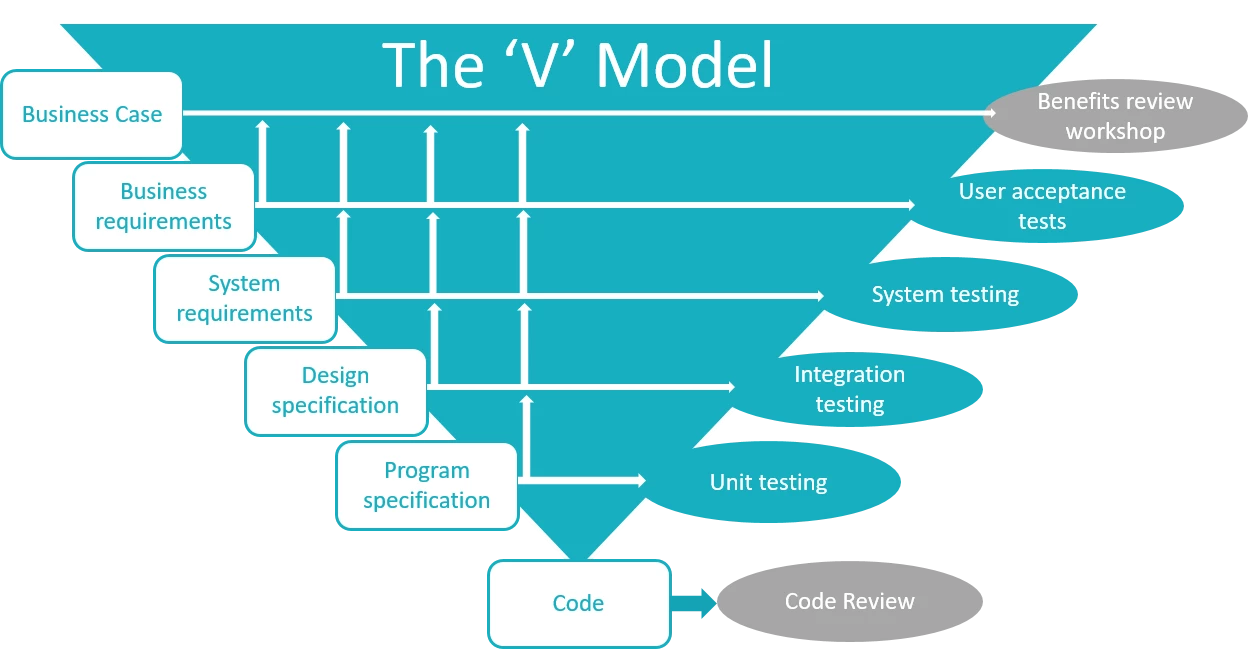

- The Software Development Life Cycle

- Further HTML & CSS

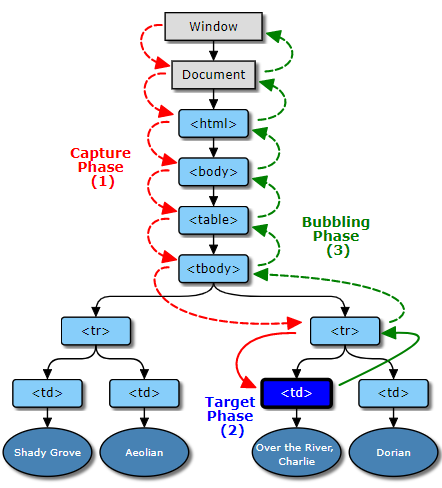

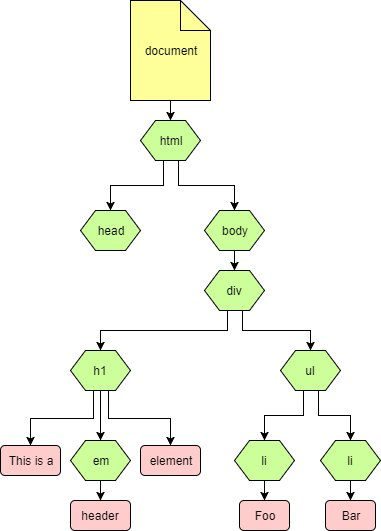

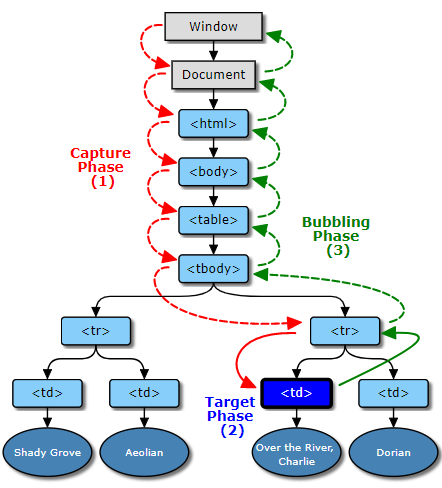

- Further Javascript, the DOM and bundlers

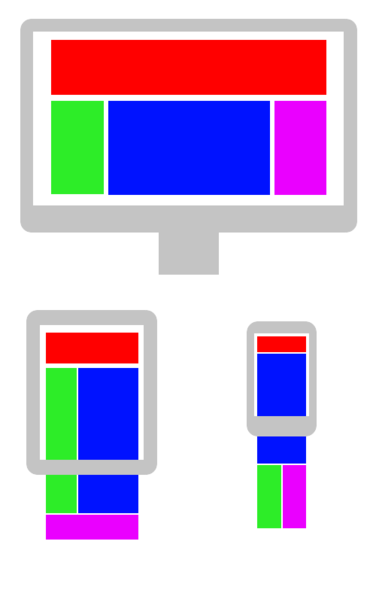

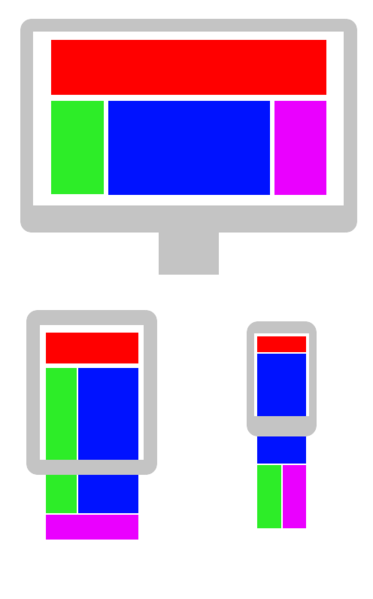

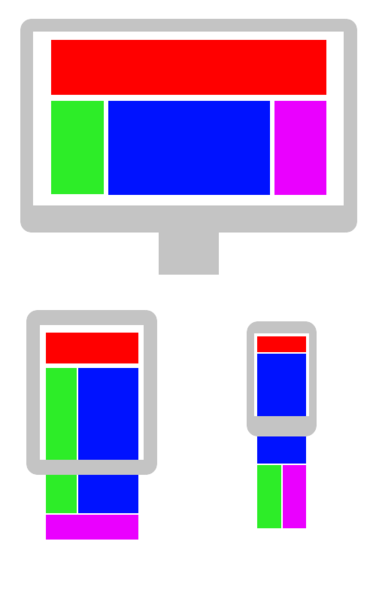

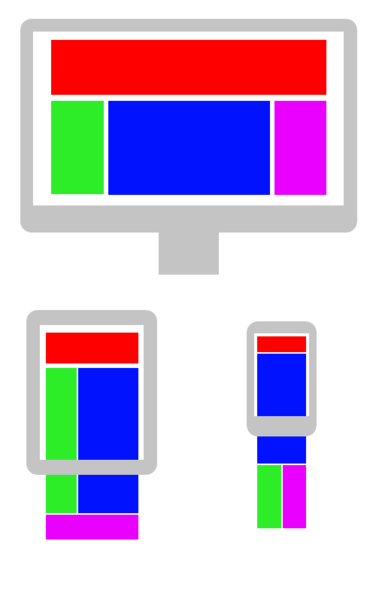

- Responsive design and accessibility

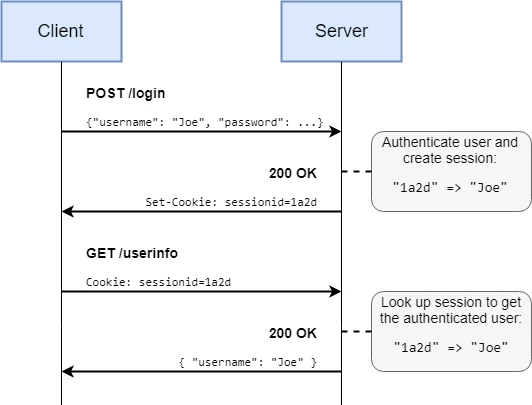

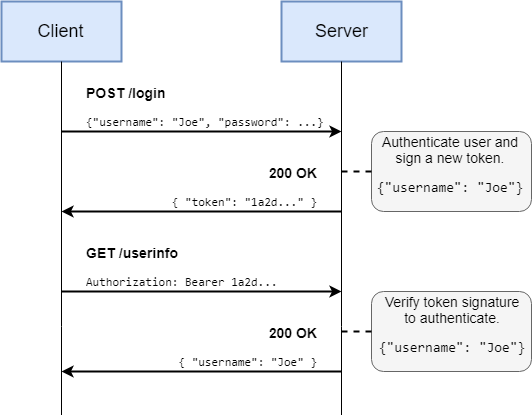

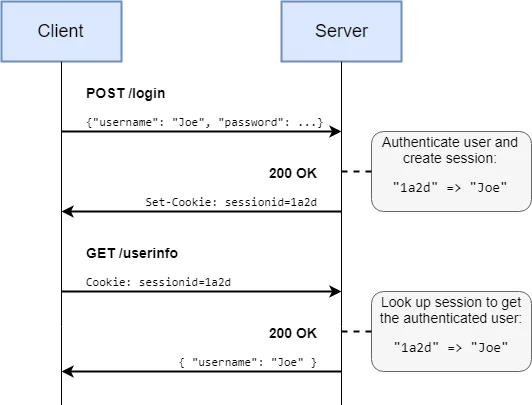

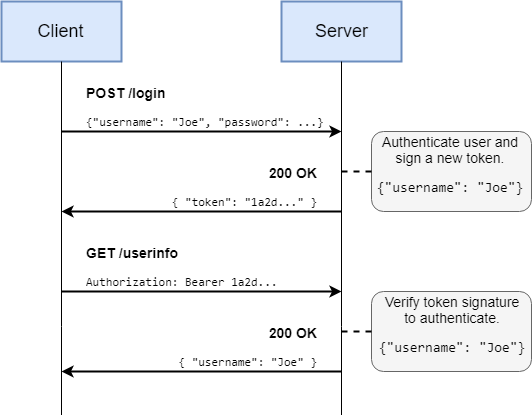

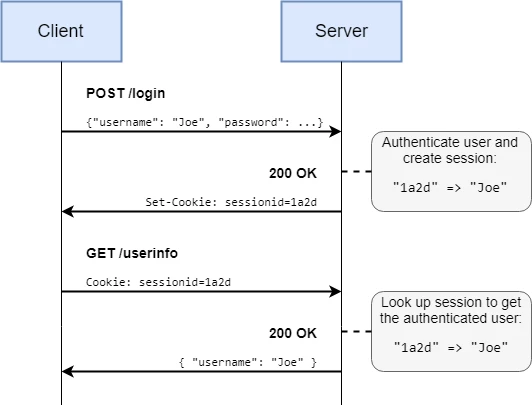

- Web servers, auth and security

- Data structures and algorithms

- Infrastructure and deployment

- EPA preparation

End Point Assessment Period

End Point Assessment (EPA) commences once a work-based project has been agreed between the learner and their employer, and approved by the British Computer Society (BCS), who are the assessors. The BCS have requested that the EPA Period duration be 6 months.

During the EPA period, the learner undertakes the agreed work-based project as part of their normal employment. Current advice from the BCS on the timings for this period are:

- 7 weeks performing the work-based project

- 2 weeks writing up the report

At some time in the remainder of the EPA period, the assessors will review the learner’s portfolio of work and address it in the apprentice’s professional discussion.

Portfolio Requirements

This document is a guide for skills coaches and apprentices addressing what needs to be covered in the portfolio that the learners produce during the ongoing training period.

Soon after each learner has started the “ongoing training” phase of the course, their skills coach should introduce them to the purpose of the portfolio, how learners should build it and how it will be assessed.

Purpose

The purpose of the portfolio is to present evidence that during the course, the apprentice’s on-the-job experience has given them real-world opportunities to learn and apply core principles of being a software developer. Over the period of the apprenticeship, the portfolio should demonstrate that the apprentice as an individual has undertaken progressively more challenging tasks and roles.

How the portfolio should be built

Over time, you as an apprentice should build a range of experience in the work you undertake for your employer. The evidence that you gather to demonstrate that should come from real-world documents (after necessary redactions), code you’ve written, images of features you’ve implemented, etc. – your skills coach will be able to discuss the possibilities in detail with you, and the amount of written context you should give for each item.

You should make sure that you’re working on the portfolio regularly rather than leaving it until late in the process. The course timetable has time set aside during every module cycle which is intended to be spent on portfolio preparation.

When choosing what should be to the portfolio, consider the assessment critera that need to be prioritised. See the section Criteria below for a list. As the course goes on, you might find that there are some necessary critera for which you have no evidence in your portfolio; in that case you should discuss with your Line Manager whether you can be assigned tasks outside your usual work so that you can build the experience and gather the evidence.

How the portfolio will be assessed

After all the training modules are complete, you will go through the End-Point Assessment process. There are a few parts to that which will be discussed in detail closer to the time. One of the pieces of EPA assessment is a one-hour Professional Discussion with an assessor; this covers some general questions as well as questions directly related to your portfolio.

Criteria

This section goes through the criteria that are used to assess your professional discussion and portfolio. Each criteria corresponds to one or more KSBs (Knowledge, Skills and Behaviour) as defined in the Software Developer apprenticeship standard – you can see the individual KSB definitions on that page.

In order to gain a pass in the overall professional discussion you must meet the following criteria:

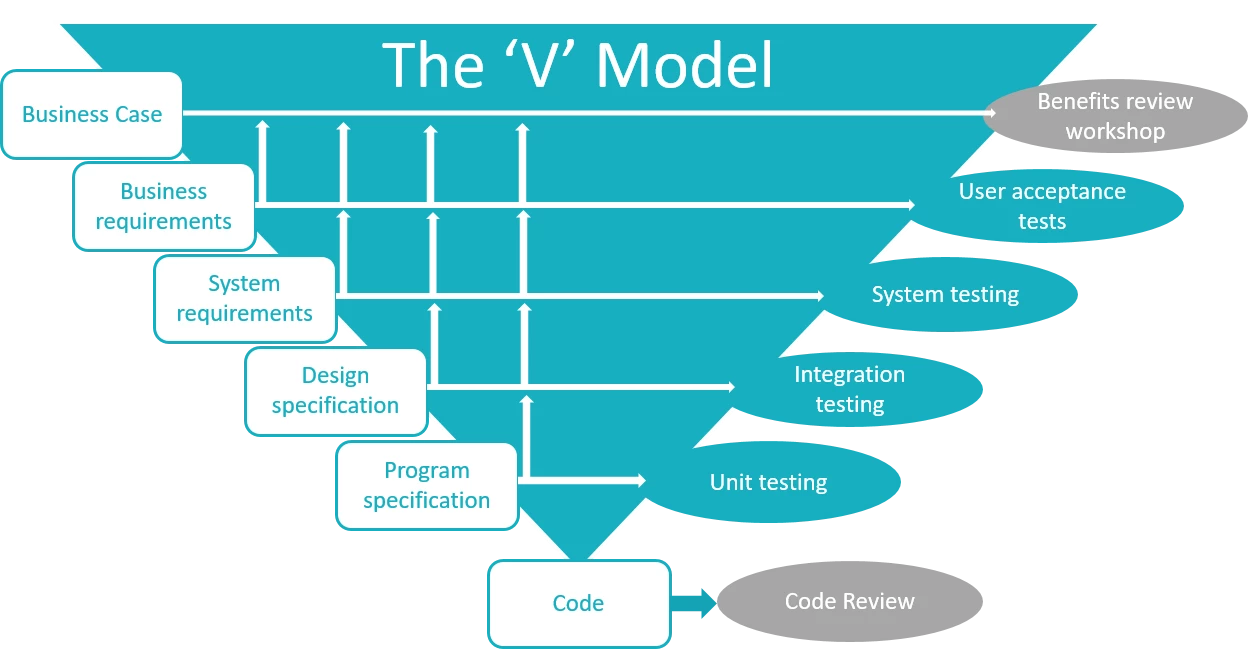

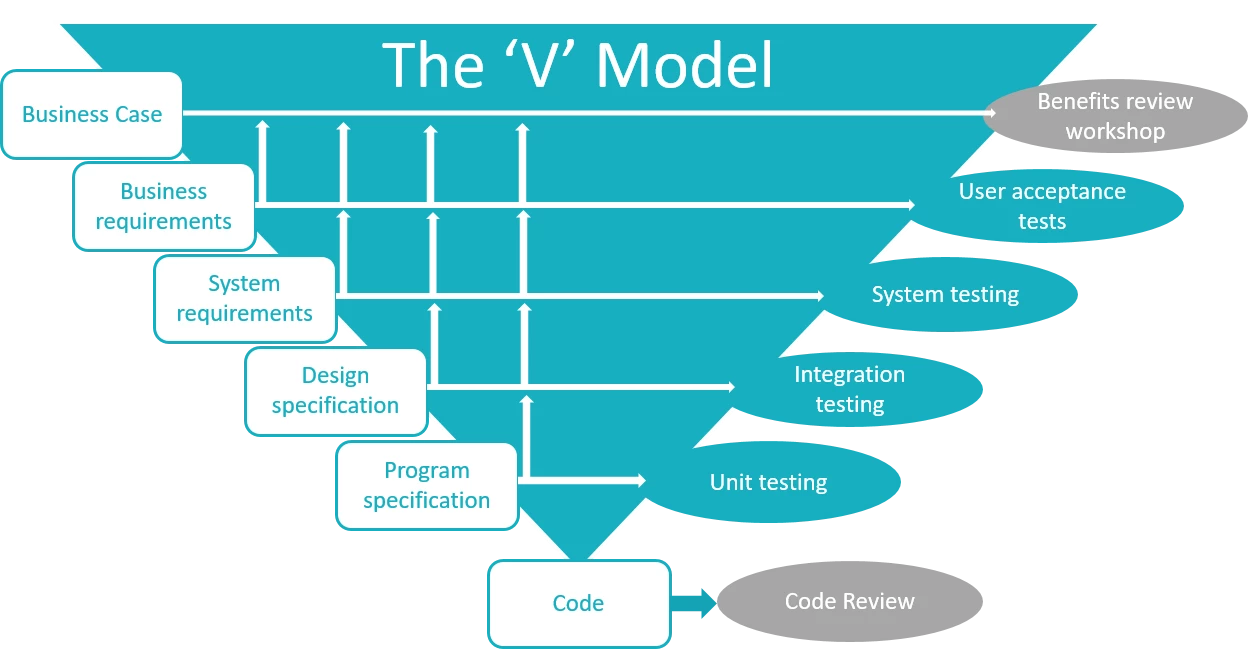

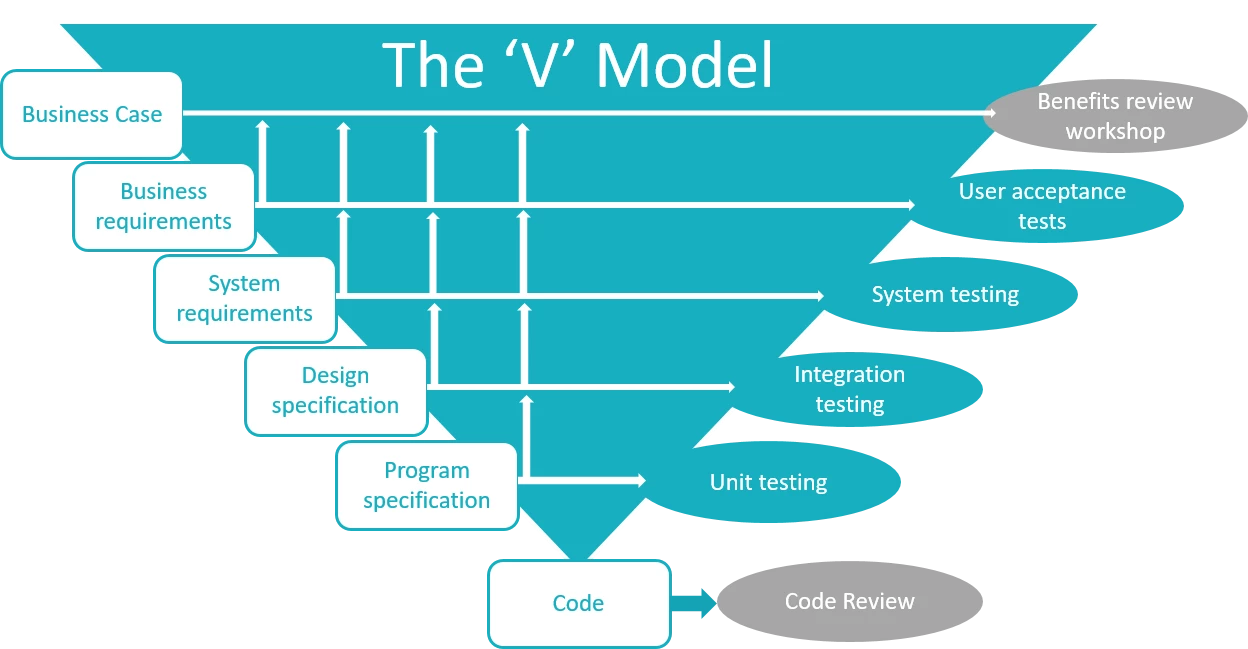

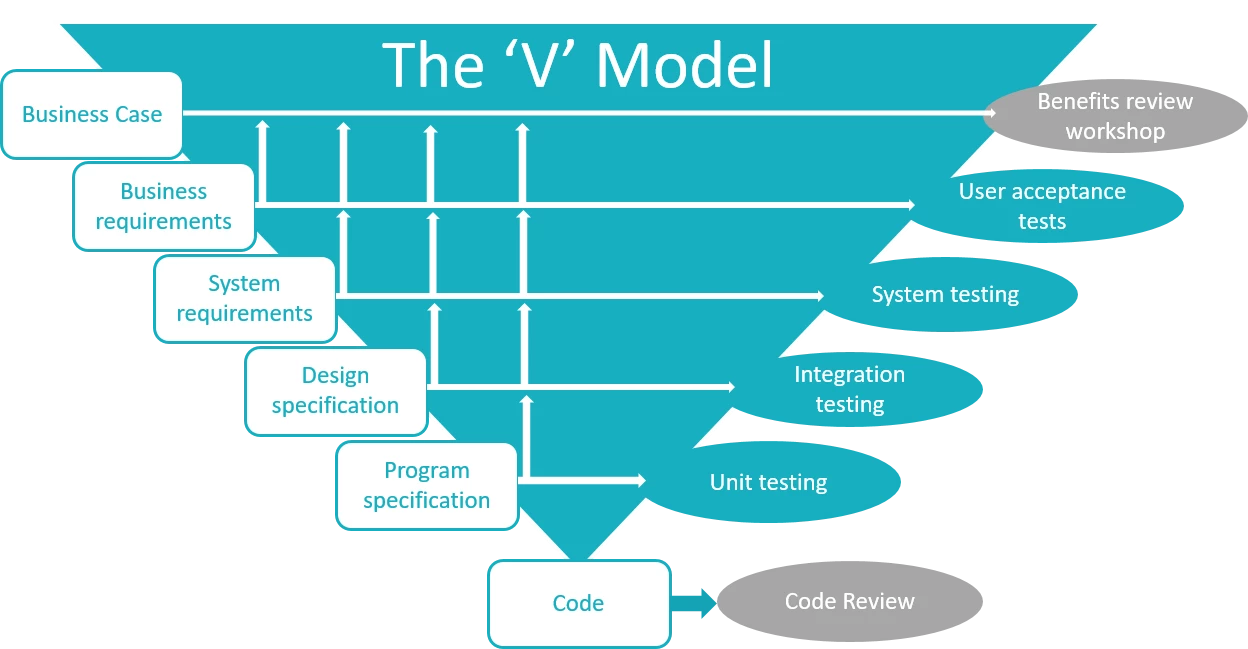

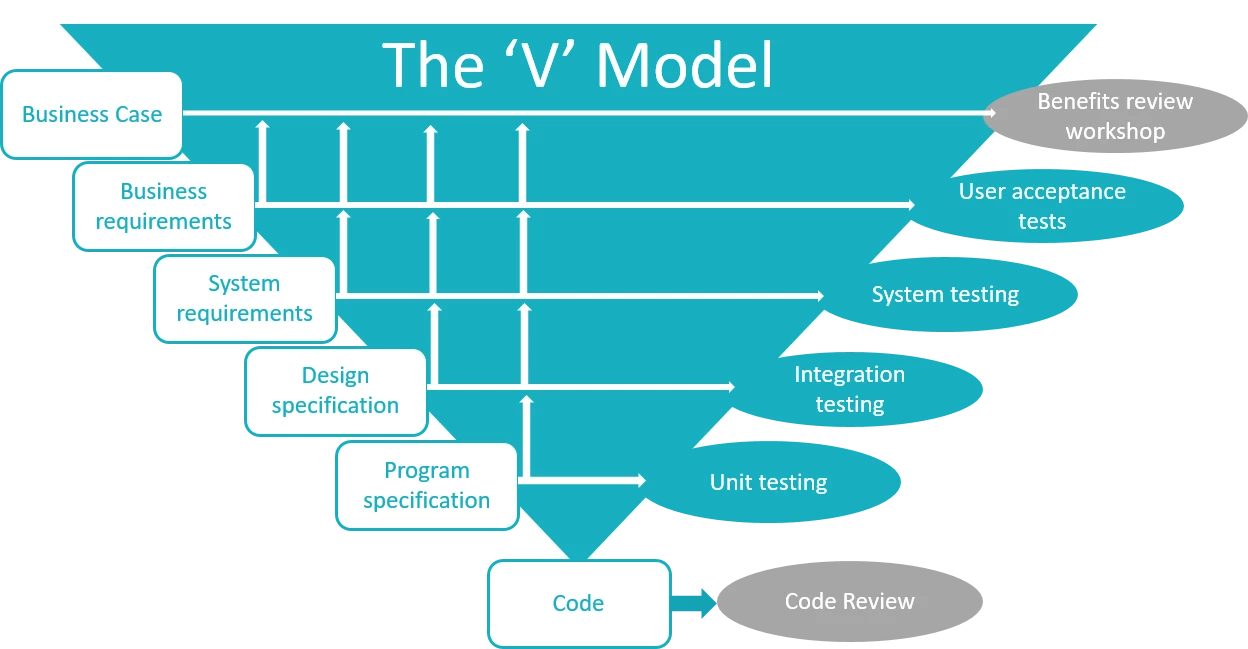

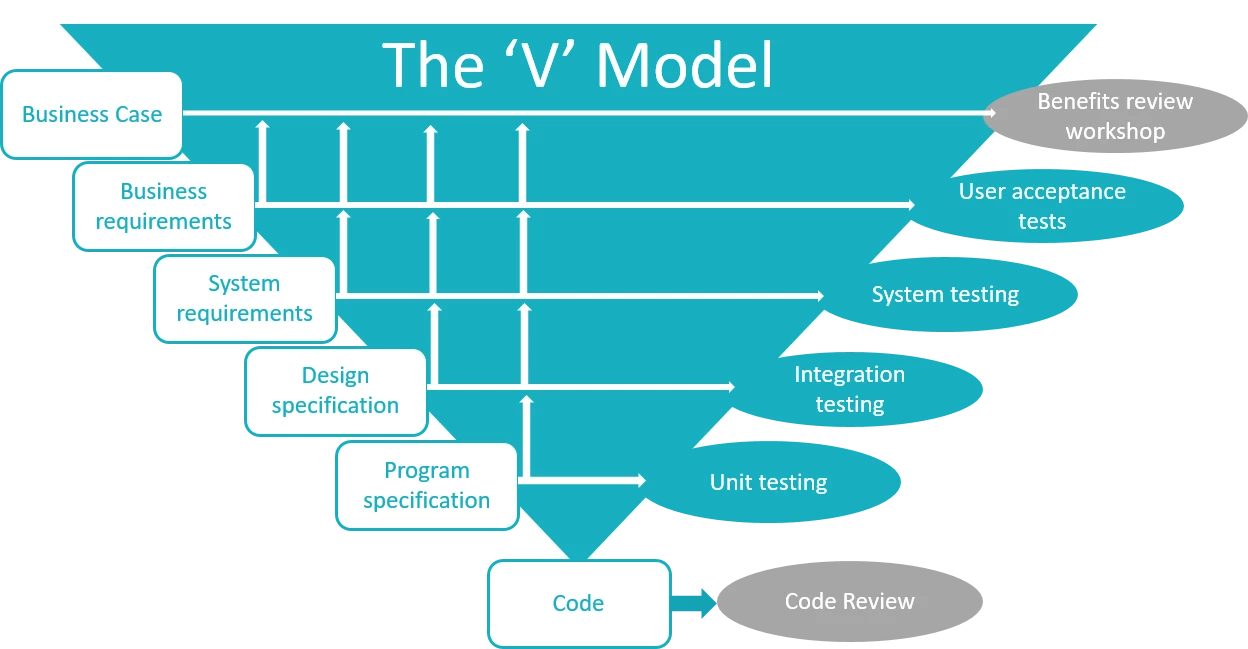

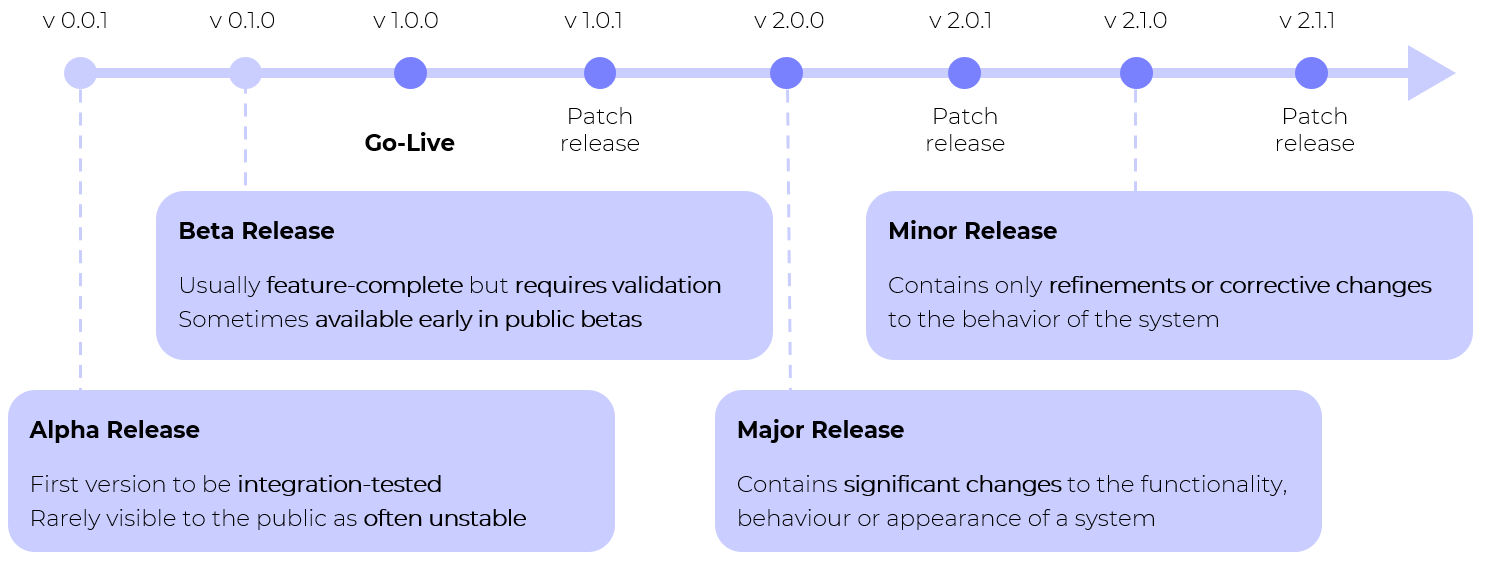

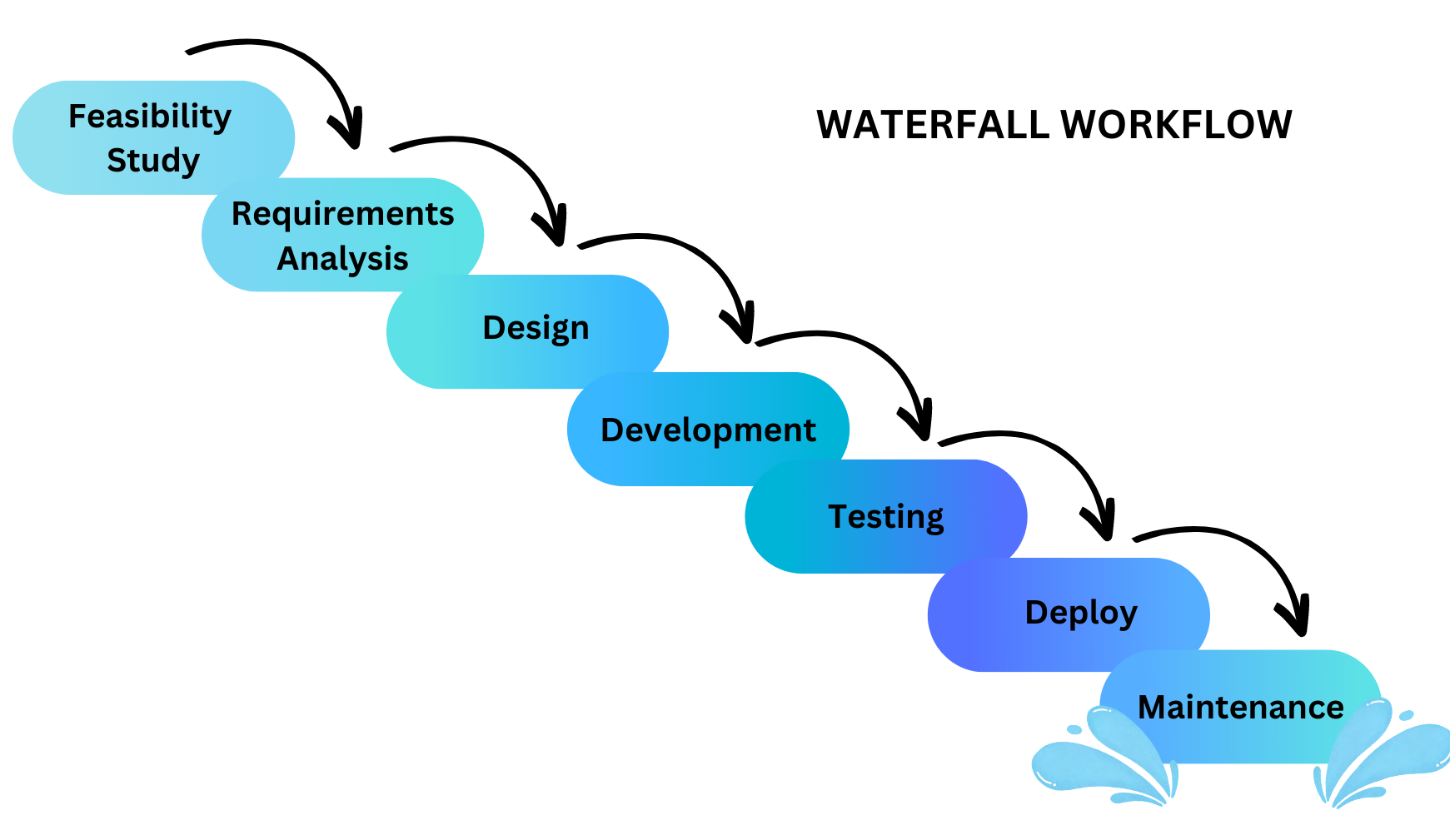

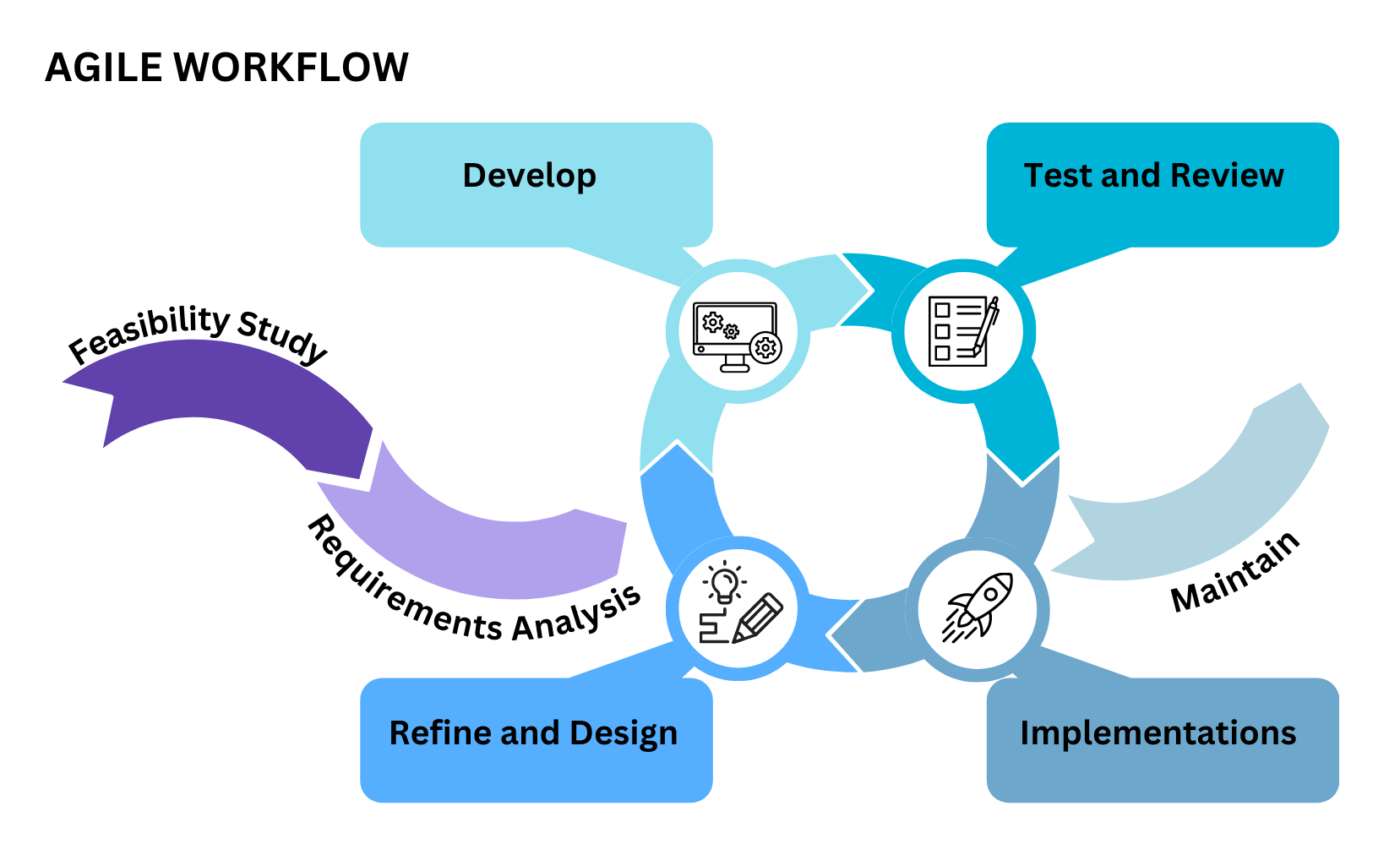

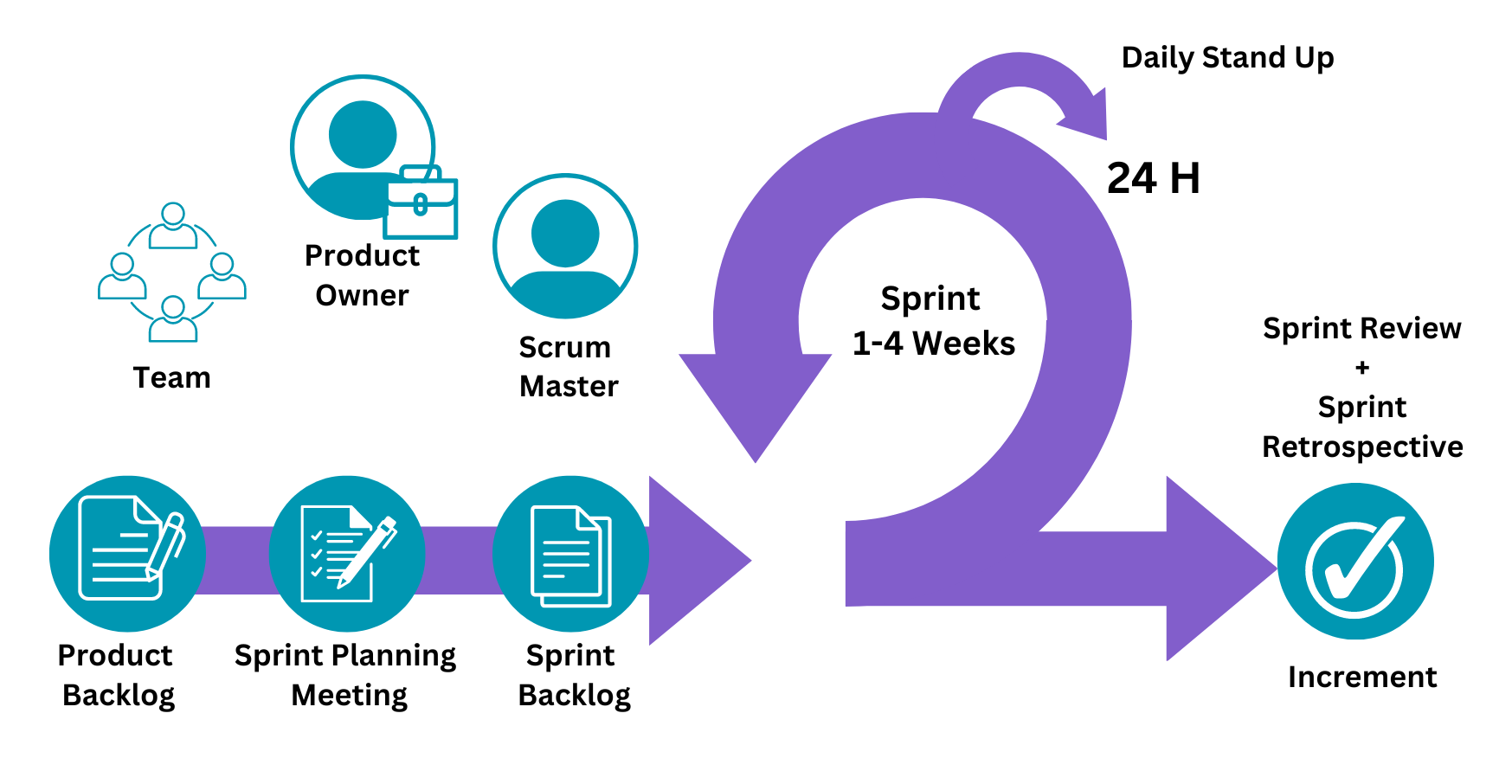

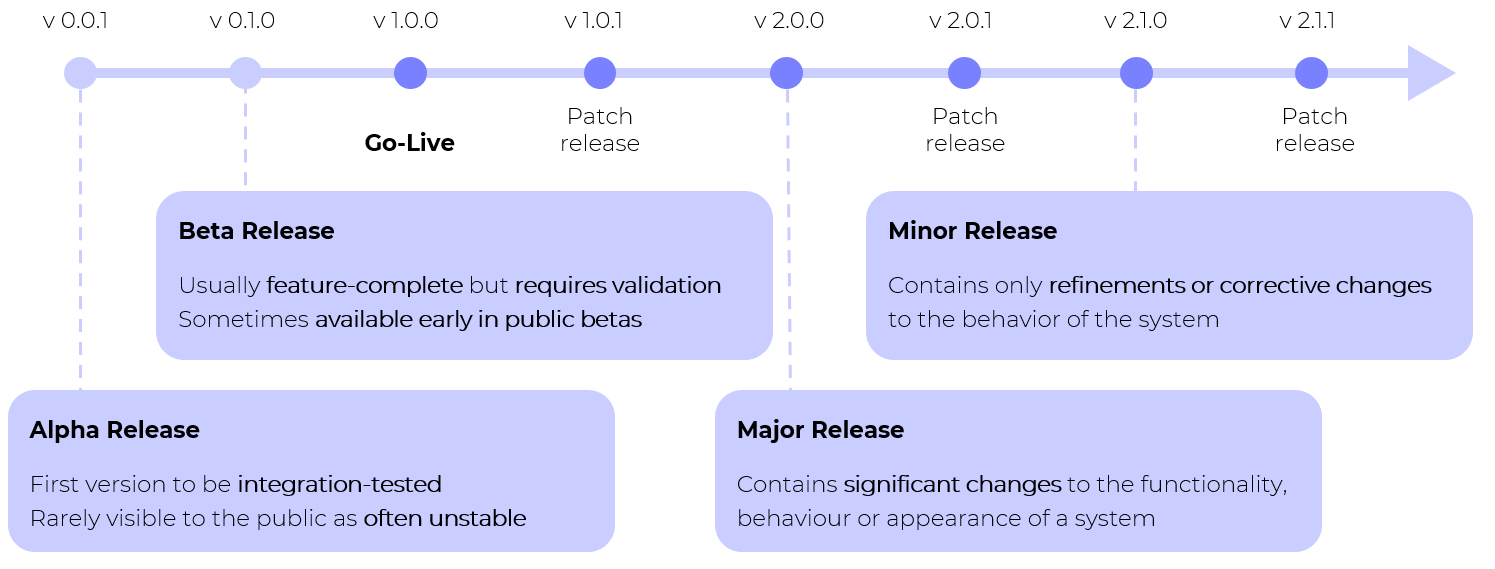

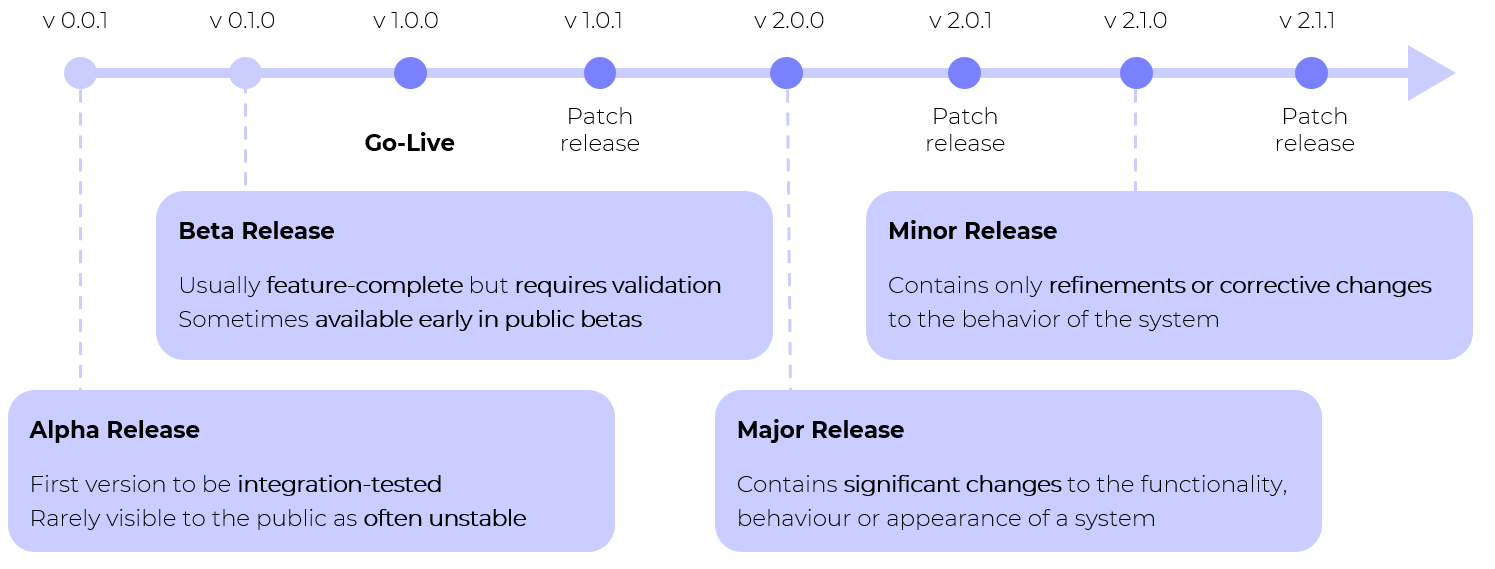

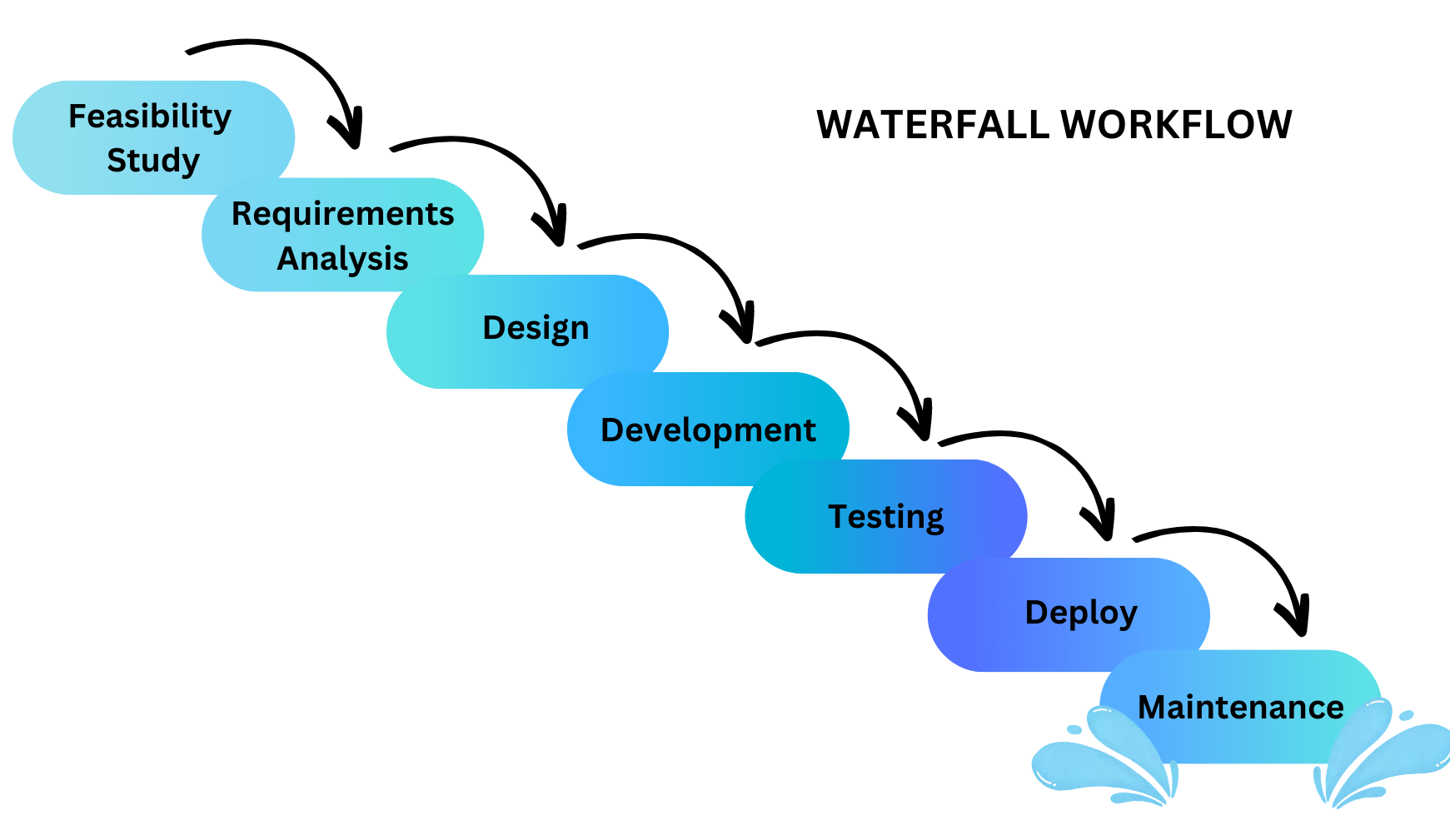

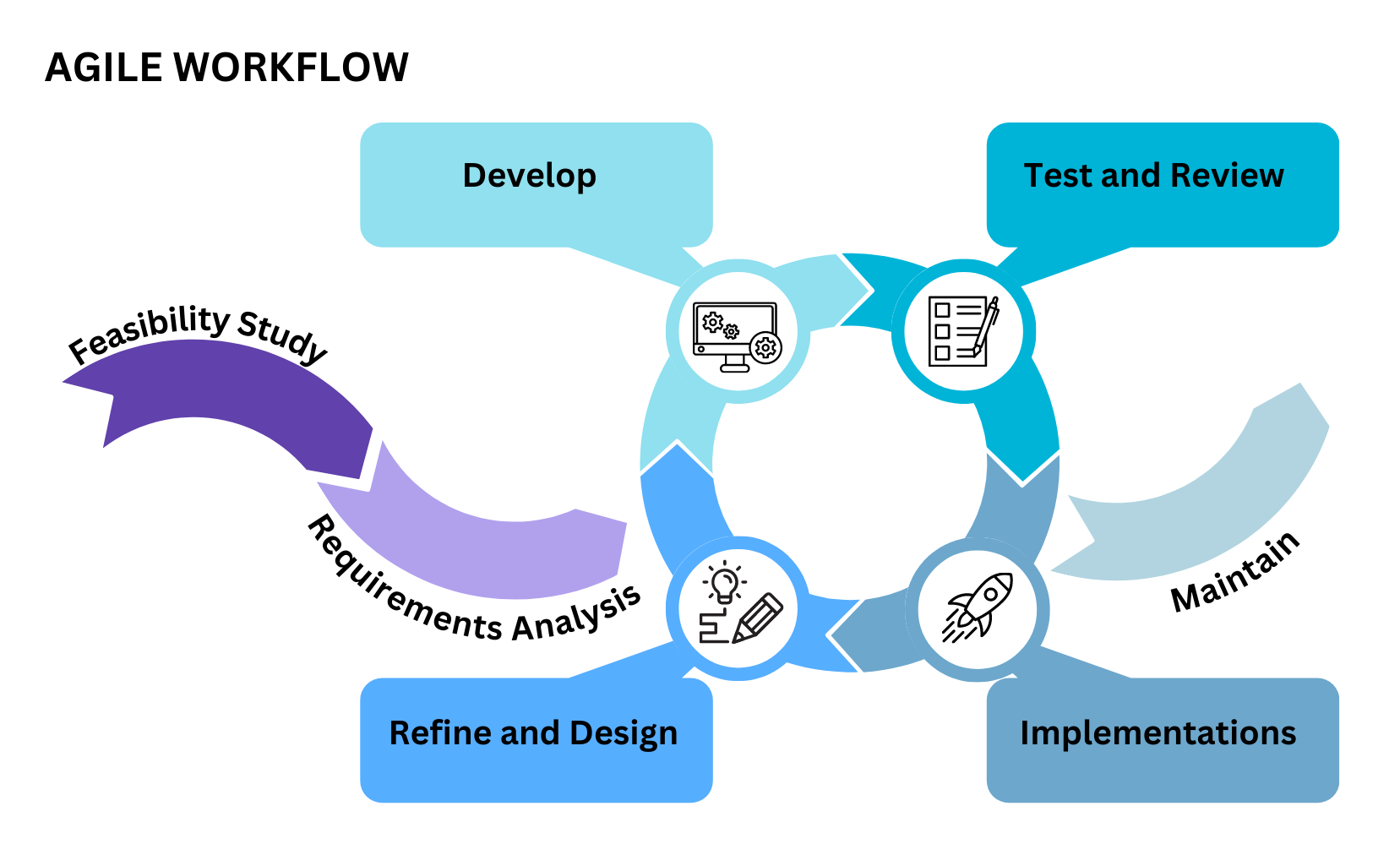

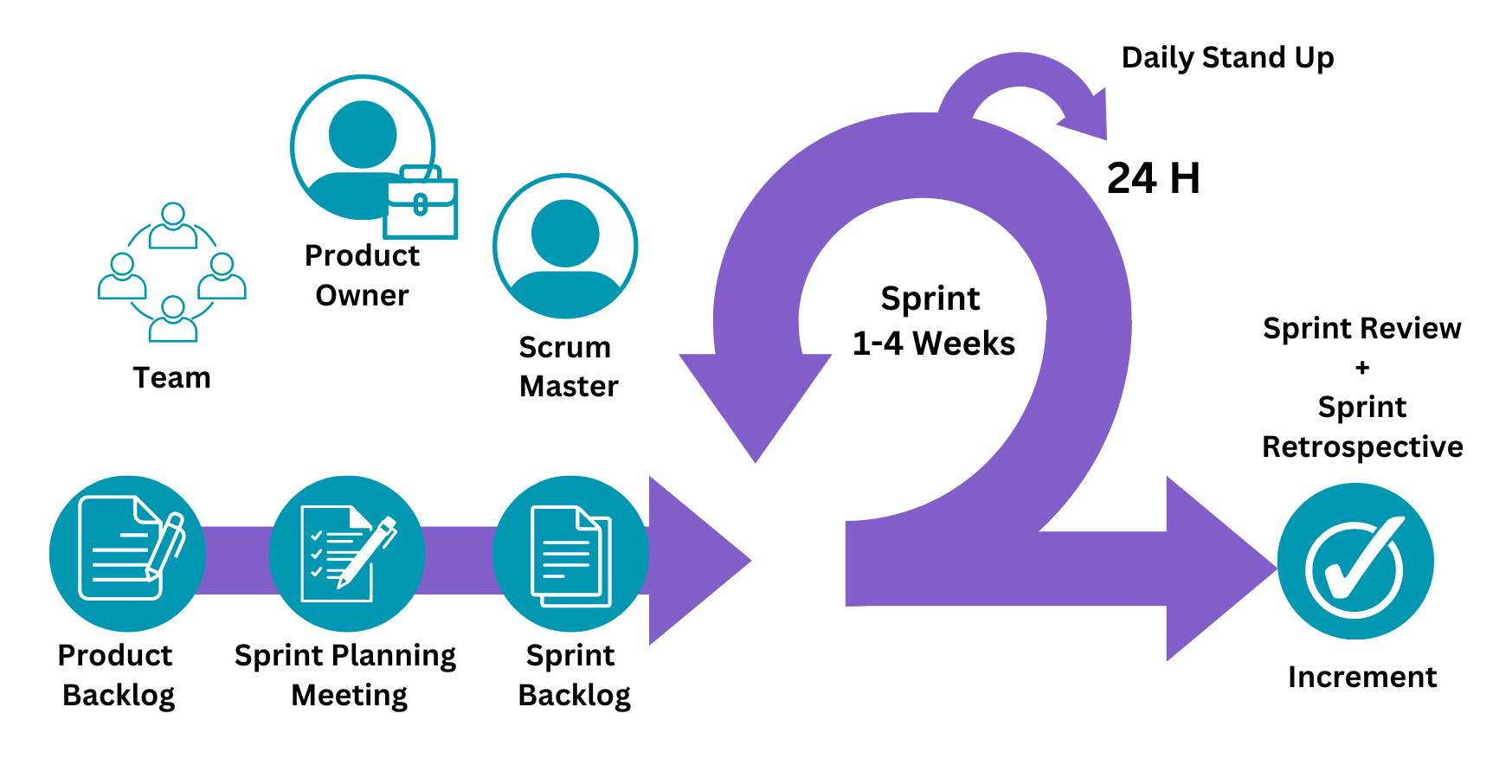

- Describes all stages of the software development lifecycle (K1)

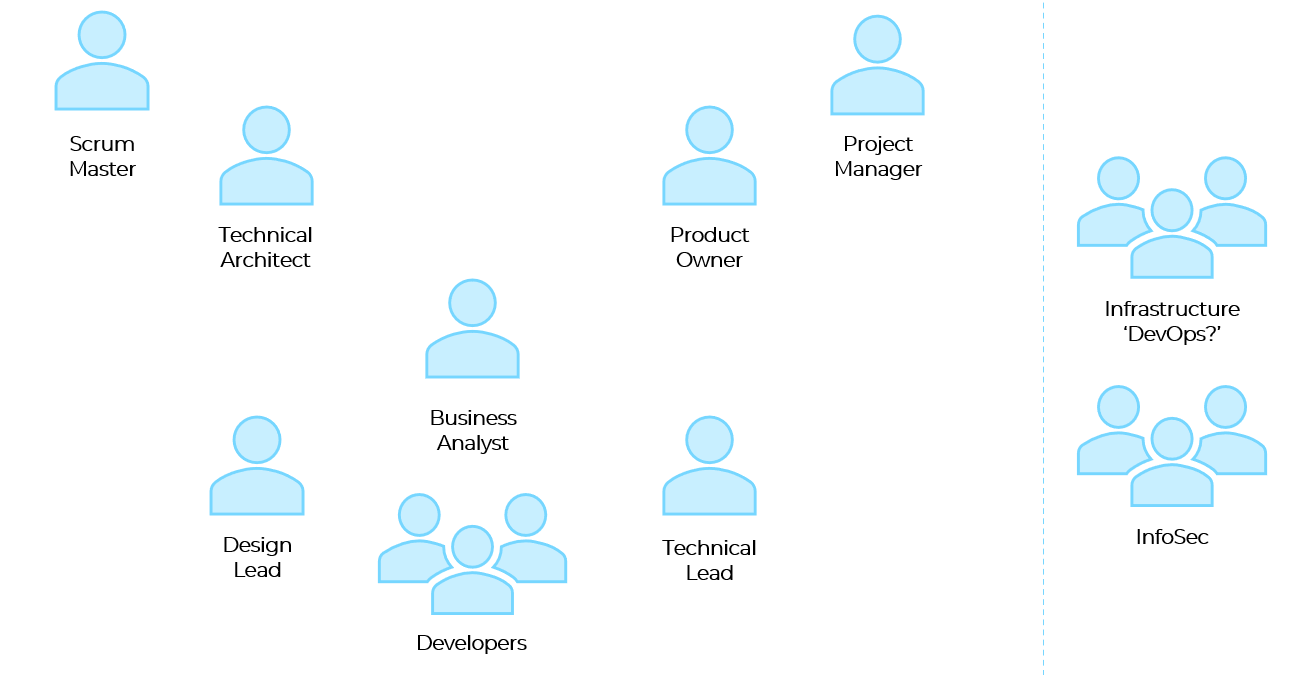

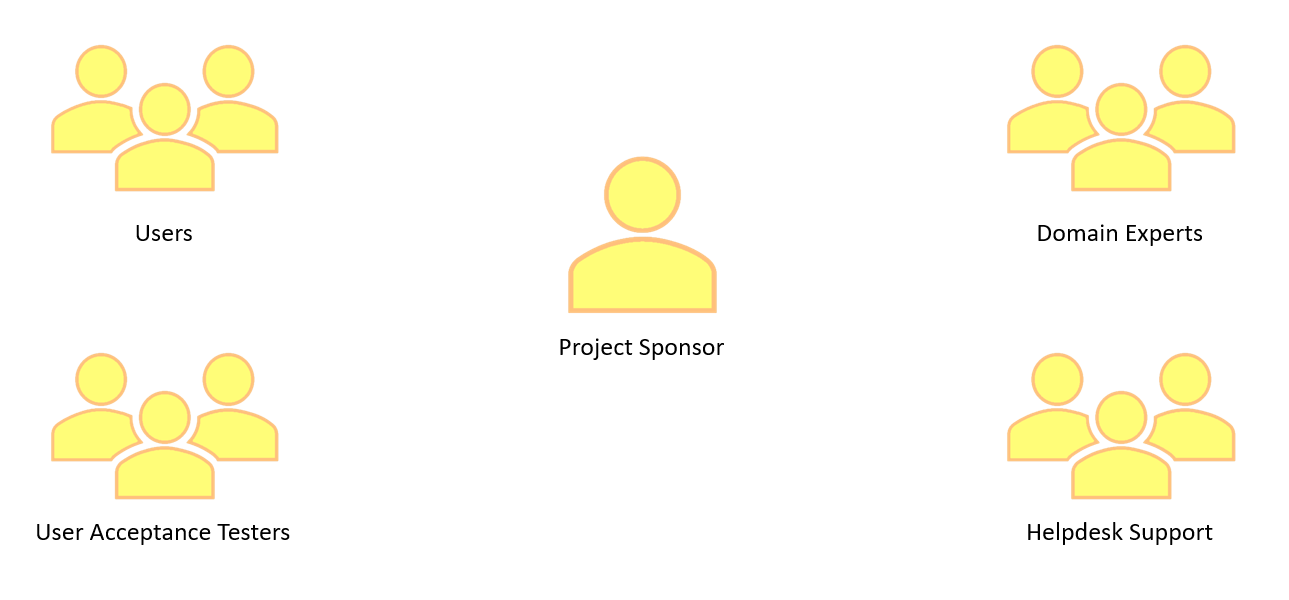

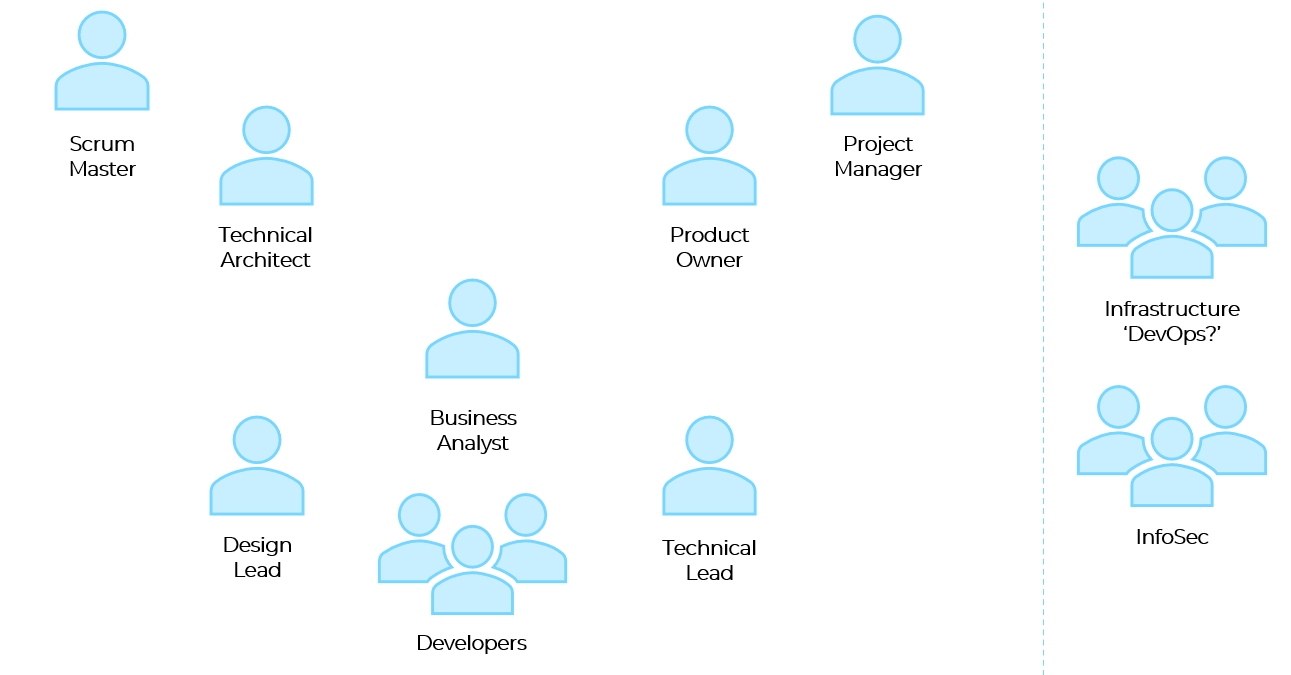

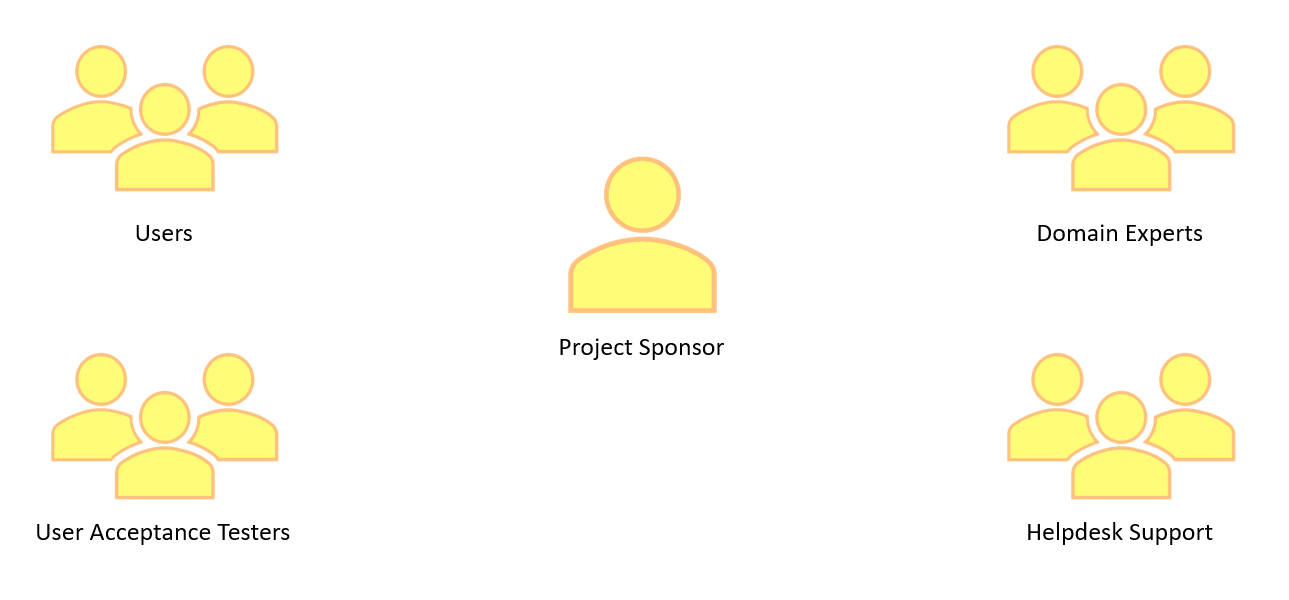

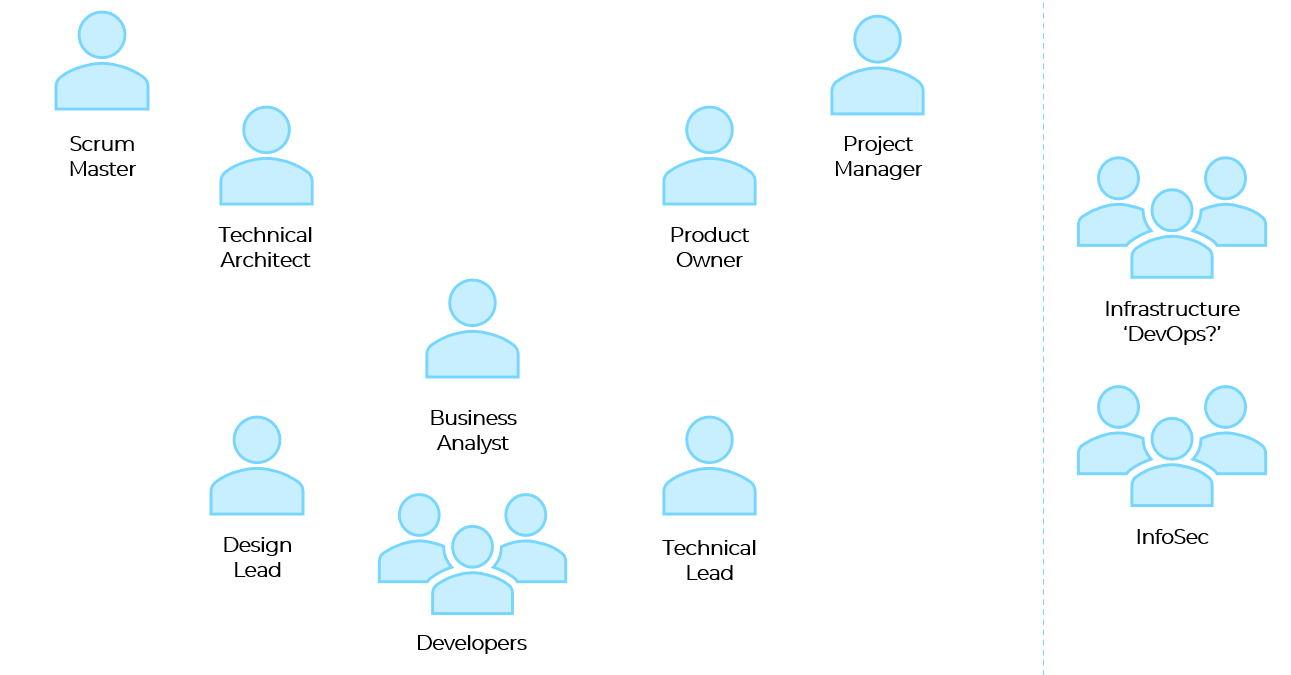

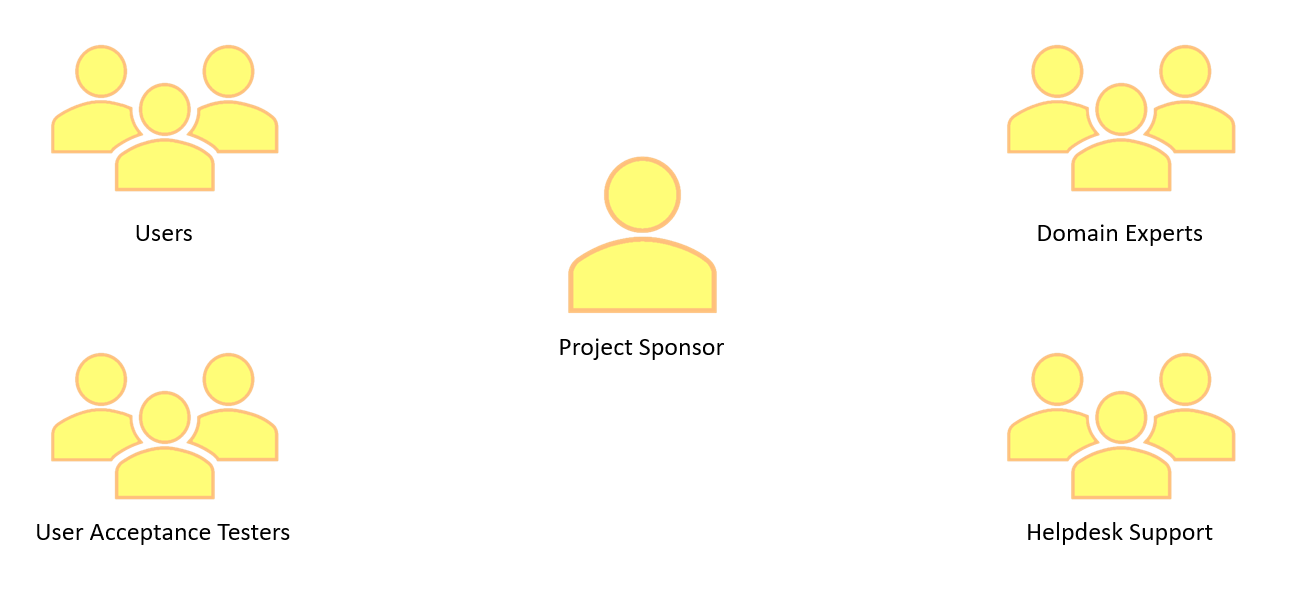

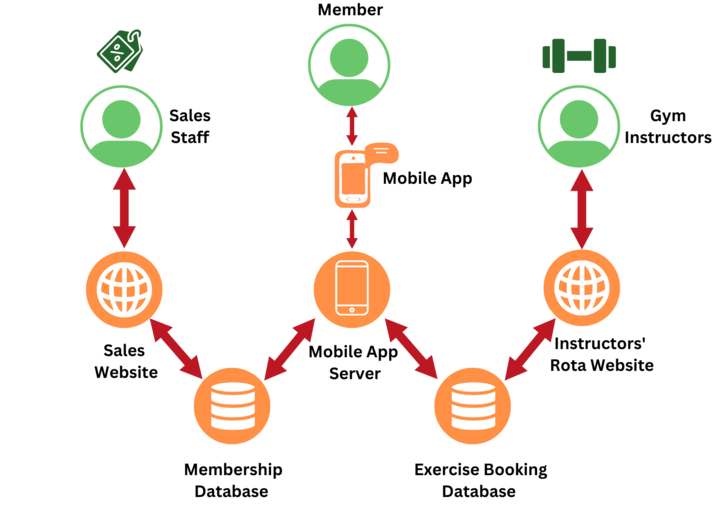

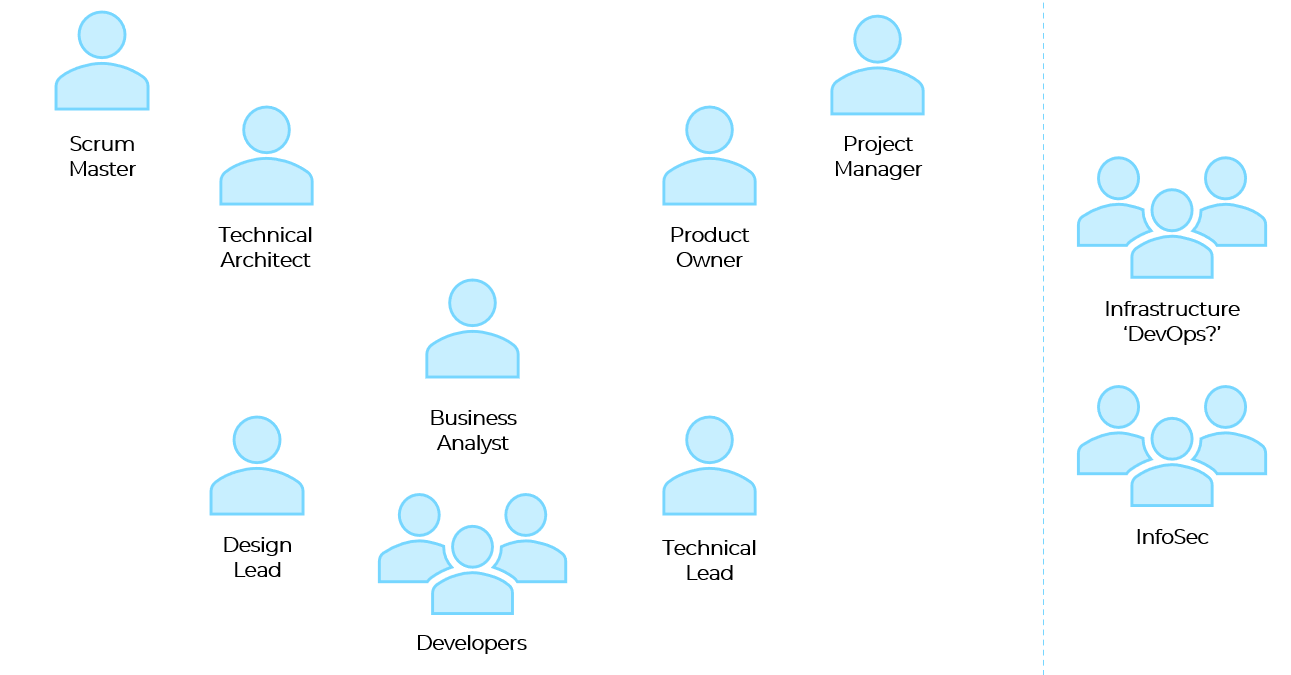

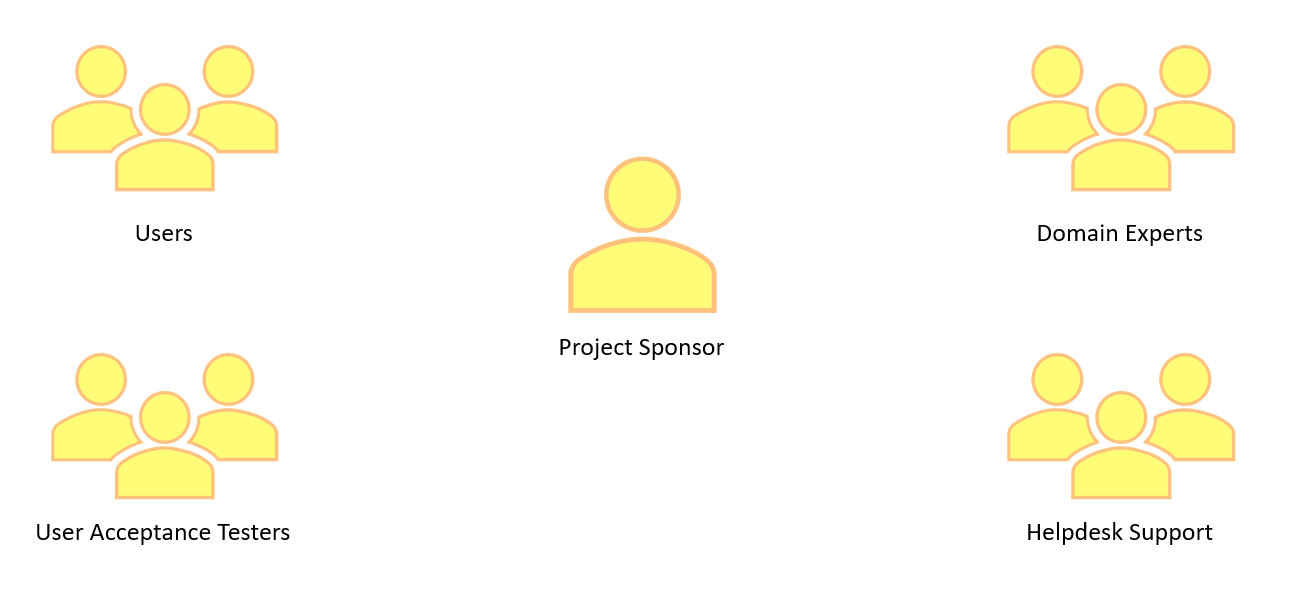

- Describes the roles and responsibilities of the project lifecycle within their organisation, and their role (K3)

- Describes methods of communicating with all stakeholders that is determined by the audience and/or their level of technical knowledge. (K4, S15)

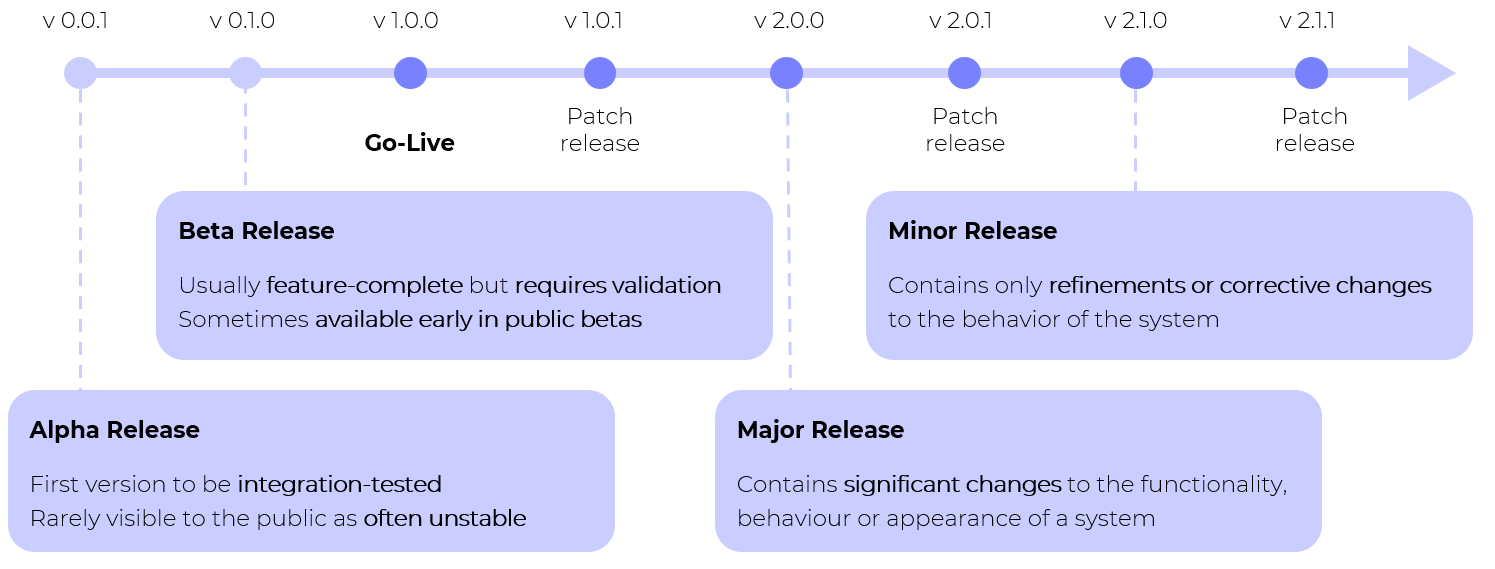

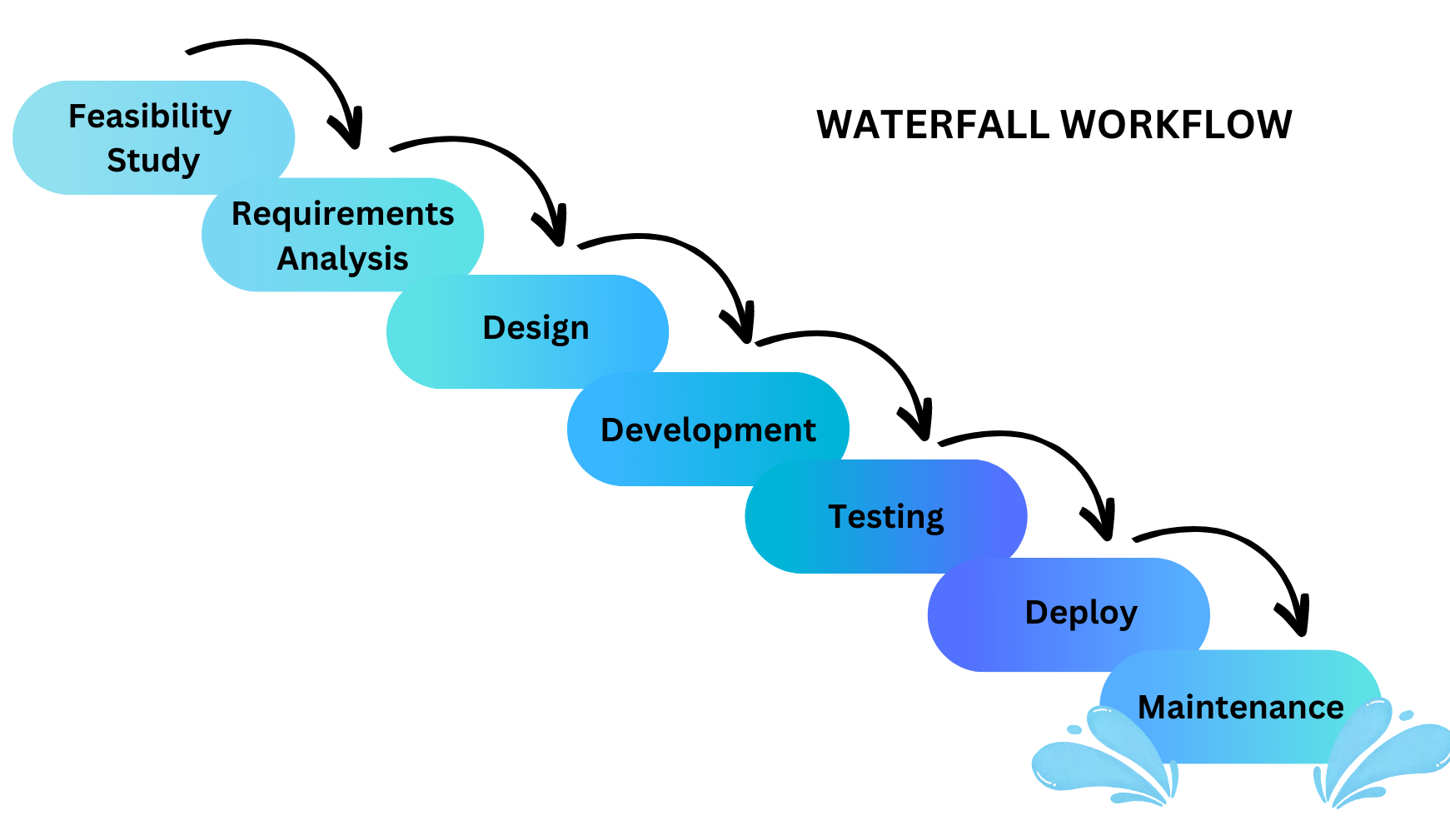

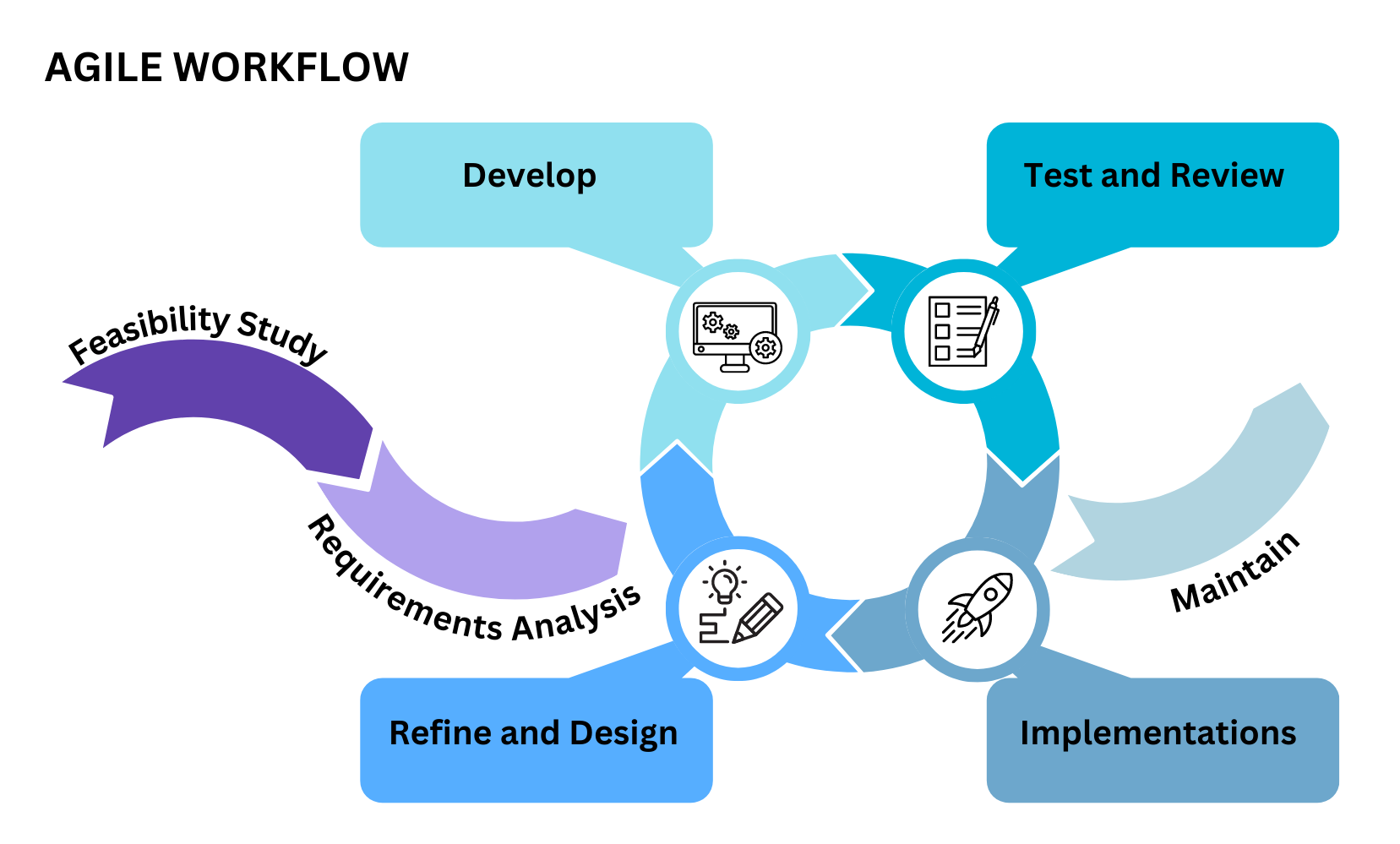

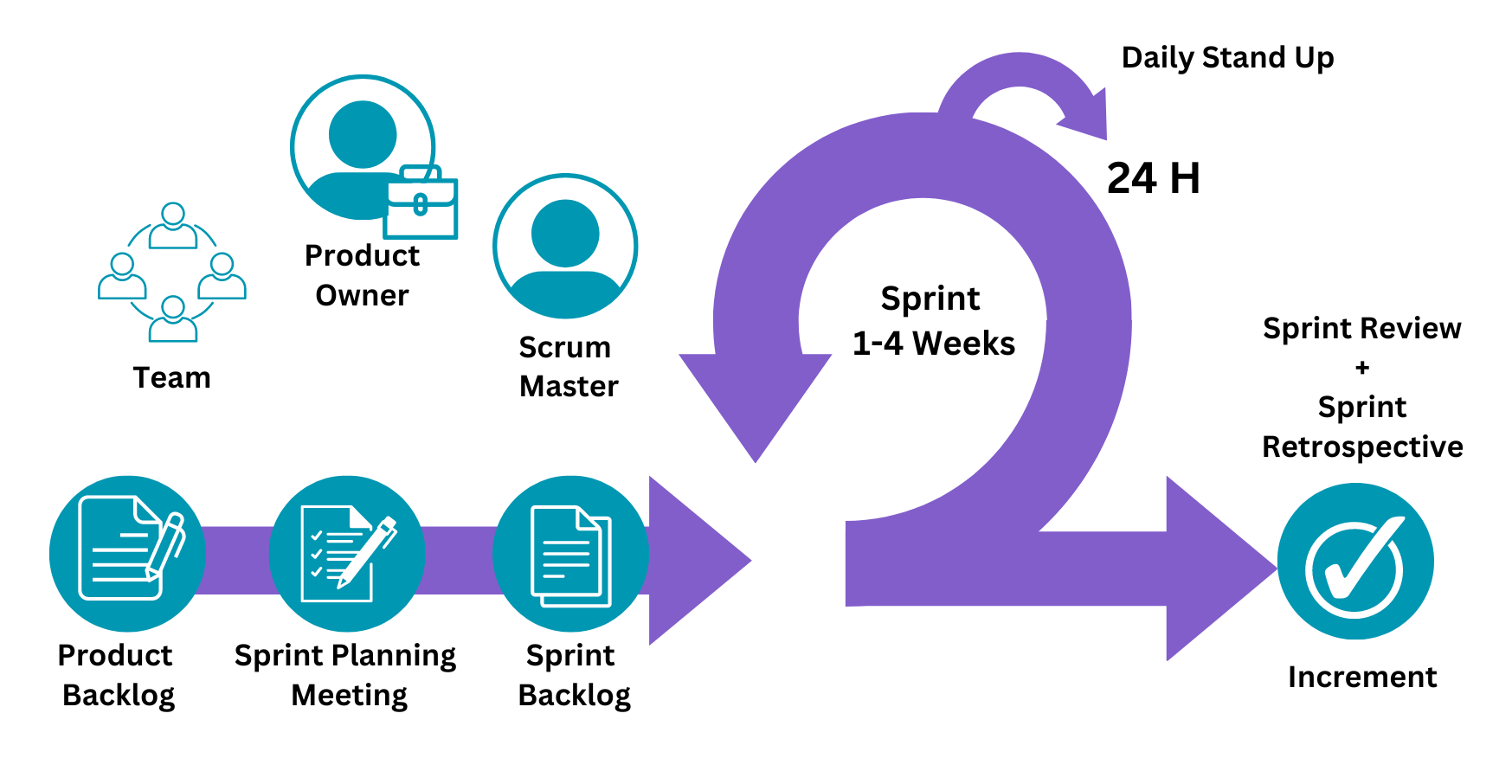

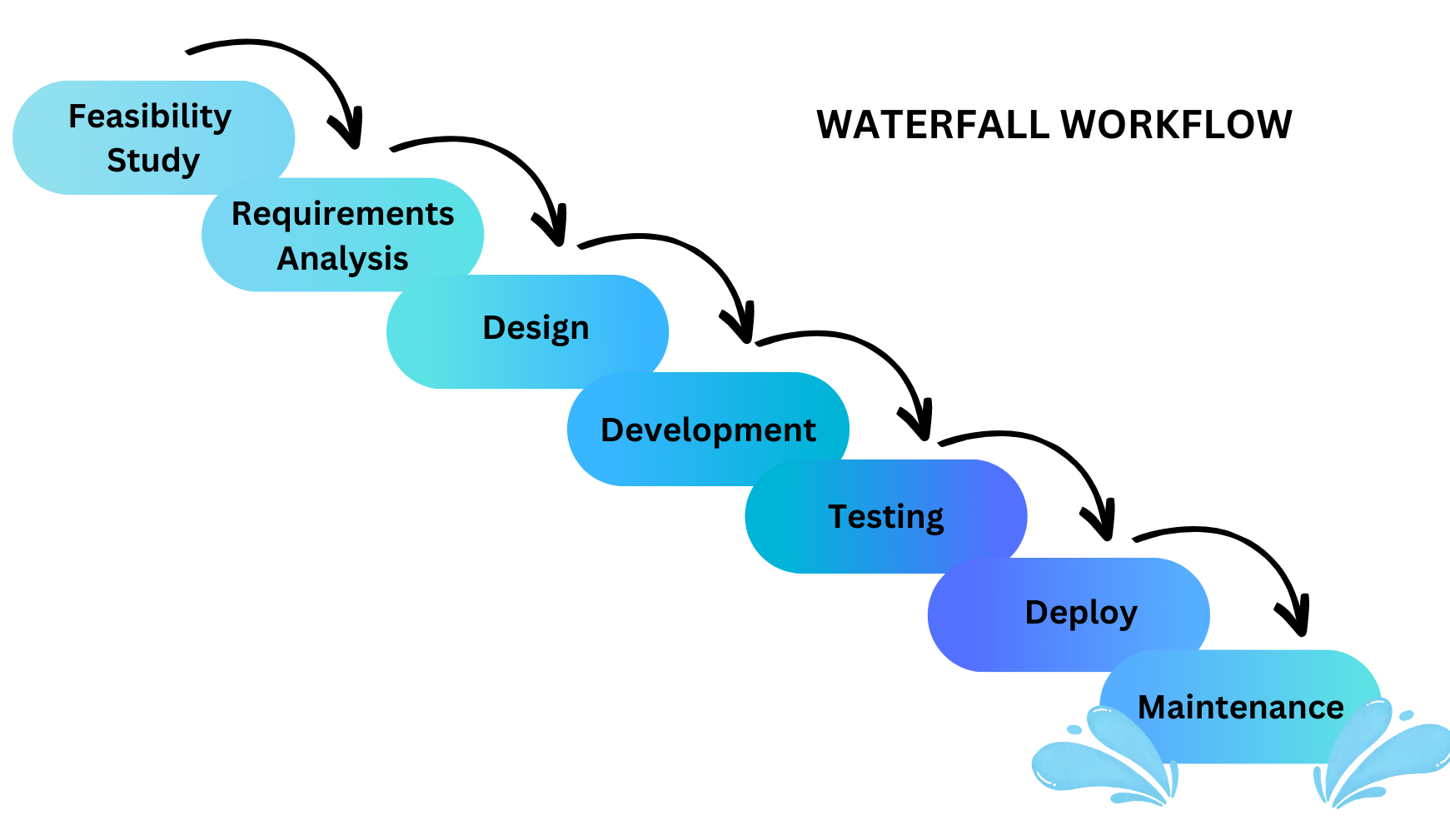

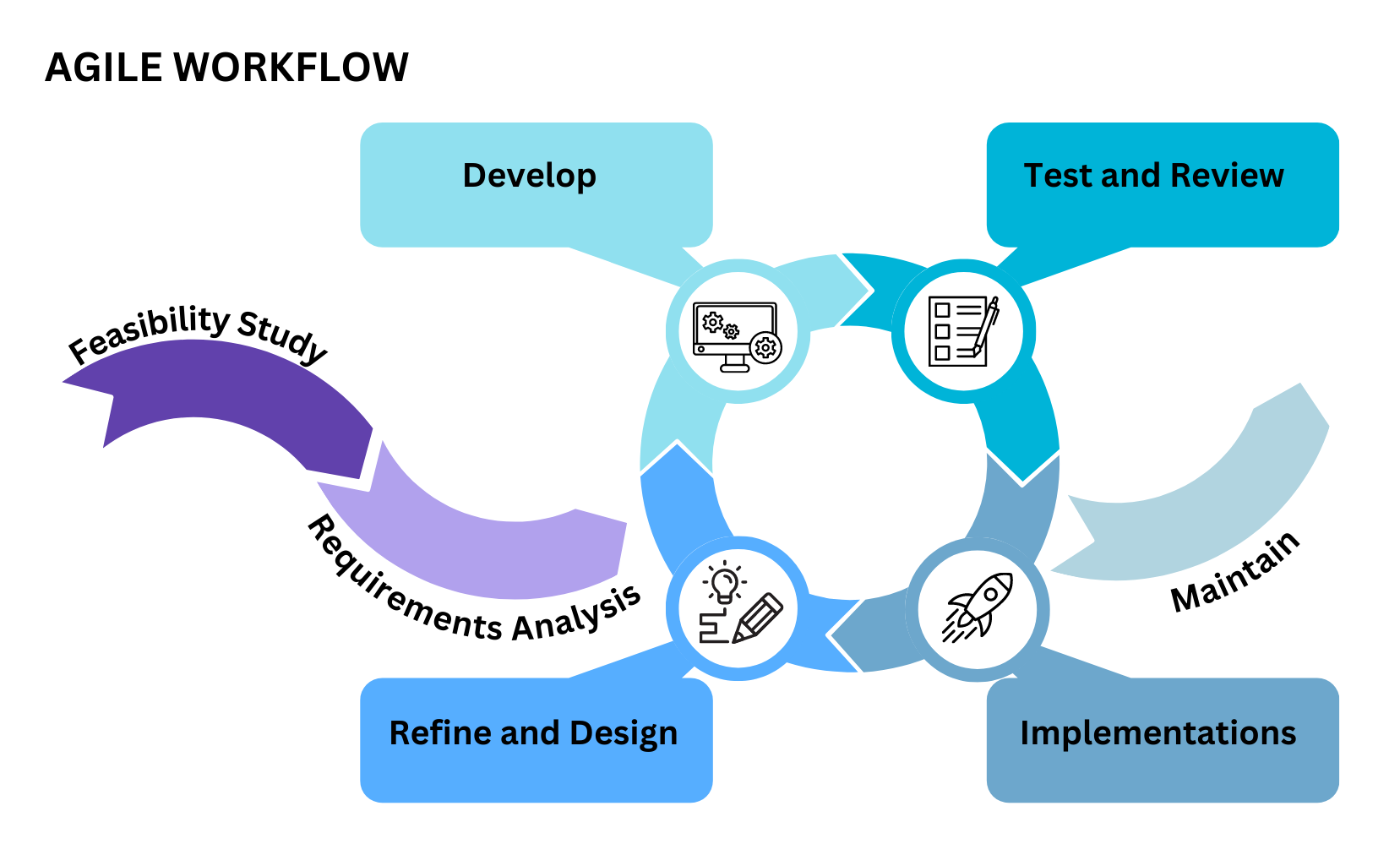

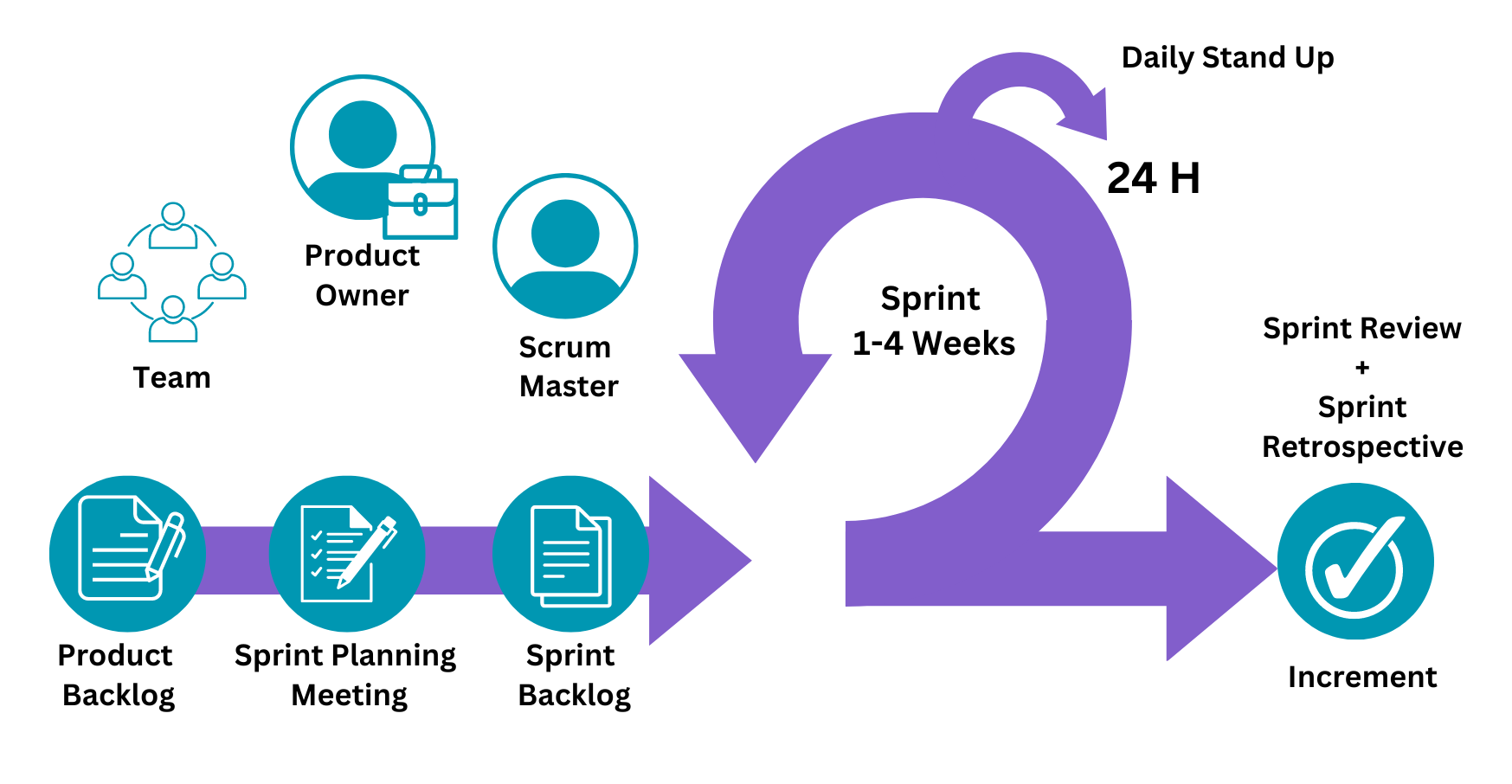

- Describes the similarities and differences between different software development methodologies, such as agile and waterfall (K5)

- Suggests and applies different software design approaches and patterns, to identify reusable solutions to commonly occurring problems (include Bespoke or off-the-shelf) (K7)

- Explains the relevance of organisational policies and procedures relating to the tasks being undertaken, and when to follow them including how they have followed company, team or client approaches to continuous integration, version, and source control (K8 S14)

- Applies the principles and uses of relational and non-relational databases to software development tasks (K10)

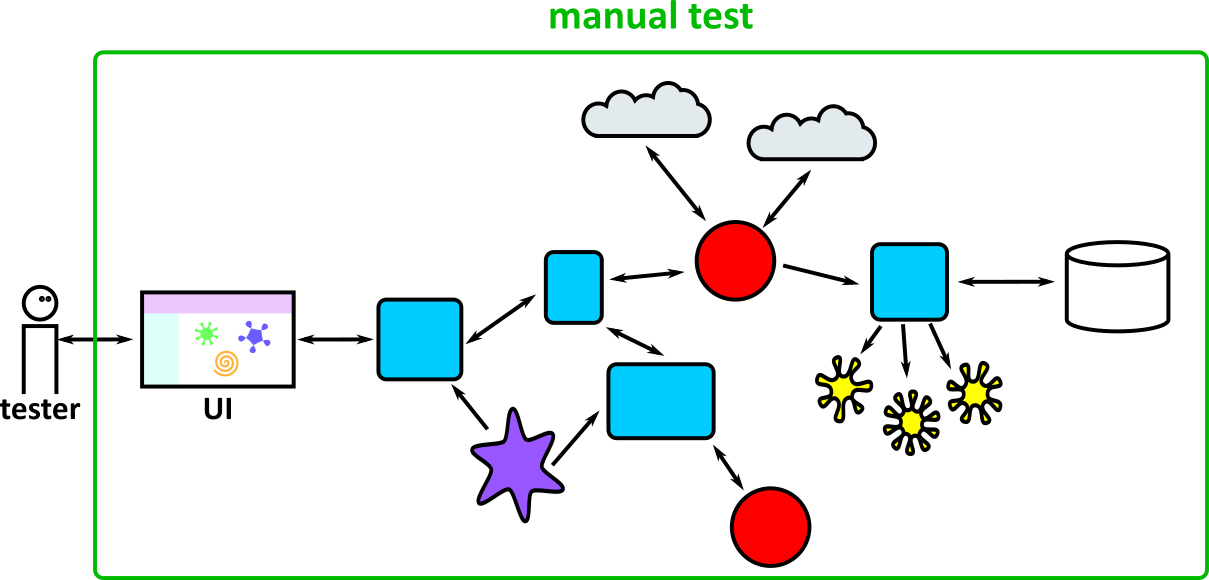

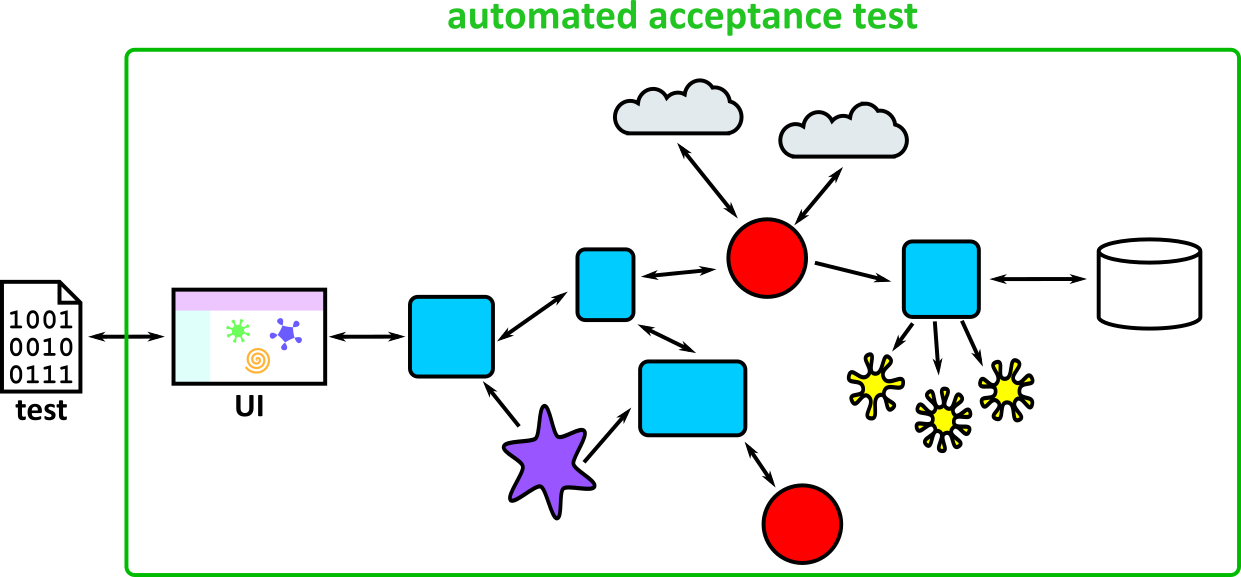

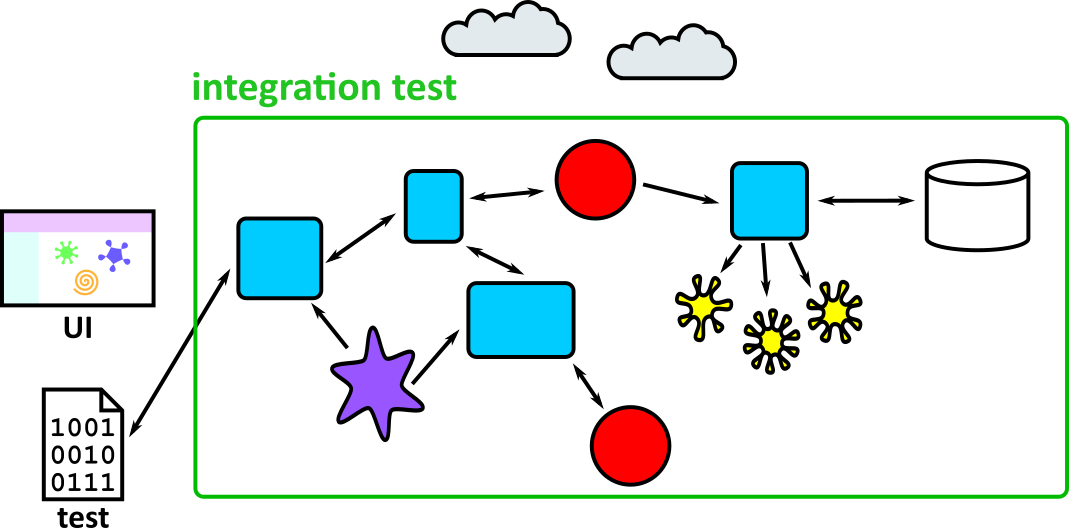

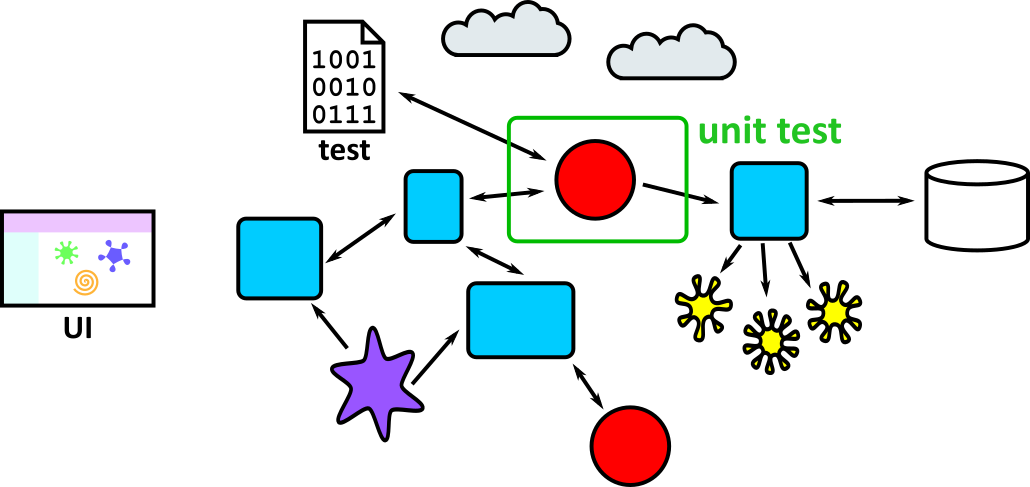

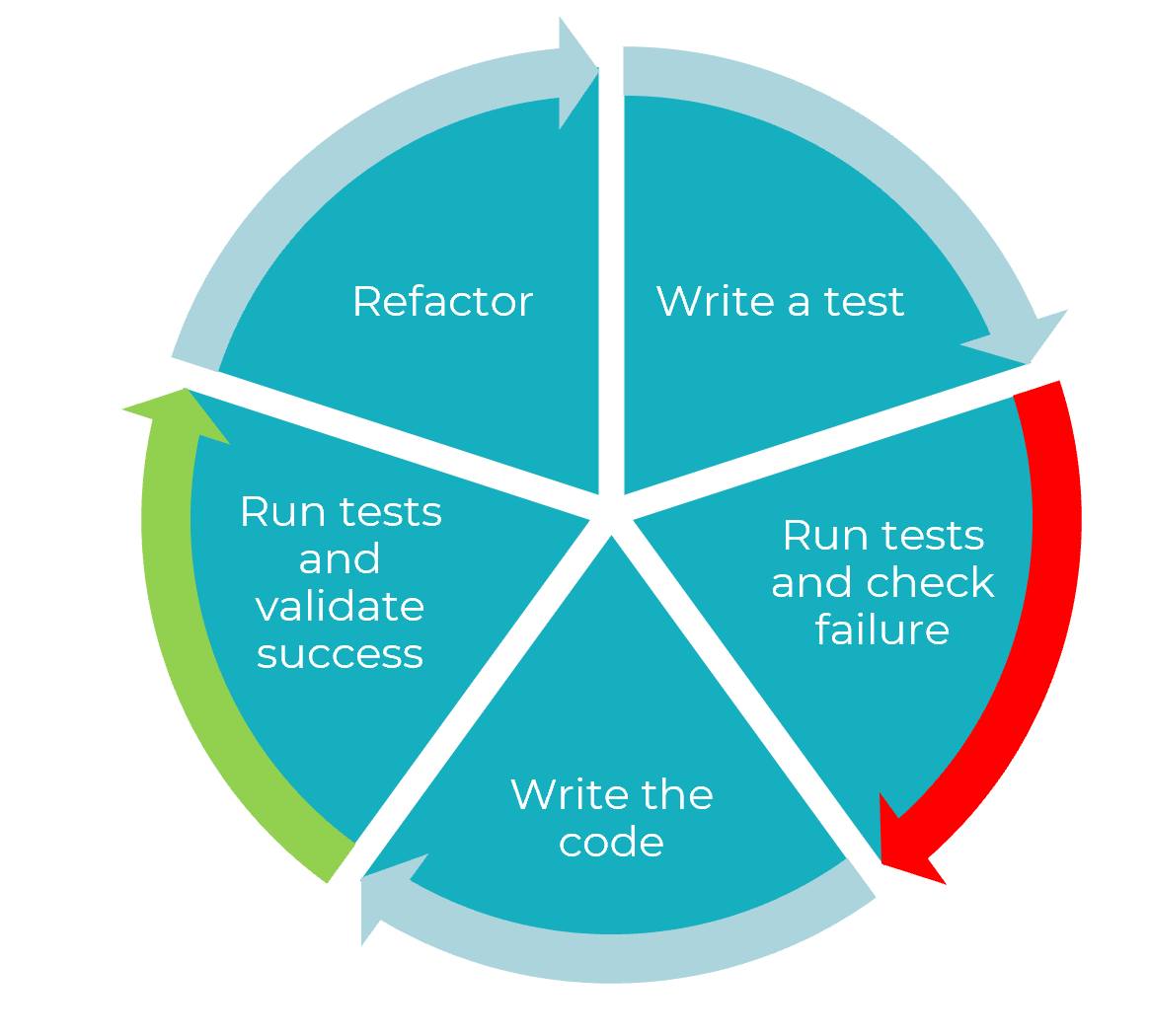

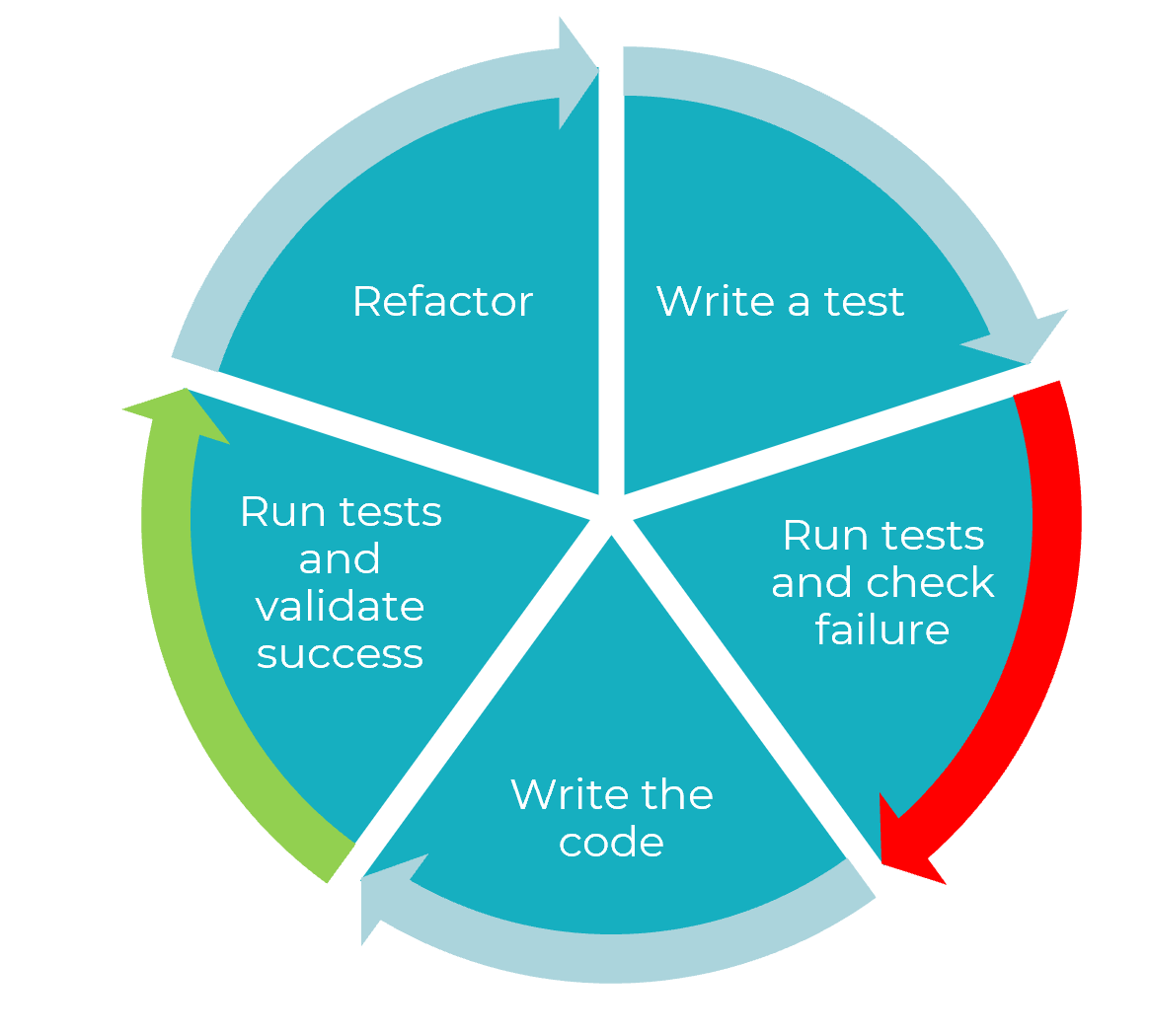

- Describes basic software testing frameworks and methodologies (K12)

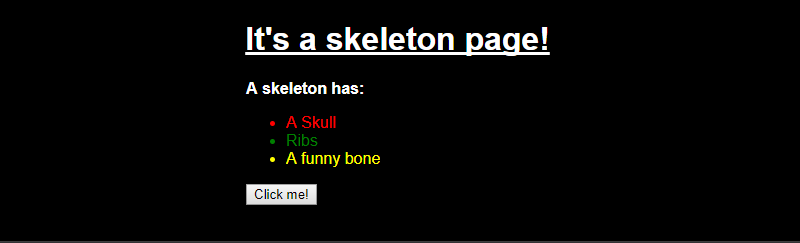

- Explains, their own approach to development of user interfaces (S2)

- Explains, how they have linked code to data sets (S3)

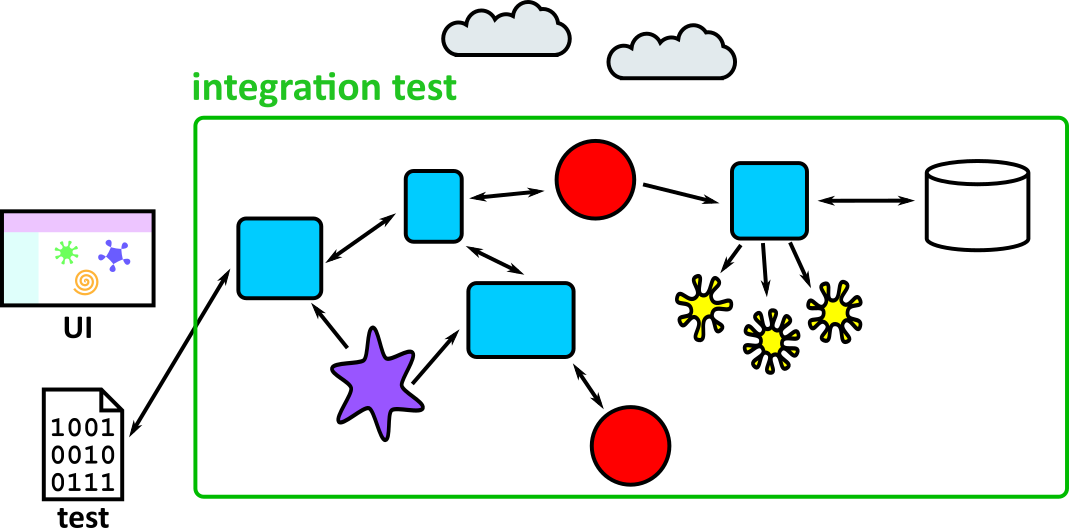

- Illustrates how to conduct test types, including Integration, System, User Acceptance, Non-Functional, Performance and Security testing including how they have followed testing frameworks and methodologies (S5, S13)

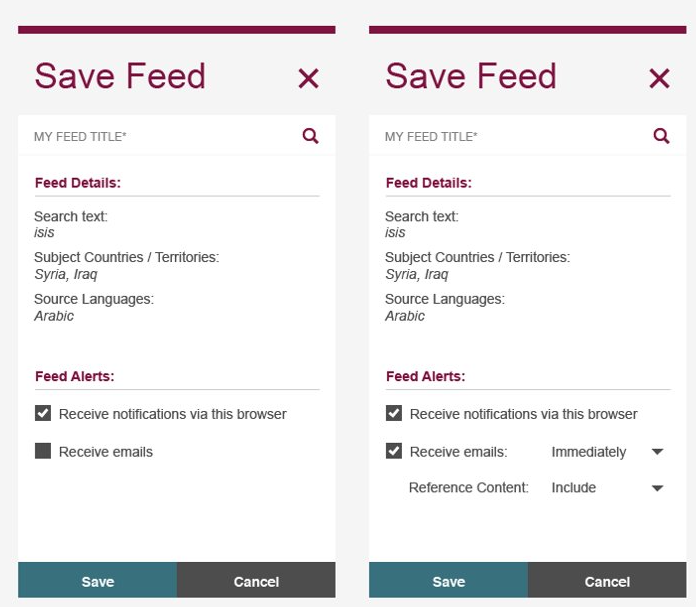

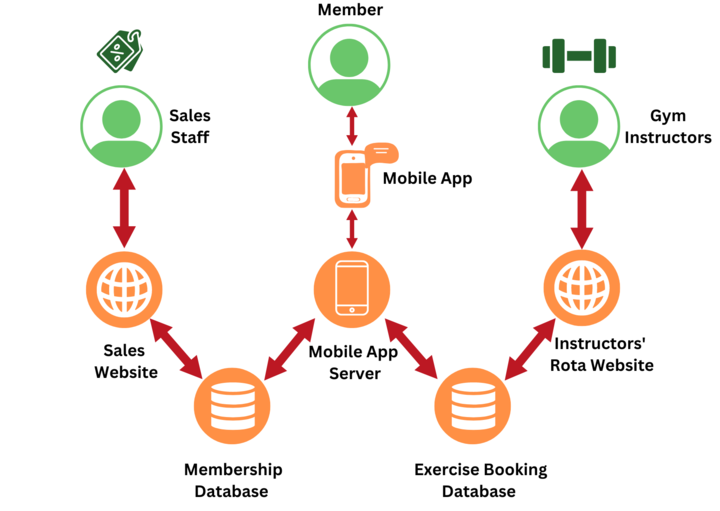

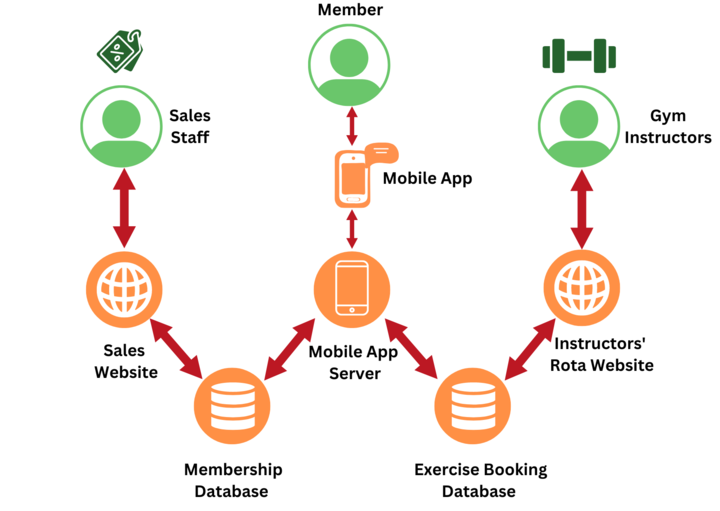

- Creates simple software designs to communicate understanding of the programme to stakeholders and users of the programme (S8)

- Creates analysis artefacts, such as use cases and/or user stories to enable effective delivery of software activities (S9)

- Explains, how they have interpreted and implemented a given design whilst remaining compliant with security and maintainability requirements (S17)

- Describes, how they have operated independently to complete tasks to given deadlines which reflect the level of responsibility assigned to them by the organisation. (B1)

- Illustrates how they have worked collaboratively with people in different roles, internally and externally, which show a positive attitude to inclusion & diversity. (B4)

- Explains how they have established an approach in the workplace which reflects integrity with respect to ethical, legal, and regulatory matters and ensures the protection of personal data, safety and security. (B5)

- Illustrates their approach to meeting unexpected minor changes at work and outlines their approach to delivering within their remit using their initiative. (B6)

- Explains how they have communicated effectively in a variety of situations to both a technical and non-technical audience. (B7)

- Illustrates how they have responded to the business context with curiosity to explore new opportunities and techniques with tenacity to improve solution performance, establishing an approach to methods and solutions which reflects a determination to succeed (B8)

- Explains how they reflect on their continued professional development and act independently to seek out new opportunities (B9)

In order to get a distinction in the professional discussion you must meet the following criteria:

- Compares and contrasts the different types of communication used for technical and non-technical audiences and the benefits of these types of communication methods (K4, S15, B7)

- Evaluates and recommends approaches to using reusable solutions to common problems. (K7)

- Evaluates the use of various software testing frameworks and methodologies and justifies their choice. (K12)

KSB addition

The above criteria should guide decisions around which elements of the learner’s work duties to include in the portfolio. Beyond that, there is one specific KSB that would benefit from extra practical evidence from the learner’s employment:

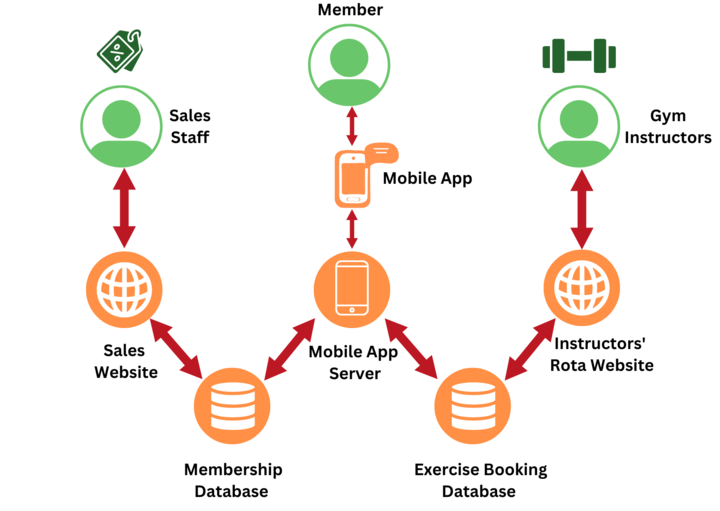

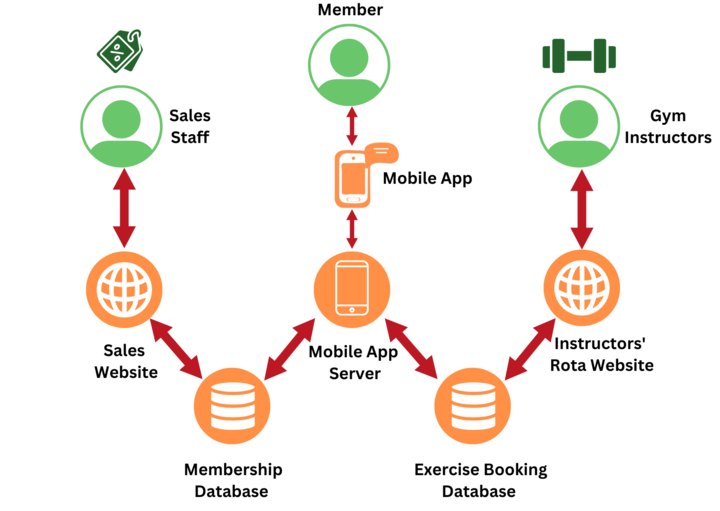

- S10: build, manage and deploy code into the relevant environment

This KSB is addressed through bootcamp exercise 6 (DuckDuckGoose) and the module Infrastructure and Deployment. In those topics the learners setup a CI pipeline and deploy code to a Docker container. It is recommended that each learner also gain some practical experience with their employer’s deployment infrastructure and environments – for example, a suggested approach is for the learner to trigger a deployment of an application to a Test environment, and then be able to demonstrate an understanding of what that action had achieved in their portfolio and during the professional discussion.

Technical Prerequisites

This document covers the technical prerequisites that learners need before beginning the C# Software Developer Apprenticeship bootcamp.

Each learner should have an development machine for their exclusive use running Windows 10 or later, with WSL2 installed, and an internet connection. WSL2 is required for the Infrastructure and Deployment module.

The following applications should be installed:

- .NET SDK (version 6.0.115)

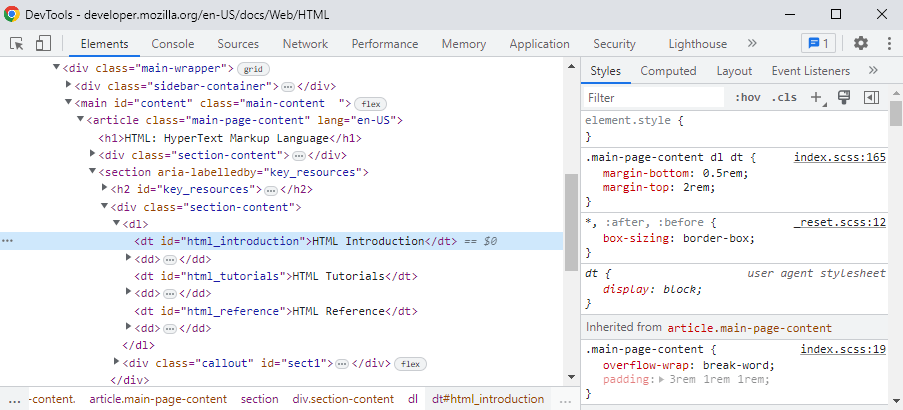

- VSCode – this is the Integrated Development Environment for which the exercise content was written, and the following VSCode extensions should be installed. From this point when VSCode is mentioned, it is assumed that these extensions are installed.

- A Git client application, such as Git Bash or GitKraken

- nvm – you should use this to install Node (version 18) with

nvm use 18

The learner should also have an individual GitHub account into which they can fork the sample code repositories or create their own.

Programs used in specific exercises

The learner notes for each bootcamp exercise (and each module in the ongoing training) includes a Programs used list, which lists the main programs that are needed for that exercise. This is not a list of prerequisites; the installation of any programs that are not in the list above will be addressed as part of the exercise.

In some cases there are explicit steps for the learner to follow (for example, to install PostgreSQL), while other times dependencies are tagged in the exercise code repository (in a .csproj file) and so are installed during the build phase.

Note that in the case of project dependencies being defined in the code repository, only core dependencies whose documentation is useful for the learner will be listed in the Programs used.

Technical Prerequisites

This document covers the technical prerequisites that learners need before beginning the C# Software Developer Apprenticeship bootcamp.

Each learner should have an development machine for their exclusive use running Windows 10 or later, with WSL2 installed, and an internet connection. WSL2 is required for the Infrastructure and Deployment module.

The following applications should be installed:

- .NET SDK (version 6.0.115)

- VSCode – this is the Integrated Development Environment for which the exercise content was written, and the following VSCode extensions should be installed. From this point when VSCode is mentioned, it is assumed that these extensions are installed.

- A Git client application, such as Git Bash or GitKraken

- nvm – you should use this to install Node (version 18) with

nvm use 18

The learner should also have an individual GitHub account into which they can fork the sample code repositories or create their own.

Programs used in specific exercises

The learner notes for each bootcamp exercise (and each module in the ongoing training) includes a Programs used list, which lists the main programs that are needed for that exercise. This is not a list of prerequisites; the installation of any programs that are not in the list above will be addressed as part of the exercise.

In some cases there are explicit steps for the learner to follow (for example, to install PostgreSQL), while other times dependencies are tagged in the exercise code repository (in a .csproj file) and so are installed during the build phase.

Note that in the case of project dependencies being defined in the code repository, only core dependencies whose documentation is useful for the learner will be listed in the Programs used.

Technical Prerequisites

This document covers the technical prerequisites that learners need before beginning the Java Software Developer Apprenticeship bootcamp.

Each learner should have an development machine for their exclusive use running Windows 10 or later, with WSL2 installed, and an internet connection. WSL2 is required for the Infrastructure and Deployment module. The following applications should be installed:

- Java (version 17.0.6)

- Gradle (version 8.0.2)

- VSCode – this is the Integrated Development Environment for which the exercise content was written, and the following VSCode extension should be installed. From this point when VSCode is mentioned, it is assumed that this extension is installed.

- A Git client application, such as Git Bash or GitKraken

- nvm – you should use this to install Node (version 18) with

nvm use 18

The learner should also have an individual GitHub account into which they can fork the sample code repositories or create their own.

Programs used in specific exercises

The learner notes for each bootcamp exercise (and each module in the ongoing training) includes a Programs used list, which lists the main programs that are needed for that exercise. This is not a list of prerequisites; the installation of any programs that are not in the list above will be addressed as part of the exercise.

In some cases there are explicit steps for the learner to follow (for example, to install PostgreSQL), while other times dependencies are tagged in the exercise code repository (in a Gradle file) and so are installed during the build phase.

Note that in the case of project dependencies being defined in the code repository, only core dependencies whose documentation is useful for the learner will be listed in the Programs used.

Technical Prerequisites

This document covers the technical prerequisites that learners need before beginning the JavaScript Software Developer Apprenticeship bootcamp.

Each learner should have an development machine for their exclusive use running Windows 10 or later, with WSL2 installed, and an internet connection. WSL2 is required for the Infrastructure and Deployment module.

The following applications should be installed:

- nvm – you should use this to install Node (version 18) with

nvm use 18 - VSCode – this is the Integrated Development Environment for which the exercise content was written, and the following VSCode extension should be installed. From this point when VSCode is mentioned, it is assumed that this extension is installed.

- A Git client application, such as Git Bash or GitKraken

The learner should also have an individual GitHub account into which they can fork the sample code repositories or create their own.

Programs used in specific exercises

The learner notes for each bootcamp exercise (and each module in the ongoing training) includes a Programs used list, which lists the main programs that are needed for that exercise. This is not a list of prerequisites; the installation of any programs that are not in the list above will be addressed as part of the exercise.

In some cases there are explicit steps for the learner to follow (for example, to install PostgreSQL), while other times dependencies are tagged in the exercise code repository (in a package.json file) and so are installed during the build phase.

Note that in the case of project dependencies being defined in the code repository, only core dependencies whose documentation is useful for the learner will be listed in the Programs used.

Technical Prerequisites

This document covers the technical prerequisites that learners need before beginning the Python Software Developer Apprenticeship bootcamp.

Each learner should have an development machine for their exclusive use running Windows 10 or later, with WSL2 installed, and an internet connection. WSL2 is required for the Infrastructure and Deployment module.

The following applications should be installed:

- Python (version 3.11.0)

- Poetry (version 1.4)

- Make sure to add poetry to the system environment variables as mentioned here

- VSCode – this is the Integrated Development Environment for which the exercise content was written, and the following VSCode extension should be installed. From this point when VSCode is mentioned, it is assumed that this extension is installed.

- A Git client application, such as Git Bash or GitKraken

- nvm – you should use this to install Node (version 18) with

nvm use 18

The learner should also have an individual GitHub account into which they can fork the sample code repositories or create their own.

Programs used in specific exercises

The learner notes for each bootcamp exercise (and each module in the ongoing training) includes a Programs used list, which lists the main programs that are needed for that exercise. This is not a list of prerequisites; the installation of any programs that are not in the list above will be addressed as part of the exercise.

In some cases there are explicit steps for the learner to follow (for example, to install PostgreSQL), while other times dependencies are tagged in the exercise code repository (in a pyproject.toml file) and so are installed during the build phase.

Note that in the case of project dependencies being defined in the code repository, only core dependencies whose documentation is useful for the learner will be listed in the Programs used.

Pre-bootcamp Learner Introduction

This section is designed to teach you the basic of C# programming. You should be able to follow it even if you’ve done little or no programming before. The goal is to get to the point where you can attend the Bootcamp training course, and start to understand and write real code.

If you do have some prior programming experience, read on – there are some bits you can skip over, but don’t move too fast as there could be something you’re not so familiar with that’s worth revising!

The objective of this section is to be able to understand and use the following C# programming concepts:

- Variables and assignment

- Conditional logic - the if statement

- Looping logic - the for, foreach and while statements

- Arrays

- Creating and calling methods and classes

- Building a simple console application

These topics will be addressed by way of Microsoft-provided tutorials.

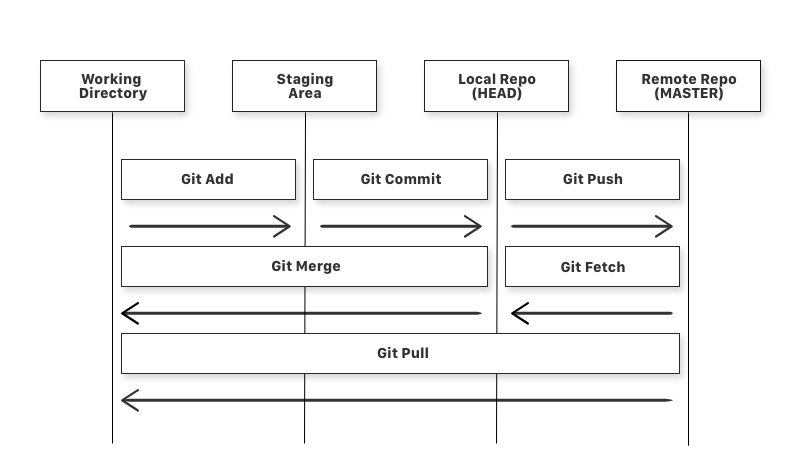

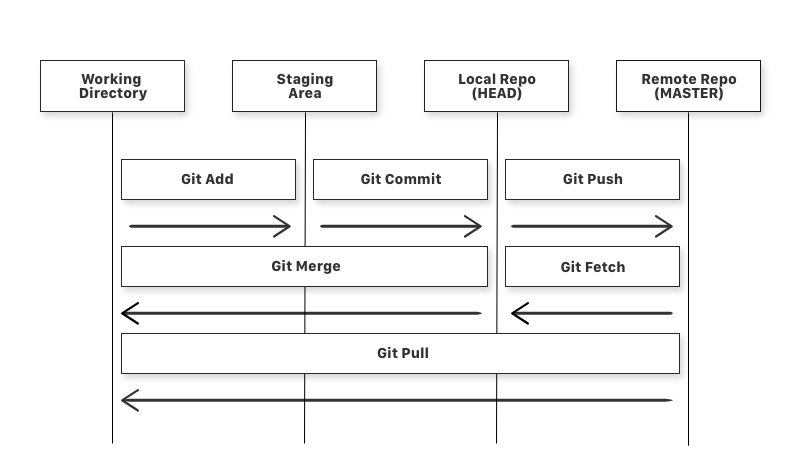

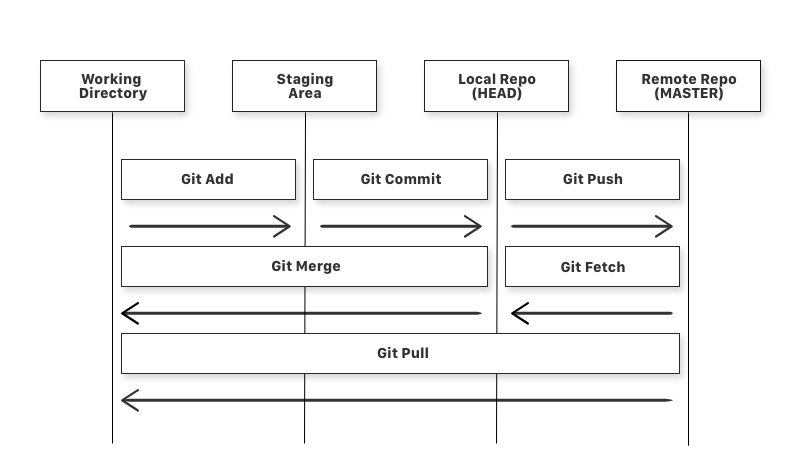

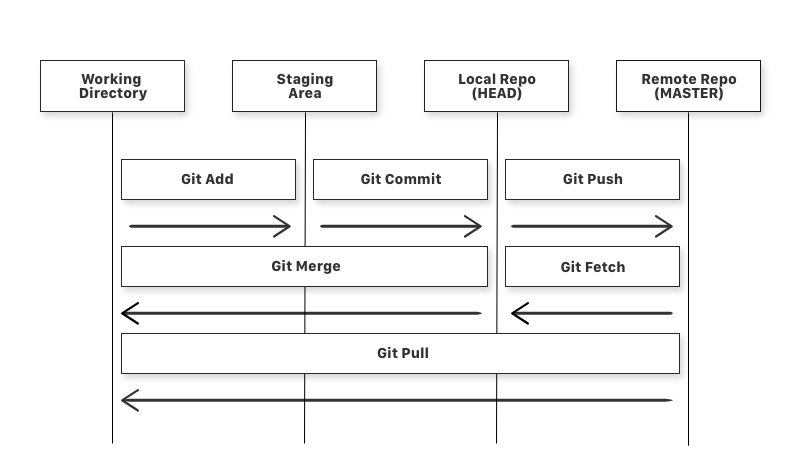

You’ll also learn the very basics of the Git version control system, and how to put the code you’ve written on GitHub.

Setup

Development tools

Before beginning this preparation you will need a development machine with the following already installed – this information has been provided to your employer as technical prerequisites.

- .NET SDK (version 6.0.115)

- Visual Studio Code (VSCode) – this is the Integrated Development Environment for which the exercise content was written, and the following VSCode extensions should be installed.

Note that the course assumes that you will be using Visual Studio Code as your IDE, which is a more lightweight set of tools than the full Visual Studio package.

Git setup

Before beginning Bootcamp, you will need to be able to upload your exercise solutions to GitHub so that your trainer can see them. To do this, you’ll need the following.

If you do not already have a GitHub account, go to GitHub and “Sign Up” for one (it’s free). It’s fine to use an existing account if you have one.

If you have never used Git before, follow GitHub’s short tutorial just to get used to the basics. You can skip over “pull requests” for now if you wish – you won’t need them until you start collaborating with other team members.

Then create a Personal Access Token (PAT) on GitHub by following this tutorial. Choose “classic” rather than “fine-grained”. Name your token something like “C sharp training”, give it an expiry time of at least 6 weeks and ensure it has the repo scope. Make sure to copy the token because you’ll need it to connect to GitHub in a moment.

To check that your Git installation is working properly, create a folder in which you’ll store the code you’ll write for this course (e.g., C:\Work\Training). Then in your Git client run a version of the following command:

git clone https://git@github.com:YourName/YourProject.git

You’ll need to replace YourName by your GitHub account name, and YourProject by the name of one of your GitHub repositories (you should have at least one if you’ve followed the tutorial on GitHub). The easiest way to get a url for the git clone command is to navigate to your project in GitHub and find the green “Code” button. Click this and you should get a “Clone with HTTPS” box containing the correct URL to copy and use.

Once you run the git clone command above, you should be prompted for your GitHub login details. Instead of your password, use the PAT you created earlier.

You should find that your project is now downloaded locally into a new folder inside C:/Work/Training. We’ll explore more about how to work with Git as we progress through the course.

If you have any trouble getting the above to work, get in touch with your trainer who should be able to give you some pointers to get moving.

Pre-bootcamp content structure

This pre-bootcamp section is built from the C# Fundamentals for Absolute Beginners video series on Microsoft Virtual Academy. Note that the number and order of the videos on the Microsoft site is different from what is linked here; we suggest that you rely on the links in this section rather than clicking on the ‘Next’ video.

As you go through the standard tutorials, you’ll be guided through a series of exercises to build a simple application with the tools that have been presented.

By the end of this section you should have all the basics you need to start writing your own C# code. But don’t panic if it doesn’t all make sense – help is on hand if you get stuck, and once you’ve learnt the basic syntax then during Bootcamp we’ll demystify any remaining puzzles and take your programming to the next level.

If you do already have prior experience you’re welcome to skim over any of the reading material that you’re already confident in. But make sure you’re proud of the code you’re writing, and if you’re not completely confident then re-read the material even if it’s largely familiar.

Further reading

There are two books that we recommend as C# learning resources. They’re not essential, but we’d recommend that all employers have at least the second of these on hand for reference as you continue your C# journey. Which book is best for your first foray into C# depends on your preferred learning style.

Head First C# (Stellman & Greene). This is a very practical hands-on introduction to C#, which explains everything from the basics to fairly complex concepts. It adopts a very informal, graphical style. The premise of the book is very much to throw you in at the deep end – the first chapter involves copying out the code for a computer game, most or all of which you won’t initially understand, but it uses this to explain the first concepts while still giving you an exciting and fully featured application to play with. As you progress through the book the same pattern continues, but you’ll understand increasingly large chunks of the code, until you reach the point where you understand everything!

Learning C# 3.0 (Liberty & MacDonald). This introductory book is more traditionally structured. It introduces topics one by one, using simple examples that focus only on the concepts you’ve already learnt. As a reference book for C# learners this is more suitable than Head First C#, because it’s easier to find a sub-section concentrating on some specific topic you want to look up. But some people find it a bit dry, and you might not want to read it cover-to-cover. Note that version 3.0 of C# is now a bit dated, but very little of the content is actually wrong – you’re just missing out on some of the newer features (and we’ll cover these separately where necessary). Sadly there’s not yet a more recent edition of this series – hopefully they’ll produce one soon!

Both books support the “Look Inside” feature on Amazon.co.uk, so if your employer doesn’t already have a copy lying about you can use that to get a feel for the content and then try to persuade them to buy you a copy of the best one! As mentioned above, we recommend that employers with C# learners have a copy of Learning C# 3.0, although there are other books they might point you to as alternatives.

Both books include various quizzes and exercises to complete. The answers are all in the books so you can test yourself. They’re worth doing – the only way to learn to program is to practice!

Pre-bootcamp Learner Introduction

This section is designed to teach you the basic of C# programming. You should be able to follow it even if you’ve done little or no programming before. The goal is to get to the point where you can attend the Bootcamp training course, and start to understand and write real code.

If you do have some prior programming experience, read on – there are some bits you can skip over, but don’t move too fast as there could be something you’re not so familiar with that’s worth revising!

The objective of this section is to be able to understand and use the following C# programming concepts:

- Variables and assignment

- Conditional logic - the if statement

- Looping logic - the for, foreach and while statements

- Arrays

- Creating and calling methods and classes

- Building a simple console application

These topics will be addressed by way of Microsoft-provided tutorials.

You’ll also learn the very basics of the Git version control system, and how to put the code you’ve written on GitHub.

Setup

Development tools

Before beginning this preparation you will need a development machine with the following already installed – this information has been provided to your employer as technical prerequisites.

- .NET SDK (version 6.0.115)

- Visual Studio Code (VSCode) – this is the Integrated Development Environment for which the exercise content was written, and the following VSCode extensions should be installed.

Note that the course assumes that you will be using Visual Studio Code as your IDE, which is a more lightweight set of tools than the full Visual Studio package.

Git setup

Before beginning Bootcamp, you will need to be able to upload your exercise solutions to GitHub so that your trainer can see them. To do this, you’ll need the following.

If you do not already have a GitHub account, go to GitHub and “Sign Up” for one (it’s free). It’s fine to use an existing account if you have one.

If you have never used Git before, follow GitHub’s short tutorial just to get used to the basics. You can skip over “pull requests” for now if you wish – you won’t need them until you start collaborating with other team members.

Then create a Personal Access Token (PAT) on GitHub by following this tutorial. Choose “classic” rather than “fine-grained”. Name your token something like “C sharp training”, give it an expiry time of at least 6 weeks and ensure it has the repo scope. Make sure to copy the token because you’ll need it to connect to GitHub in a moment.

To check that your Git installation is working properly, create a folder in which you’ll store the code you’ll write for this course (e.g., C:\Work\Training). Then in your Git client run a version of the following command:

git clone https://git@github.com:YourName/YourProject.git

You’ll need to replace YourName by your GitHub account name, and YourProject by the name of one of your GitHub repositories (you should have at least one if you’ve followed the tutorial on GitHub). The easiest way to get a url for the git clone command is to navigate to your project in GitHub and find the green “Code” button. Click this and you should get a “Clone with HTTPS” box containing the correct URL to copy and use.

Once you run the git clone command above, you should be prompted for your GitHub login details. Instead of your password, use the PAT you created earlier.

You should find that your project is now downloaded locally into a new folder inside C:/Work/Training. We’ll explore more about how to work with Git as we progress through the course.

If you have any trouble getting the above to work, get in touch with your trainer who should be able to give you some pointers to get moving.

Pre-bootcamp content structure

This pre-bootcamp section is built from the C# Fundamentals for Absolute Beginners video series on Microsoft Virtual Academy. Note that the number and order of the videos on the Microsoft site is different from what is linked here; we suggest that you rely on the links in this section rather than clicking on the ‘Next’ video.

As you go through the standard tutorials, you’ll be guided through a series of exercises to build a simple application with the tools that have been presented.

By the end of this section you should have all the basics you need to start writing your own C# code. But don’t panic if it doesn’t all make sense – help is on hand if you get stuck, and once you’ve learnt the basic syntax then during Bootcamp we’ll demystify any remaining puzzles and take your programming to the next level.

If you do already have prior experience you’re welcome to skim over any of the reading material that you’re already confident in. But make sure you’re proud of the code you’re writing, and if you’re not completely confident then re-read the material even if it’s largely familiar.

Further reading

There are two books that we recommend as C# learning resources. They’re not essential, but we’d recommend that all employers have at least the second of these on hand for reference as you continue your C# journey. Which book is best for your first foray into C# depends on your preferred learning style.

Head First C# (Stellman & Greene). This is a very practical hands-on introduction to C#, which explains everything from the basics to fairly complex concepts. It adopts a very informal, graphical style. The premise of the book is very much to throw you in at the deep end – the first chapter involves copying out the code for a computer game, most or all of which you won’t initially understand, but it uses this to explain the first concepts while still giving you an exciting and fully featured application to play with. As you progress through the book the same pattern continues, but you’ll understand increasingly large chunks of the code, until you reach the point where you understand everything!

Learning C# 3.0 (Liberty & MacDonald). This introductory book is more traditionally structured. It introduces topics one by one, using simple examples that focus only on the concepts you’ve already learnt. As a reference book for C# learners this is more suitable than Head First C#, because it’s easier to find a sub-section concentrating on some specific topic you want to look up. But some people find it a bit dry, and you might not want to read it cover-to-cover. Note that version 3.0 of C# is now a bit dated, but very little of the content is actually wrong – you’re just missing out on some of the newer features (and we’ll cover these separately where necessary). Sadly there’s not yet a more recent edition of this series – hopefully they’ll produce one soon!

Both books support the “Look Inside” feature on Amazon.co.uk, so if your employer doesn’t already have a copy lying about you can use that to get a feel for the content and then try to persuade them to buy you a copy of the best one! As mentioned above, we recommend that employers with C# learners have a copy of Learning C# 3.0, although there are other books they might point you to as alternatives.

Both books include various quizzes and exercises to complete. The answers are all in the books so you can test yourself. They’re worth doing – the only way to learn to program is to practice!

Pre-bootcamp Learner Introduction

This section is designed to teach you the basic of Java programming. You should be able to follow it even if you’ve done little or no programming before. The goal is to get to the point where you can attend the Bootcamp training course, and start to understand and write real code.

If you do have some prior programming experience, read on – there are some bits you can skip over, but don’t move too fast as there could be something you’re not so familiar with that’s worth revising!

The objective of this section is to be able to understand and use the following Java programming concepts:

- Building a simple console application

- Variables and control flow (

if,for,whileetc.) - Number and string data types

- Object oriented programming basics

- Using collections

- Input and output

- Exception handling

- Packages and packaging

These topics will be addressed by way of official Java tutorials.

You’ll also learn the very basics of the Git version control system, and how to put the code you’ve written on GitHub.

Setup

Development tools

Before beginning this preparation you will need a development machine with the following already installed – this information has been provided to your employer as technical prerequisites.

- Java Development Kit (version 17.0.6)

- Visual Studio Code (VSCode) – this is the Integrated Development Environment for which the exercise content was written, and the following VSCode extension should be installed.

Note that the course assumes that you will be using Visual Studio Code as your IDE, which is a more lightweight set of tools than Eclipse or another IDE that you might use with your work.

Git setup

Before beginning Bootcamp, you will need to be able to upload your exercise solutions to GitHub so that your trainer can see them. To do this, you’ll need the following.

If you do not already have a GitHub account, go to GitHub and “Sign Up” for one (it’s free). It’s fine to use an existing account if you have one.

If you have never used Git before, follow GitHub’s short tutorial just to get used to the basics. You can skip over “pull requests” for now if you wish – you won’t need them until you start collaborating with other team members.

Then create a Personal Access Token (PAT) on GitHub by following this tutorial. Choose “classic” rather than “fine-grained”. Name your token something like “Java training”, give it an expiry time of at least 6 weeks and ensure it has the repo scope. Make sure to copy the token because you’ll need it to connect to GitHub in a moment.

To check that your Git installation is working properly, create a folder in which you’ll store the code you’ll write for this course (e.g., C:\Work\Training). Then in your Git client run a version of the following command:

git clone https://git@github.com:YourName/YourProject.git

You’ll need to replace YourName by your GitHub account name, and YourProject by the name of one of your GitHub repositories (you should have at least one if you’ve followed the tutorial on GitHub). The easiest way to get a url for the git clone command is to navigate to your project in GitHub and find the green “Code” button. Click this and you should get a “Clone with HTTPS” box containing the correct URL to copy and use.

Once you run the git clone command above, you should be prompted for your GitHub login details. Instead of your password, use the PAT you created earlier.

You should find that your project is now downloaded locally into a new folder inside C:/Work/Training. We’ll explore more about how to work with Git as we progress through the course.

If you have any trouble getting the above to work, get in touch with your trainer who should be able to give you some pointers to get moving.

Pre-bootcamp content structure

This pre-bootcamp section is built relevant sections of the official Java Tutorials. This is available in different formats:

- Online: The Java Tutorials

- As a physical book: The Java Tutorial: A Short Course on the Basics

Note that both of these versions were written for Java SE 8, whereas you will be using the more modern Java 17. Unfortunately a newer official tutorial set has not been produced. The content is still appropriate – during this time we will be focusing on the language fundamentals, which have not changed.

Don’t just sit down and read the book cover-to-cover. You’ll probably get bored! Instead, work through the topics in this course and we will:

- Suggest appropriate sections to read

- Provide some exercises that you should use to practice what you learn

By the end of this section you should have all the basics you need to start writing your own Java code. But don’t panic if it doesn’t all make sense – help is on hand if you get stuck, and once you’ve learnt the basic syntax then during Bootcamp we’ll demystify any remaining puzzles and take your programming to the next level.

If you do already have prior experience you’re welcome to skim over any of the reading material that you’re already confident in. But make sure you’re proud of the code you’re writing, and if you’re not completely confident then re-read the material even if it’s largely familiar.

Pre-bootcamp Learner Introduction

This section is designed to teach you the basic of JavasScript programming. You should be able to follow it even if you’ve done little or no programming before. The goal is to get to the point where you can attend the Bootcamp training course, and start to understand and write real code.

If you do have some prior programming experience, read on – there are some bits you can skip over, but don’t move too fast as there could be something you’re not so familiar with that’s worth revising!

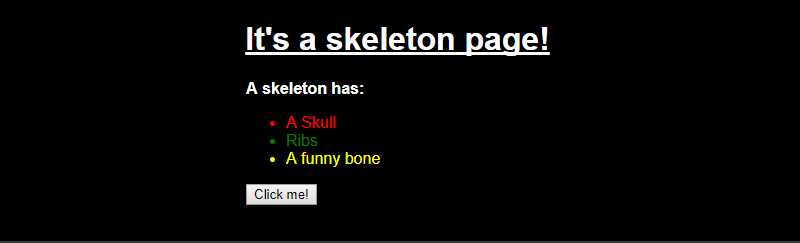

The objective of this course is to be able to understand and use the following JavaScript programming concepts:

- Variables and assignment

- Conditional logic – the if statement

- Looping logic – the for and while statements

- Arrays and objects

- Functions

- Modules

- Building a simple console application

You’ll also learn the very basics of the Git version control system, and how to put the code you’ve written on GitHub.

Anything beyond this starting point is good! But the above represents the minimum you need to know in order to be able to effectively tackle the Bootcamp.

Setup

Development tools

Before beginning this preparation you will need a development machine with the following already installed – this information has been provided to your employer as technical prerequisites.

- VSCode – this is the Integrated Development Environment for which the exercise content was written, and the following VSCode extension should be installed.

- Node (version 18)

- npm (version 9)

Git setup

Before beginning Bootcamp, you will need to be able to upload your exercise solutions to GitHub so that your trainer can see them. To do this, you’ll need the following.

If you do not already have a GitHub account, go to GitHub and “Sign Up” for one (it’s free). It’s fine to use an existing account if you have one.

If you have never used Git before, follow GitHub’s short tutorial just to get used to the basics. You can skip over “pull requests” for now if you wish – you won’t need them until you start collaborating with other team members.

Then create a Personal Access Token (PAT) on GitHub by following this tutorial. Choose “classic” rather than “fine-grained”. Name your token something like “JavaScript training”, give it an expiry time of at least 6 weeks, and ensure it has the repo scope. Make sure to copy the token because you’ll need it to connect to GitHub in a moment.

To check that your Git installation is working properly, create a folder in which you’ll store the code you’ll write for this course (e.g., C:\Work\Training). Then in your Git client run a version of the following command:

git clone https://git@github.com:YourName/YourProject.git

You’ll need to replace YourName by your GitHub account name, and YourProject by the name of one of your GitHub repositories (you should have at least one if you’ve followed the tutorial on GitHub). The easiest way to get a url for the git clone command is to navigate to your project in GitHub and find the green “Code” button. Click this and you should get a “Clone with HTTPS” box containing the correct URL to copy and use.

Once you run the git clone command above, you should be prompted for your GitHub login details. Instead of your password, use the PAT you created earlier.

You should find that your project is now downloaded locally into a new folder inside C:/Work/Training. We’ll explore more about how to work with Git as we progress through the course.

If you have any trouble getting the above to work, get in touch with your trainer who should be able to give you some pointers to get moving.

Pre-course content structure

This course is designed to teach you the basics of JavaScript programming. You should be able to follow it even if you’ve done little or no programming before. The goal is to get to the point where you can attend the Bootcamp training course, and start to understand and write real code.

If you do have some prior programming experience, read on – there are some bits you can skip over, but don’t move too fast as there could be something you’re not so familiar with that’s worth revising! Make sure you complete all the exercises that we suggest, and if you find anything tricky then treat that as a prompt to revisit the reading material we suggest in more detail.

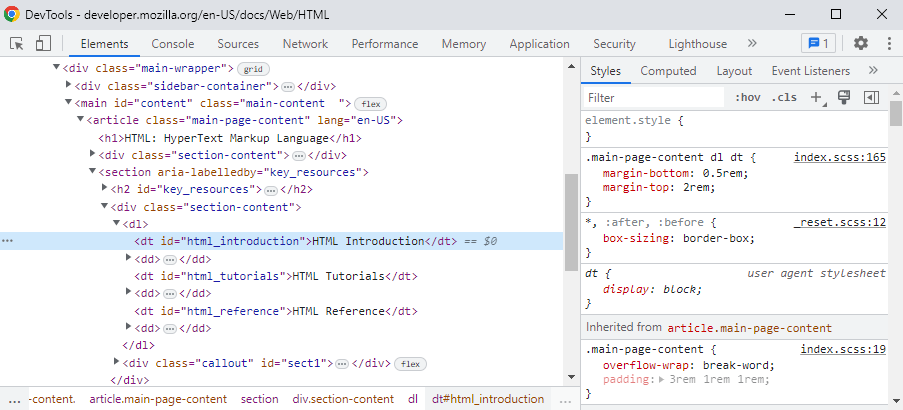

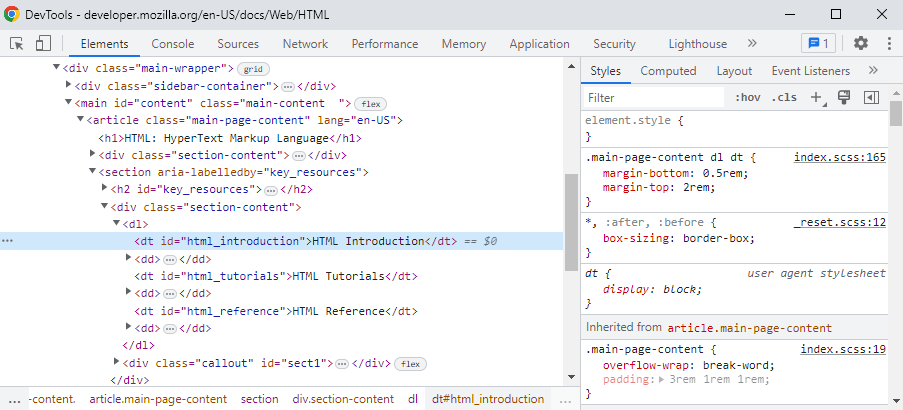

Reading material

The recommended route to learning the necessary JavaScript is to work through the relevant sections of Mozilla’s JavaScript Guide, but don’t just sit down and read the guide cover-to-cover. You’ll probably get bored! Instead, work through the topics in this course and we will:

- Suggest appropriate sections to read

- Provide some exercises that you should use to practice what you learn

You will almost certainly find it helpful, between reading a section of the guide and doing the exercises, to copy some of the code samples from the guide and run them. Change the code slightly, and run them again, then see if the result is what you expected. Doing this every time you meet a new concept will help you to pick it up more quickly and understand it in more depth.

By the end of this course, you should have all the basics you need to start writing your own JavaScript code. But don’t panic if it doesn’t all make sense – help is on hand if you get stuck, and once you’ve learnt the basic syntax you can come along to our Bootcamp where we’ll demystify the remaining puzzles and take your programming to the next level.

If you do already have prior experience then you’re welcome to skim over any of the reading material that you’re already confident in. But make sure you’re proud of the code you’re writing, and if you’re not completely confident then re-read the material even if it’s largely familiar.

Pre-bootcamp Learner Introduction

This section is designed to teach you the basic of Python programming. You should be able to follow it even if you’ve done little or no programming before. The goal is to get to the point where you can attend the Bootcamp training course, and start to understand and write real code.

If you do have some prior programming experience, read on – there are some bits you can skip over, but don’t move too fast as there could be something you’re not so familiar with that’s worth revising!

The objective of this section is to be able to understand and use the following Python programming concepts:

- Variables and assignment

- Data Types

- Functions

- Conditional logic – the if statement

- Looping logic – the for, foreach and while statements

- Importing Packages

- Creating and calling methods and classes

- Building a simple console application

You’ll also learn the very basics of the Git version control system, and how to put the code you’ve written on GitHub.

Anything beyond this starting point is good! But the above represents the minimum you need to know in order to be able to effectively tackle the Bootcamp.

Setup

Development tools

Before beginning this preparation you will need a development machine with the following already installed – this information has been provided to your employer as technical prerequisites.

- Poetry

- Visual Studio Code (VSCode) – this is the Integrated Development Environment for which the exercise content was written, and the following VSCode extension should be installed.

Git setup

Before beginning Bootcamp, you will need to be able to upload your exercise solutions to GitHub so that your trainer can see them. To do this, you’ll need the following.

If you do not already have a GitHub account, go to GitHub and “Sign Up” for one (it’s free). It’s fine to use an existing account if you have one.

If you have never used Git before, follow GitHub’s short tutorial just to get used to the basics. You can skip over “pull requests” for now if you wish – you won’t need them until you start collaborating with other team members.

Then create a Personal Access Token (PAT) on GitHub by following this tutorial. Choose “classic” rather than “fine-grained”. Name your token something like “Python training”, give it an expiry time of at least 6 weeks and ensure it has the repo scope. Make sure to copy the token because you’ll need it to connect to GitHub in a moment.

To check that your Git installation is working properly, create a folder in which you’ll store the code you’ll write for this course (e.g., C:\Work\Training). Then in your Git client run a version of the following command:

git clone https://git@github.com:YourName/YourProject.git

You’ll need to replace YourName by your GitHub account name, and YourProject by the name of one of your GitHub repositories (you should have at least one if you’ve followed the tutorial on GitHub). The easiest way to get a url for the git clone command is to navigate to your project in GitHub and find the green “Code” button. Click this and you should get a “Clone with HTTPS” box containing the correct URL to copy and use.

Once you run the git clone command above, you should be prompted for your GitHub login details. Instead of your password, use the PAT you created earlier.

You should find that your project is now downloaded locally into a new folder inside C:/Work/Training. We’ll explore more about how to work with Git as we progress through the course.

If you have any trouble getting the above to work, get in touch with your trainer who should be able to give you some pointers to get moving.

Pre-course content structure

This course is designed to teach you the basics of Python programming. You should be able to follow it even if you’ve done little or no programming before. The goal is to get to the point where you can attend the Bootcamp training course, and start to understand and write real code.

If you do have some prior programming experience, read on – there are some bits you can skip over, but don’t move too fast as there could be something you’re not so familiar with that’s worth revising! Make sure you complete all the exercises that we suggest, and if you find anything tricky then treat that as a prompt to revisit the reading material we suggest in more detail.

Reading Material

This course will consist of a reading section followed by an exercise. You will almost certainly find it helpful, between reading a section and doing the exercises, to copy some of the code samples from the reading and run them. Change the code slightly, and run them again, then see if the result is what you expected. Doing this every time you meet a new concept will help you to pick it up more quickly and understand it in more depth.

By the end of this course, you should have all the basics you need to start writing your own Python code. But don’t panic if it doesn’t all make sense – help is on hand if you get stuck, and once you’ve learnt the basic syntax you can come along to our Bootcamp where we’ll demystify the remaining puzzles and take your programming to the next level.

If you do already have prior experience then you’re welcome to skim over any of the reading material that you’re already confident in. But make sure you’re proud of the code you’re writing, and if you’re not completely confident then re-read the material even if it’s largely familiar.

If you get stuck or confused while working through this course then you might find these resources helpful:

Pre-bootcamp Learner Notes

Once you’ve followed the Pre-course Introduction section, follow through these tutorial videos and self-guided exercises. This will ensure that you’ve got a grounding in the fundamentals of the C# language and Git, so that you’re ready for Bootcamp.

1. Your first C# program

Watch the following videos from the Microsoft Virtual Academy. Remember that throughout these videos they are using Visual Studio to demonstrate, while we will be using Visual Studio Code. The VSCode interface is similar to but simpler than Visual Studio.

Prior experience

If you have significant prior experience:

- You may want to watch the Course Introduction to get a flavour for the course.

- If you already know how to write a “Hello, World” type program in C#, you can skip the other videos and go straight on to the exercises. Even if these first exercises seem trivial it’s best to do them since we’ll be building on these programs soon.

If you feel you’re somewhere short of “significant” experience, but still find it all fairly obvious, remember the “2x speed” option on the video. You’ll get used to the change in pitch quite quickly!

Exercise 1.1

As we progress through this section, we’re going to work through and build a very simple Calculator application. It will be a console (command line) application and so entirely text based. Here’s how to get started:

- Create a new folder for your project (say

C:\Work\Training\Calculator) and then open it in VSCode (File > Open Folder…) - Open a terminal console in VSCode (Terminal > New Terminal)

- Run the following command:

dotnet new console --framework net6.0 --use-program-main

You should see some code in Program.cs that looks like:

namespace Calculator;

class Program

{

static void Main(string[] args)

{

Console.WriteLine("Hello, World!");

}

}

- We’ll discuss what the

namespace,class, andMainlines mean later on. For now, just make sure that all the code you want to run is inside the pair of curly brackets understatic void Main. - Change your code to print out a welcome message for your calculator app – e.g. “Welcome to the calculator!”.

- Run the program and check that it works:

- Click the Play/Debug button on the left of the window or F5 key

- Build Assets

- Click the green play button near the top of the screen

- You should see the program output in the terminal within VSCode

The ‘Creating Your First C# Program’ tells you to put the line Console.ReadLine() at the end of the program so the output window doesn’t disappear immediately. This is no longer necessary so you can leave it out.

Exercise 1.2

So far all the code you’ve looked at will always output the same thing every time it’s run. If we want the user to control what the program does we can read input from the command line, using the Console.ReadLine method:

string aFriend = Console.ReadLine();

Create a new project in the same way you did before and give it a suitable name – say, “Greeting”. Write a program which displays a message asking the user their name, accepts some input, and then prints “Hello, [ name ]. Nice to meet you!” where [ name ] is the name they entered. Make sure to save it as we’ll come back to modify it later.

Exercise 1.3

Having started your Calculator program, we want to put it on GitHub for all the world to see. Or at least, so your tutor can take a look in due course. In git, each project generally lives in its own ‘repository’. Here’s how to create one:

- Go to GitHub

- Click the green “New” button to start creating a new repository

- Enter a suitable name for your repository – “Calculator” would do! Make sure the “Owner” is set to your name. Enter a description if you like too.

- It would be handy to “Add .gitignore” – choose “VisualStudio” as the template. (.gitignore is a file describing the types of files that shouldn’t be stored in git; for example, temporary files or anything else that shouldn’t be shared with other team members. You pick a Visual Studio-specific one so it’s preconfigured with all the files Visual Studio will create that really shouldn’t be stored in git).

- Leave everything else at the defaults. In particular you want a “Public” project, unless you want to pay for the privilege.

- Click “Create repository”.

That gives you a (nearly) empty repository. Now you want to link that up to your Calculator. Open up Git Bash (or your command line utility of choice). Navigate to where your Calculator lives (e.g. cd C:\Work\Training\Calculator, or right-click and use ‘git bash here’). Then run the following commands:

git init

git remote add origin https://git@github.com:YourName/Calculator.git

git fetch

git checkout main

Replace “YourName” in the above with your GitHub username. You can find the correct text to use by clicking the green “Code” in your project on GitHub and then finding the “Clone with HTTPS” URL.

We’ll discuss these git commands later in the Bootcamp, and for now you don’t need to worry about what they do exactly. Broadly what we’re doing is setting up a local repository that’s linked to the one on GitHub so changes you make can be uploaded there.

If you’re using a GitHub account you created in 2020 or earlier, you may need to replace main with master above because that used to be the default branch name. If you’ve just signed up for GitHub now that won’t be an issue.

You should find that there are no errors, and that the .gitignore file that you asked GitHub to create now exists locally. However if you refresh your web browser on your GitHub project you’ll see that hasn’t changed – the Calculator code is only on your local machine. You can fix this by running this in your command prompt:

git add .

git status

git commit -m "My first piece of C# code"

git push

Now refresh your GitHub browser window and your source code should be visible!

Again, we’ll discuss what these are doing later – for now just remember that you should run these four commands, replacing the text in quotes with a short summary of what you’ve changed, every time you’ve made a change to your code and want to update your GitHub repository with that change.

When you’re prompted to submit your answers exercises during the course, you can just supply the GitHub link – something like https://github.com/YourName/Calculator. Your trainer can see the code, and provide feedback on it if appropriate. You don’t need to submit anything at this stage, you can move on to the next exercise.

Some notes on git

For the time being, you don’t need to worry too much about what the various commands above actually did. However, here are some details to satisfy your curiosity:

git init: Turn the current directory into a git repository on your local machine. A hidden directory .git is created to manage all the git internals – the rest of your files stay unchanged.git remote add origin git@github.com:YourName/Calculator.git: Git is a distributed version control system. Your local machine contains a complete and working git repository, but other people can also have a complete and working copy of the git repository. If one of those “other people” is GitHub, that provides a convenient way of sharing code between multiple people. This line just says that GitHub (specifically, your Calculator repository) should have a remote copy, and we’re naming that copy “origin”. The name “origin” is just a git convention meaning “the main copy” – but actually you could use any name, and Git doesn’t really do anything special to make one copy “more important” than another.git fetch: This downloads all the latest changes from GitHub. In this case, that means downloading the .gitignore file to your machine. But it’s not visible on your local machine yet…git checkout main: This tells Git which version of the code you want to see. The “main” branch is the main copy of the code that’s currently being worked on. You’ll notice “Branch: main” is displayed in GitHub too – you can create multiple branches to track progress on different features under development, and this is useful if several people are working on your code at once.git add .: This tells Git to record the changes made to all the files at.which means the current working directory; you could equally well specify each individual file by name.git status: This doesn’t actually do anything, but displays the current status of your repository – you should see some files listed as being changed and ready for commit.git commit -m "My first piece of C# code": This tells Git to save those changes in its history. That way you can go back to this version of the code later, should you need to. Git provides a history of everything that’s happened. The-mprecedes a message which explains the purpose of the change.git push: This sends all the changes stored in your local repository up to GitHub. It’s just like the earliergit fetch, but in the opposite direction.

If you want to see the history of your commits, click on the “commits” label in GitHub, or run git log locally. There’s also a local graphical view by running gitk.

2. Data types and variables

Watch the following videos:

- Working with Code File, Projects and Solutions – remember that in our course we use VSCode as the IDE rather than Visual Studio, so the descriptions of the user interface will be different

- Understanding Data Types and Variables

Prior experience

If you’re familiar with data types and variables in C#, test yourself on the exercises below. If you can do it all easily, it’s fine to skip the rest of the second video.

Exercise 2.1

Write a program to calculate and display the area of a circle with a radius of 2.5 units. You can use the inbuilt constant Math.PI for the value of pi.

Exercise 2.2

Improve your program to ask the user for a radius, then output the area of the corresponding circle. Since Console.Readline always inputs a string, you’ll need to convert it as follows:

double radius = Double.Parse(myString);

What do you think will happen if the user enters something other than a number? Try running your program and see!

Exercise 2.3

Add the following functionality to your Calculator project:

- Prompt the user to enter one number

- Prompt the user to enter a second number

- Multiply the two numbers together and print out the result

Once you’ve got your program working, commit the changes to GitHub. You should be able to follow the same steps as last time (from git add onward), or here’s an abbreviated version:

git commit -a -m "Added a simple multiplier"

git push

The -a argument to git commit tells Git to first add any changes, and then commit them. Note that this only works if Git already knows about the files – it won’t pick up any newly created files.

3. Branching

Watch the following videos:

Prior experience

Here are the key things you should be learning from the videos. If you’re already familiar with these concepts in C#, it’s ok to skip the videos and just complete the exercises.

if(condition) { } else if(condition2) { } else { }- The difference between

a == banda = b - Joining strings together with

string1 + string2 - Variable declaration (

int a) and assignment (a = 3)

Exercise 3.1

Open the Greeting program you wrote in Exercise 1.2 and update it to do the following:

- Ask the user for a first name and surname separately then print a greeting, e.g. “Hello, Anne Smith!”

- If the user enters a blank input for either name, output a message commenting on the fact, e.g. “Hello, Anne! I see you only have one name, but that’s fine!”

- If the user enters a blank input for both names, output a message complaining that the user isn’t cooperating

Exercise 3.2

Update your Calculator program to support more operations than just multiplication, by promopting the user for an operator before they enter the numbers. So a typical program run might look like this:

Welcome to the calculator!

==========================

Please enter the operator: +

Please enter the first number: 10

Please enter the second number: 4

The answer is: 14

To keep things simple, we’ll just use four operators:

+– addition-– subtraction*– multiplication/– division

There are lots of ways to solve this, although not many have been covered in the videos yet. Feel free to do something fancier than simple if-statements if you wish! Perhaps you could make a Git commit after each so you can review the different approaches later.

Make sure you git push when you’re done so your trainer can see the results.

4. For loops and arrays

Watch the following videos:

Prior experience

Here are the key things you should be learning from the videos. If you’re already familiar with these concepts in C#, it’s ok to skip the videos and just complete the exercises.

for (int i=0; i<10; i++) { }foreach (int value in list)- Using

breakandcontinueto control loop behaviour - Creating arrays using

new int[4]andnew int[] {1,2,3,4} - Accessing arrays using

array[2] - Treating strings as character arrays using

toCharArray

Exercise 4.1

Write a program that will accept an integer input from the user, and find the sum of all integers from 1 up to that value (for example, the input 4 should give output 10).

Exercise 4.2

Create a program to display a set of times-tables, starting at 2 and going up to a number chosen by the user. For example, if the user enters 4 the output might look like this:

2 x 2 = 4

3 x 2 = 6

4 x 2 = 8

2 x 3 = 6

3 x 3 = 9

4 x 3 = 12

2 x 4 = 8

3 x 4 = 12

4 x 4 = 16

Exercise 4.3

Modify the Calculator so it can do calculations on any number of numbers. For example:

3 + 4 + 5 = 12

1 * 2 * 3 * 1 = 6

120 / 10 / 2 / 2 = 3

Let’s keep things simple by using the same operator each time. So a typical output might look like this (for the first sum above):

Welcome to the calculator!

==========================

Please enter the operator: +

How many numbers do you want to +? 3

Please enter number 1: 3

Please enter number 2: 4

Please enter number 3: 5

The answer is: 12

See what you can come up with, and push the result.

Exercise 4.4 (extension)

Create a program which inputs a number, then inputs that many words, then outputs each of them in reverse order. For example:

Enter number of words: 3

Enter a word: I

Enter a word: love

Enter a word: programming

Your sentence is: I love programming

Your reversed words are:

I

evol

gnimmargorp

Exercise 4.5 (extension)

Create a program that asks for an integer input and outputs whether or not that number is a square number. (C# has a method called Math.Sqrt() for finding square roots, but don’t use that for this exercise!)

5. Methods and while loops

Watch the following videos:

Prior experience

Here are the key things you should be learning from the videos. If you’re already familiar with these concepts in C#, it’s ok to skip the videos and just complete the exercises.

- Defining your own static method

- Calling a static method, with parameters

- Returning values from methods

while (condition) { }

Don’t forget to go back and look at the videos if anything in the exercises is puzzling!

Exercise 5.1

Create a program which keeps accepting input words and putting them together into a sentence until the user enters a blank input. For example:

Enter a word: I

Current sentence: I

Enter a word: love

Current sentence: I love

Enter a word: programming

Current sentence: I love programming

Enter a word:

Final sentence: I love programming

Exercise 5.2

One of our goals as programmers should be to write “clean code” – that is, code that is simple and understandable. Take a look at this piece of code:

static void Main(string[] args)

{

PrintWelcomeMessage();

PerformOneCalculation();

}

It’s hopefully fairly obvious what this code is trying to do. Refactor your Calculator code so it looks the same as this example – that will involve splitting your existing code into two new methods, and then having the Main method just call them both in turn.

“Refactoring” is the process of improving your code without changing its behaviour. Those improvements might be to make it more readable, or to make it easier to change and extend in future.

Exercise 5.3

Your Calculator probably has at least a couple of pieces of code of the form:

Console.Write("Please enter a number: ");

string answer = Console.ReadLine();

int number = int.Parse(answer);

Create a method that encapsulates this pattern, and use it to replace all the code that’s similar to the above. The same method should be usable every time you want to print out a message and interpret the response as an integer.

Exercise 5.4

Make your calculator keep running – once it’s calculated an answer, it should just loop round and start again. Presumably you don’t want to keep printing the welcome message every time though.

Exercise 5.5

Force the user to enter valid numbers – when prompting for an integer, it’s annoying if your program crashes if the user types in a string instead. Have it just ask again in this case.

For this you might find the int.TryParse method useful. It tries to interpret a string as a number, and returns false if it fails or true if it succeeds:

int answer;

if (int.TryParse("42", out answer))

{

// It worked! We can use answer here

}

else

{

// It didn't work... Don't try to use answer because it's not be set yet

}

Don’t worry about the out keyword for now – it just allows the TryParse method to return the answer as well as the true / false value. Having a method return two values isn’t normally a good idea, but this is one case where it’s a real improvement.

Once you’ve got all that working, push it to GitHub before you move on.

6. Strings, dates and times

Watch the following videos:

Prior experience

This topic’s videos are quite short, particularly if you run them at 2x speed. They contain some snippets that are probably interesting to all but C# experts, so our recommendation is to watch them through. Who knows, perhaps they’re getting interesting even if the earlier ones were a bit basic for you?

Having had a quick skim through them you can use the exercises below to check your understanding.

Exercise 6.1

Update the Greeting program you wrote earlier, so that:

- If the user inputs their name in all capitals, the program tells them “no need to shout!” – but still displays the greeting with their name in all lower-case

- If the user’s full name is longer than 12 characters, the program comments on it being a long name

- The program also asks for the user’s birthdate, and displays a different greeting if they are under 10 years old

Exercise 6.2

Review how your calculator deals with strings at the moment. Can you use string.Format to improve your code? Perhaps try adding some more informative text now that you have a neat way to print it out.

Console.WriteLine has an overload (alternative version) that takes exactly the same parameters as string.Format – so if you’ve written Console.WriteLine(string.Format(...)), you can replace it with just Console.WriteLine(...). You might be able to take advantage of this shortening from time to time.

Exercise 6.3

We’d like to enhance the calculator to operate on dates as well as numbers. Specifically, we’ll add support for taking a date, and adding a number of days to it. Working with dates doesn’t really fit into the current interface, so we’ll modify our Main method to look something like this:

private const int NumberCalculator = 1;

private const int DateCalculator = 2;

static void Main(string[] args)

{

PrintWelcomeMessage();

while (true)

{

int calculationMode = AskForCalculationMode();

if (calculationMode == NumberCalculator)

{

PerformOneNumberCalculation();

}

else

{

PerformOneDateCalculation();

}

}

}

And the output might look something like this:

Welcome to the calculator!

==========================

Which calculator mode do you want?

1) Numbers

2) Dates

> 2

Please enter a date: 7/2/17

Please enter the number of days to add: 2

The answer is: 09/02/2017

Which calculator mode do you want?

1) Numbers

2) Dates

>

Implement some function along these lines. You’ll need DateTime.TryParse, which works much like the int equivalent.

7. Classes

Watch the following videos:

- Understanding Classes

- More About Classes and Methods

- Understanding Scope and Accessibility Modifiers

Prior experience

Here are the key take-aways from each of the videos:

- Understanding Classes: Creating your own class, with properties (

getandset) and methods. You should know the difference between a property and a method. - More About Classes and Methods: Object instances, references and

nullvalues. The difference between static and instance methods. - Understanding Scope and Accessibility Modifiers: Why in

for(int i=0; i<10; i++)you cannot accessioutside of the loop. Why you cannot declare two variables of the same name in the same method. The difference betweenprivate,public,protectedandinternal.

If you have lots of prior C# experience and could confidently explain all the above, then it’s ok to skip the videos and go straight on to the exercises. Otherwise it’s best to watch the videos though, even if you do so on 2x speed.

Exercise 7.1

In your Greeting program, create a new Person Class. Modify your existing code so that all the user inputs and displaying the greetings are done by methods on this class. What properties does your class need?

Exercise 7.2 (extension)

Your Greeting program needs to perform several checks to decide what to say to a user. Create a new method for each of these checks – for example, one might be called IsUnderTen() and return true if the Person is aged under 10 (it’s common for methods that return booleans to have names beginning with ‘Is’). Think about the appropriate access level for each of these methods.

Exercise 7.3

Your Calculator application is getting quite large. Hopefully you’ve split it up into reasonably small and self-describing methods, but that’s still a lot of code to lump together into a single file. Let’s split it up a bit.

A sensible structure for your Calculator program would be the following:

- A main class, which you might leave with the default name

Program, which contains theMainmethod and works out whether you want numbers mode or dates mode. - A separate class that deals with number calculations. Perhaps

NumberCalculator. - Another class that deals with date calculations. Perhaps

DateCalculator.

Try separating your application into classes like this.

Here are some additional questions to answer as you go:

- What challenges do you face? – what do you find that’s not satisfactory or straightforward? How do you overcome these challenges?

- How many of your methods need to be static? – see how few you can get away with.

8. Namespaces and references

Watch the following videos:

- Understanding Namespaces and Working with the .NET Class Library

- Creating and Adding References to Assemblies

Prior experience

Most of the video content this time is about the practicalities of handling multi-project solutions within Visual Studio, and importing references to other libraries. They’re worth a skim (i.e. double speed if you feel you know most of it already) even if you have prior experience in writing the actual C# code.

Exercise 8.1